Think of quality assurance (QA) for products. Security assurance plays a similar, yet distinct, role for systems and applications and, yes, now also for AI.

It's all about building high confidence that your security features, practices, and controls are actually doing their job and enforcing your security policies. It's a cross-functional effort that ensures AI products are not just built securely, but continue to run securely. This isn't just about patching vulnerabilities; it's about a continuous cycle of improvement!

Security assurance, traditionally a cross-functional effort to ensure high confidence in security features and policies, now plays an even more vital role in the AI era. Much like quality assurance in manufacturing, security assurance meticulously examines both the finished AI products and the processes that create them. It aims to identify gaps, weaknesses, and areas where security controls might not be operating as intended, driving continuous improvement across all security domains. This proactive approach helps build confidence that AI software is not only built securely but continues to operate securely in the face of evolving threats.

The Fantastic Four: Core Security Assurance Functions

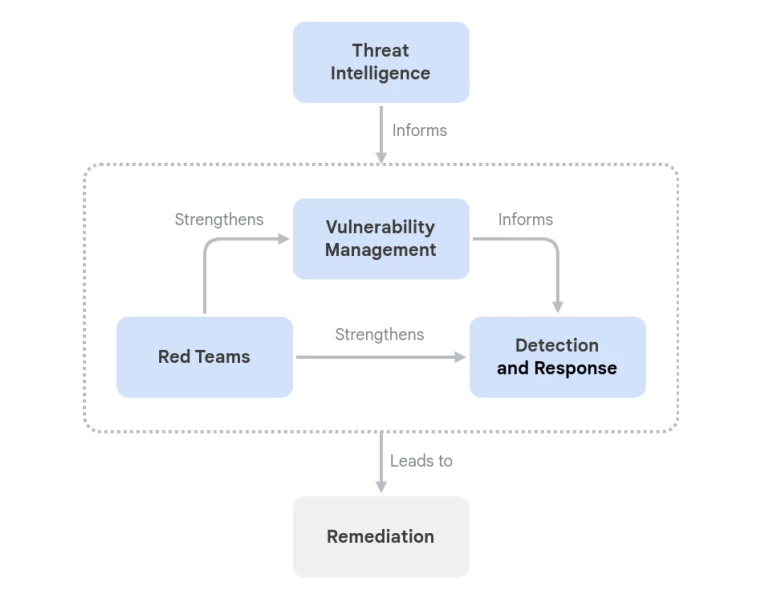

A new publication from Google highlights five key areas that work together like a well-oiled machine to keep AI systems secure:

- Vulnerability Management: The tireless detectives constantly scanning for known weaknesses across infrastructure, code, and AI-specific systems. They're the first line of defense!

- Detection & Response (D&R): The rapid-response team that springs into action when malicious activities bypass existing controls. They're monitoring for suspicious activities and ready to contain and neutralize threats.

- Threat Intelligence: The external world-watchers, analyzing adversaries, their motives, and their evolving techniques. They provide crucial insights to help anticipate and defend against future attacks.

- Red Teaming: The friendly hackers who proactively attack their own systems to test defenses and D&R's ability to respond. They simulate real-world attacks to strengthen security posture.

Let’s extract some of the lessons your teams can use today.

#1 Red Teaming

Red Team testing is essential for proactively securing AI systems. It involves applying adversarial techniques to identify and fix flaws in infrastructure, applications, processes, and technologies. The scope now includes data poisoning and tricking models, in an attempt to bypass existing filters and uncover new attack vectors, like erratic model behavior or data leaks. Red teams must also audit the AI supply chain, including components like PyTorch and data provenance.

AI's non-deterministic nature, especially in large language models, complicates traditional automated testing, making red team testing more critical. Agentic AI systems, with their autonomous use of digital tools and APIs, are vulnerable to prompt injection attacks, requiring red teamers to understand both technical and psychological nuances. End-to-end testing of the entire system, including AI model integration and agentic interactions with APIs, is crucial to uncover vulnerabilities.

Best Practices for Red Teams:

- Simulate Real-World Scenarios: Red teams should simulate attacks that threat intelligence teams are currently observing, as well as anticipate and execute attacks that adversaries might attempt in the future.

- Collaborate with D&R Teams: Work closely with Detection & Response teams to ensure attacks can be swiftly spotted and shut down, and to validate or identify potential gaps in detection capability.

- Inform Vulnerability Management: Findings and insights from red team exercises should be analyzed as candidates for vulnerability management, and detection and response automations and monitoring, informing secure design considerations for future product iterations.

- Focus on Conceptual Manipulation: Emphasize how AI systems can be manipulated at a conceptual level, beyond just technical vulnerabilities.

#2 Threat Intelligence

AI also changes the domain of threat intelligence. Threat Intelligence looks to the external world, analyzing an ever-evolving cast of threat actors to provide insights into current and future risks. Ai changes the balance here, and affects both sides.

Threat Intelligence must now expand its focus beyond traditional malware to encompass AI-specific threats, such as the theft of model weights or the misuse of GPUs for crypto-mining. A robust threat intelligence function is also essential for understanding the adversarial misuse of AI, helping teams identify potential attack targets and how bad actors might abuse a company's own AI capabilities for nefarious ends.

Furthermore, threat intelligence remains a key input for Red Teams, ensuring their work is informed by real-world scenarios and enabling them to create new adversarial test scenarios. While this function doesn't dramatically change with AI, its scope must certainly increase to cover the full spectrum of AI threats.

Best Practices for Threat Intelligence Teams:

- Stay Up-to-Date on AI Threat Landscape: Continuously analyze threat actors, their motives, and the specific techniques they are using and developing in the context of AI.

- Collaborate with D&R and Red Teams: Provide insights to Detection & Response teams to ensure they can defend against relevant threats, and inform rRed tTeams to help them simulate real-world attacks.

- Analyze External Adversaries: Understand the goals and methods (Tactics, Techniques, and Procedures, or TTPs) of external adversaries, especially concerning AI systems and AI in attacker tool chains.

- Review and Include SAIF AI Risks: Incorporate the Secure AI Framework (SAIF) AI risks into threat modeling.

#3 Detection and Response

Detection and Response (D&R) focuses on malicious actions that have bypassed existing controls. It acts as a monitoring layer, constantly looking for suspicious internal and external activities, and is prepared to quickly act on threats. AI clearly affects both the attacker and defender craft here (AI for detection and AI for better evasion)

The primary adaptation for Detection and Response (D&R) teams in the age of generative AI is an Expanded Scope of Suspicious Activity—it's no longer just about a weird login, but also a model behaving erratically or leaking private data. Consequently, Monitoring AI-Specific Activities has become essential; detection must now watch for unauthorized access to sensitive data, unusual traffic patterns, suspect code execution, unauthorized or questionable file modifications, and attempts at exfiltrating AI intellectual property.

To address the new risks, a critical element is responding to novel threats, which requires response teams to be prepared to contain and neutralize threats specific to AI systems using processes regularly practiced through red team exercises. This need for preparedness necessitates closer collaboration with red teams, as D&R teams must work closely with them to ensure attacks can be swiftly spotted and shut down, validate detection capabilities, and facilitate better AI skills and knowledge sharing.

Best Practices for Detection & Response:

- Establish a Baseline for AI Systems: Once you have a clear understanding of your AI assets and systems, analyze potential attacker paths to understand how your AI system can be attacked or used for malicious purposes. This will form the basis for testing detection and response mechanisms.

- Build Targeted Detection Logic: Develop targeted detection logic that identifies access attempts by looking for anomalous patterns outside the "baseline" for APIs used to access models.

- Practice Incident Response with AI Scenarios: Regularly practice response processes through red team exercises that simulate AI-specific attacks to ensure swift and effective threat containment and neutralization.

- Inform Secure Design: Findings and insights from red team exercises should inform secure design considerations for future product iterations, including D&R automations and monitoring.

- Collaborate with Threat Intelligence: Utilize insights from tThreat iIntelligence to ensure D&R teams can defend against relevant threats and to inform the development of new detection capabilities.

#4 Vulnerability Management

Vulnerability management continuously scans for known vulnerabilities (OS, applications, custom code, OSS, etc.) across the entire infrastructure, including storage systems, code repositories, and AI-specific components like data, training, testing systems, and data recipes.

Vulnerability management now requires several key adaptations for the generative AI era. First, there is an expanded scope of vulnerabilities, necessitating continuous scanning for known weaknesses across AI-specific systems, which includes AI data, training, and testing systems, as well as data recipes. A second critical focus is securing the model inference environment to prevent it from becoming a potential attack surface. Furthermore, addressing the AI software supply chain is paramount, as vulnerabilities in open-source AI libraries and frameworks like TensorFlow and PyTorch create cascading risks for AI systems, requiring their integration into vulnerability management processes.

This is compounded by novel model storage formats that can embed arbitrary code, creating significant security risks when models are downloaded from untrusted sources, a new class of vulnerability that must be addressed. Finally, data poisoning—a significant and challenging threat due to the vast size and public domain origins of training datasets—needs to be addressed through robust data provenance and access control.

Best Practices for Vulnerability Management:

- Continuous Scanning: Continuously scan for known vulnerabilities across all infrastructure, including AI-specific systems.

- Focus on Novel Attacks: Ensure that automatic and robust reporting and remediation of common vulnerabilities free up rRed tTeams to concentrate on novel and unique attacks.

- Integrate AI Supply Chain: Catalog AI infrastructure that uses third-party or open-source code and integrate it fully into vVulnerability mManagement processes.

- Secure Model Storage: Prioritize the use of safer model storage formats like Safetensors that prevent arbitrary code execution, thus reducing whole classes of vulnerabilities.

- Closely Collaborate with Red Teams: Utilize findings and insights from Rred tTeam exercises as candidates for vulnerability management and to inform secure design considerations for future product iterations.

Read the paper for complete guidance.