Blog Authors:

RK Neelakandan - Google Health Quality and Safety Engineering Lead

Bhavana Bhinder - Google Cloud, Office of CISO HCLS Europe Lead

The promise of AI in life sciences is immense—accelerating discovery, optimizing manufacturing, and ultimately, improving patient outcomes. But while much of the focus is on powerful models like Gemini, the reality in a regulated environment is simple: your AI is only as trustworthy as the data it’s built upon.

For leaders in GxP environments, deploying AI isn't just a technical challenge; it's a compliance imperative. This is a practical guide for building the robust data foundation your AI needs, ensuring you can innovate with confidence on Google Cloud. We’ll show you how to create the auditable, high-integrity data ecosystem required to satisfy regulators like the FDA.

It can be helpful to think about the security, privacy, and compliance of AI systems in terms of the four elements that make up typical AI use cases:

- Infrastructure that serves the model and protects the data and applications.

- Data that is used both to fine-tune models and to complement interactions with models through retrieval-augmented generation (RAG).

- Application protections that ensure that what users interact with is well designed and behaves as intended.

- Models, represented by their model weights, which bring generative capabilities to applications.

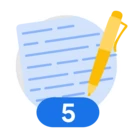

Data: 3 Critical Questions for Your GxP Data

Before you begin any AI project, you must first qualify your data. Asking these three questions will help you identify gaps and prepare your data for a compliant workflow.

1. How can you prove your data is reliable and has not been tampered with?

Data integrity and robust quality management form the bedrock of compliance. Auditors expect you to demonstrate an immutable, traceable history of your data, and Google Cloud capabilities, including Compliance Manager, can significantly streamline that work.

- Your Action Plan for Data Integrity:

- ✅ Do you have an immutable record of all data changes? BigQuery's time travel feature lets you query a table as it existed at any point within its time travel window (configurable from two to seven days), giving you a clear view of its recent states. For longer-term, immutable records and more granular tracking, Cloud Audit Logs provide a comprehensive record of administrative and data access activity within BigQuery. You can route these logs to BigQuery with a log sink for long-term retention and analysis, and BigQuery's change history functions can track row-level modifications.

- ✅ Is access to raw data strictly controlled and logged? Google Cloud's IAM (Identity and Access Management) lets you define granular, role-based access, while Cloud Audit Logs create a comprehensive record of who accessed what, and when—a key principle for regulations like 21 CFR Part 11.
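To make the time travel point concrete, here is a minimal sketch that builds a `FOR SYSTEM_TIME AS OF` query and refuses timestamps outside the maximum seven-day window. The table name `my-project.clinical_data_gcp.lab_results` is hypothetical, and the sketch assumes the dataset uses the full 168-hour window; a dataset configured with a shorter window would need a smaller limit. The `run_query` helper uses the standard `google-cloud-bigquery` client and needs credentials, so it is imported lazily and the query builder runs offline.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# BigQuery lets you set a dataset's time travel window between 48 and 168 hours
# (2 to 7 days; 7 days is the default). Assumption: the full 168-hour window.
MAX_TIME_TRAVEL_HOURS = 168


def build_time_travel_query(table: str, as_of: datetime, now: Optional[datetime] = None) -> str:
    """Return SQL that reads `table` as it existed at `as_of` (a timezone-aware datetime)."""
    if "`" in table:
        raise ValueError("table name must not contain backticks")
    if as_of.tzinfo is None:
        raise ValueError("as_of must be timezone-aware")
    now = now or datetime.now(timezone.utc)
    age = now - as_of
    if age < timedelta(0):
        raise ValueError("as_of is in the future")
    if age > timedelta(hours=MAX_TIME_TRAVEL_HOURS):
        raise ValueError("as_of is outside the time travel window")
    # Format as a UTC timestamp literal, e.g. '2024-05-09 12:00:00+00'.
    ts = as_of.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S+00")
    return f"SELECT * FROM `{table}` FOR SYSTEM_TIME AS OF TIMESTAMP '{ts}'"


def run_query(sql: str) -> list:
    """Execute the query with the BigQuery client library (requires credentials)."""
    from google.cloud import bigquery  # lazy import so the builder works offline

    client = bigquery.Client()
    return list(client.query(sql).result())
```

Comparing the result of such a query with the current table state is one way to show an auditor exactly what changed in the last few days; anything older has to come from the exported audit logs.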

2. Does your data accurately reflect your real-world processes and patient populations?

An AI model is a mirror of its training data. If that data is skewed, the model's decisions will be, too. This is a major focus of the EU AI Act, which emphasizes fairness and the mitigation of bias, and a key consideration for regulators like the European Medicines Agency (EMA) in its reflection paper on AI in the medicinal product lifecycle. It also extends to keeping training and test datasets properly separated and validated, so that overfitting is detected and measured performance reflects real-world use.
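One common way to keep training and test data separated is to split at the patient level rather than the record level, so that no patient's data appears on both sides. The following is a minimal sketch of a deterministic, hash-based split; the `patient_id` field, the 20% test fraction, and the `salt` value are illustrative assumptions, not a prescribed method.

```python
import hashlib


def assign_split(patient_id: str, test_fraction: float = 0.2, salt: str = "split-v1") -> str:
    """Deterministically assign a patient to 'train' or 'test'.

    Hashing the patient ID (not individual records) keeps all of a patient's
    records on the same side of the split, preventing leakage. Recording the
    salt lets the exact split be reproduced during an audit.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    digest = hashlib.sha256(f"{salt}:{patient_id}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "test" if bucket < test_fraction else "train"


def split_records(records, test_fraction=0.2, salt="split-v1"):
    """Split a list of dicts (each with a 'patient_id' key) into train and test lists."""
    train, test = [], []
    for record in records:
        side = assign_split(record["patient_id"], test_fraction, salt)
        (test if side == "test" else train).append(record)
    return train, test
```

Because the assignment depends only on the patient ID and the salt, rerunning the pipeline produces the same split, which makes the evaluation reproducible.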

- Your Action Plan for Data Accuracy:

- Use tools like BigQuery ML or Vertex AI Workbench to perform exploratory data analysis (EDA) and compare your datasets against your internal data standards.

- Profile your datasets to identify and address gaps. For example, if clinical trial data underrepresents a specific demographic, that is a risk that must be documented and mitigated before the data is used to train a patient-facing model. Make sure your data preparation includes validation against independent datasets that reflect real-world scenarios.
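The demographic profiling step can be sketched as a comparison between each group's share of the dataset and its share of a reference population. This is a minimal illustration: the `age_band` attribute, the reference shares, and the rule of flagging a group whose share falls below half its expected share (`tolerance=0.5`) are all hypothetical choices that a real study would justify and document.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.5):
    """Flag groups that are underrepresented relative to a reference population.

    A group is flagged when its share of `records` is below
    `tolerance` x its share in `reference_shares`.
    Returns {group: (observed_share, expected_share)} for flagged groups.
    """
    counts = Counter(record.get(attribute) for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to profile")
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if observed < tolerance * expected:
            gaps[group] = (round(observed, 3), expected)
    return gaps
```

For example, a dataset with 90 adults aged 18-64, 8 patients aged 65+, and 2 under 18, checked against a reference of 60/30/10 percent, flags both the 65+ and under-18 groups as gaps to document.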

3. Is your data ready for advanced models like Gemini?

State-of-the-art models like Gemini can understand complex, multimodal data—from clinical notes and batch records to microscopy images. To unlock this potential, your data must be well-organized and of high quality.

- Your Action Plan for AI Readiness:

- Use Dataplex to create a "data readiness scorecard." Profile your data sources and set up automated data quality checks that flag issues like missing fields, inconsistent formatting, or unexpected values before the data is used for model training.
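The scorecard idea can be illustrated in plain Python with rule types similar to the non-null, regex, and set-membership checks that Dataplex auto data quality offers. This is not the Dataplex API; it is a hypothetical sketch of the logic, and the `batch_id` format `B-` plus six digits and the status values are invented for the example.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    name: str
    column: str
    check: Callable[[object], bool]  # returns True when a value passes


def not_null(value) -> bool:
    """Missing-field check: fails on None or empty string."""
    return value is not None and value != ""


def matches(pattern: str) -> Callable[[object], bool]:
    """Formatting check: value must be a string fully matching `pattern`."""
    compiled = re.compile(pattern)
    return lambda value: isinstance(value, str) and compiled.fullmatch(value) is not None


def in_set(allowed) -> Callable[[object], bool]:
    """Unexpected-value check: value must be one of `allowed`."""
    return lambda value: value in allowed


def scorecard(rows: List[dict], rules: List[Rule], threshold: float = 1.0) -> Dict[str, dict]:
    """Evaluate each rule over all rows and report its pass rate and pass/fail status."""
    report = {}
    for rule in rules:
        passed = sum(1 for row in rows if rule.check(row.get(rule.column)))
        rate = passed / len(rows) if rows else 0.0
        report[rule.name] = {"pass_rate": rate, "ok": rate >= threshold}
    return report
```

A table whose scorecard contains any `"ok": False` entry would be held back from model training until the issues are resolved.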

Infrastructure: Architecting Your Governed Data Environment

Once you've assessed your data, you can build the environment to house and govern it.

Step 1: Centralize and Secure Your Data in BigQuery

Your first step is to establish a single source of truth. Create dedicated BigQuery datasets for your GxP-relevant data (for example, clinical_data_gcp for Good Clinical Practice data and manufacturing_data_gmp for Good Manufacturing Practice data). Apply fine-grained IAM policies to these datasets so that data scientists have read-only access while data stewards have modification rights. This secure, centralized, least-privilege approach provides technical safeguards that support compliance with data privacy laws such as the U.S. Health Insurance Portability and Accountability Act (HIPAA) and the EU General Data Protection Regulation (GDPR).
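The read-only versus modify split can be expressed with BigQuery's SQL `GRANT` statements at the dataset (schema) level. The sketch below generates those statements; the project ID and group addresses are hypothetical, and mapping data scientists to `roles/bigquery.dataViewer` and stewards to `roles/bigquery.dataEditor` is one reasonable least-privilege choice, not the only one.

```python
# Hypothetical persona-to-role mapping for GxP datasets.
ROLE_BY_PERSONA = {
    "data_scientist": "roles/bigquery.dataViewer",  # read-only access
    "data_steward": "roles/bigquery.dataEditor",    # can modify table data
}


def grant_statements(project: str, datasets: list, group_by_persona: dict) -> list:
    """Build one GRANT statement per (dataset, persona) pair.

    Unknown personas raise KeyError, so a typo fails closed instead of
    silently granting an unintended role.
    """
    statements = []
    for dataset in datasets:
        for persona, group in group_by_persona.items():
            role = ROLE_BY_PERSONA[persona]
            statements.append(
                f'GRANT `{role}` ON SCHEMA `{project}.{dataset}` TO "group:{group}";'
            )
    return statements
```

Generating grants from a reviewed mapping, rather than editing permissions by hand, keeps access changes themselves reviewable; the resulting statements can be run as ordinary BigQuery query jobs.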

Step 2: Catalog and Govern with Dataplex

Point Dataplex at your BigQuery datasets. This will automatically catalog your tables and allow you to enrich them with business metadata. Your team can now tag columns with clear definitions like "Batch Record ID" or "Patient Cohort," creating a common language across the organization. This is also where you implement the automated data quality rules from your readiness scorecard, ensuring your data remains clean over time.
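Tagging only creates a common language if it is complete. A simple governance gate is to measure how many columns in GxP tables carry a business-glossary term. The sketch below assumes the schemas and tags have already been exported into plain dictionaries; the table and column names are hypothetical, and this is not a Dataplex API call.

```python
def glossary_coverage(schemas: dict, glossary_terms: dict):
    """Report which columns still lack a business-glossary term.

    schemas: {table: [column, ...]}
    glossary_terms: {(table, column): term}, e.g. {("deviations", "batch_id"): "Batch Record ID"}
    Returns (coverage_ratio, sorted list of untagged (table, column) pairs).
    """
    all_columns = [(table, column) for table, columns in schemas.items() for column in columns]
    untagged = sorted(pair for pair in all_columns if not glossary_terms.get(pair))
    coverage = 1 - len(untagged) / len(all_columns) if all_columns else 1.0
    return coverage, untagged
```

A team might, for instance, require full coverage before a table is approved as a source for model training.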

Step 3: Visualize the Audit Trail with Data Lineage

The lineage graph in Dataplex is your compliance superpower. Once the Data Lineage API is enabled, Dataplex automatically records lineage for supported services, such as BigQuery jobs, and maps how your data moves between tables. This visual audit trail is invaluable for showing regulators, whether the FDA or authorities in the EU, the exact, traceable path your data took from its raw source to its use in an AI model.
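Answering the auditor's question "where did this model's data come from?" amounts to walking the lineage graph backwards. Here is a minimal sketch that assumes the lineage has already been exported as `(source, target)` pairs; the asset names are hypothetical.

```python
from collections import defaultdict, deque


def upstream_sources(edges, target):
    """Walk lineage edges backwards from `target`.

    edges: iterable of (source, target) pairs.
    Returns (all_upstream, raw_sources), where raw_sources are upstream
    assets with no parents of their own (the original raw inputs).
    """
    parents = defaultdict(set)
    for src, dst in edges:
        parents[dst].add(src)
    seen, queue = set(), deque([target])
    while queue:  # breadth-first search; `seen` also guards against cycles
        node = queue.popleft()
        for parent in parents[node]:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    raw = {node for node in seen if not parents[node]}
    return seen, raw
```

For a model trained on a curated deviation table, the raw sources returned are the systems whose records must be qualified and available for inspection.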

Applications: Building a Trustworthy Agent

With a governed data foundation, you can now build intelligent agents with confidence. Let's consider a practical use case: a Deviation Triage Agent for a GMP facility.

- Ground your agent in truth: Connect Vertex AI Agent Builder directly to your governed BigQuery dataset of historical deviation reports. This grounds the agent's answers in your controlled, high-quality information rather than in the model's general knowledge alone.

- Leverage Gemini's reasoning: Use a Gemini model as the agent's reasoning engine. It can analyze the unstructured text of new deviation reports, compare them to historical patterns, and suggest a classification and severity level.

- Ensure verifiable outputs: The agent's response should always be traceable. When it suggests a classification, it must also provide a direct link back to the source deviation report in your database. This "citation" is critical for human oversight and validation.
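The citation requirement can be enforced in code before a suggestion ever reaches a reviewer. This hypothetical sketch checks that a triage suggestion uses an allowed severity level and cites at least one report ID that actually exists in the governed dataset. The `TriageSuggestion` structure, the minor/major/critical scale, and the report ID format are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical severity scale; use your site's approved deviation classification.
ALLOWED_SEVERITIES = {"minor", "major", "critical"}


@dataclass
class TriageSuggestion:
    classification: str
    severity: str
    cited_report_ids: List[str] = field(default_factory=list)


def validate_suggestion(s: TriageSuggestion, known_report_ids: Set[str]) -> List[str]:
    """Return a list of problems; an empty list means the suggestion can go to human review."""
    problems = []
    if s.severity not in ALLOWED_SEVERITIES:
        problems.append(f"unknown severity: {s.severity!r}")
    if not s.cited_report_ids:
        problems.append("no citations: suggestion is not traceable")
    missing = [r for r in s.cited_report_ids if r not in known_report_ids]
    if missing:
        problems.append(f"citations not found in governed dataset: {missing}")
    return problems
```

Rejecting uncited or unverifiable outputs up front means human reviewers only spend time on suggestions they can trace back to a source record.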

Models: Maintaining Control with an AI Asset Registry

After deployment, every change to a model, agent, AI system, or process must be managed through a formal change control process.

Vertex AI Model Registry acts as your central registry for these AI assets. The process is simple and powerful:

- When your team develops an improved version of the triage agent, you register it in the Model Registry.

- Registering it creates a new, distinct version of the model. Attach the validation results, the dataset it was tested on, and the person who approved it to that version (for example, as model evaluations, labels, or metadata) so they stay linked to it.

- You now have a complete, auditable change control record, aligned with the structured lifecycle management expected in GxP environments.
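To make such a change control record tamper-evident, one generic technique is a hash chain, in which each entry stores the hash of the entry before it. This is not a Model Registry feature; it is a hypothetical, self-contained sketch of the idea, and the version names, dataset, and metric values are invented. An entry's hash could, for example, be recorded in the model version's description.

```python
import hashlib
import json
from datetime import datetime, timezone


def _entry_hash(entry: dict) -> str:
    """Hash the entry's canonical JSON form (sorted keys for determinism)."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


def append_change(log: list, model_version: str, dataset: str, validation: dict, approver: str) -> dict:
    """Append an approved change; each entry links to the hash of the previous one."""
    if not approver:
        raise ValueError("a change cannot be recorded without an approver")
    entry = {
        "model_version": model_version,
        "dataset": dataset,
        "validation": validation,
        "approver": approver,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else None,
    }
    entry["hash"] = _entry_hash(entry)  # computed before the 'hash' key exists
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Return False if any entry was edited or the chain was reordered."""
    prev = None
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev or _entry_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

Editing any field of an earlier entry, such as the approver, changes its hash and breaks verification for the whole chain from that point on.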

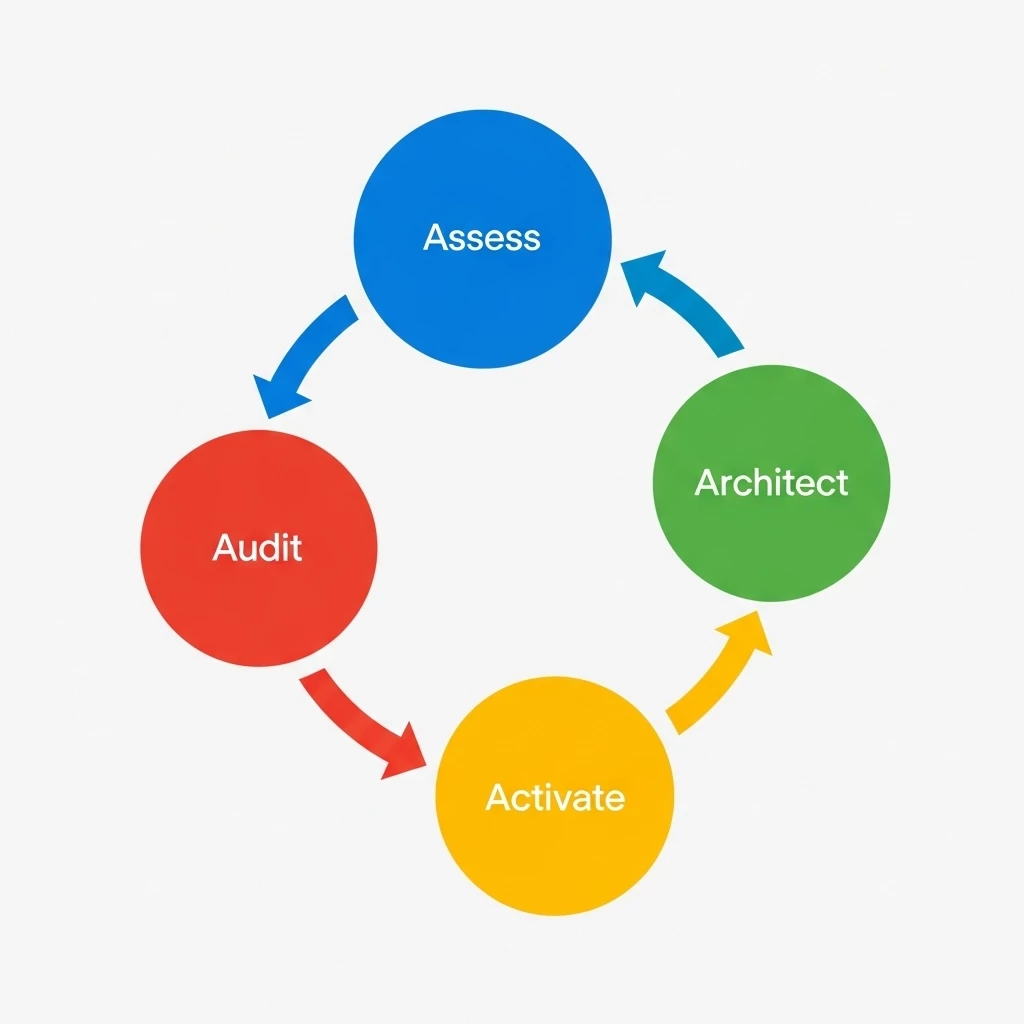

Your Action Plan for Compliant AI

Moving from possibility to practice is about taking clear, deliberate steps.

- Assess your data using the three critical questions to identify and mitigate risks early.

- Architect your governed data foundation with BigQuery and Dataplex.

- Activate your data with a grounded, traceable agent built on Gemini and Vertex AI.

- Audit and manage your AI assets through their entire lifecycle with the Model Registry.

By following this practical approach, you can build an AI ecosystem that is not only powerful and innovative, but also compliant, auditable, and fundamentally trustworthy.