Blog Authors:

Vesselin Tzvetkov, Principal Security Engineer

Anthony Lazzaro, Strategic Cloud Engineer

Canburak Tumer, Strategic Cloud Engineer

Security Operations Centers (SOCs) are critical for monitoring and responding to threats, but in manufacturing environments they face unique hurdles. Manufacturing relies heavily on Operational Technology (OT) systems that use diverse, often proprietary or binary protocols, such as the S7 protocol and Modbus TCP, to control Programmable Logic Controllers (PLCs) and other industrial equipment. Traditional IT-focused SOCs often lack visibility into this OT world. As IT, OT, Internet of Things (IoT), and Industrial IoT (IIoT) systems become increasingly interconnected, as in connected vehicles or smart manufacturing lines, the attack surface expands, making comprehensive security monitoring essential. However, bridging the historical divide between IT and OT security monitoring, especially when dealing with non-standard data formats, remains a significant challenge.

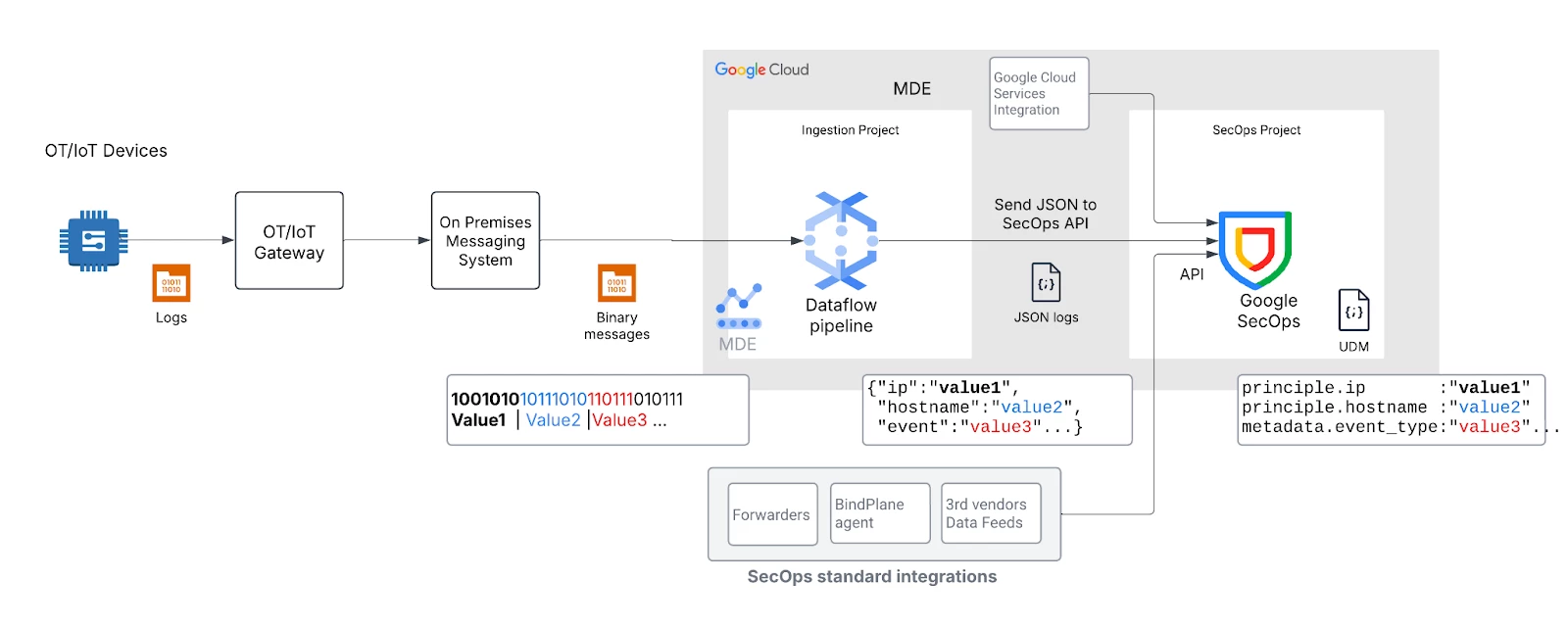

Many manufacturing enterprises are turning to Google Cloud Security Operations (SecOps) to build a unified SOC. This blog post outlines a practical approach we've implemented to ingest and use non-standard, binary manufacturing logs within Google SecOps. The key is leveraging the power and flexibility of Google Cloud Dataflow as a real-time data transformation pipeline.

Step 1: Define Security Use Cases Collaboratively

Effective monitoring starts with clear goals. Begin by selecting 2-3 high-impact security use cases, for example based on CISA's guidance "Primary Mitigations to Reduce Cyber Threats to Operational Technology". Close collaboration from the outset between your SOC team (threat hunters, analysts), security architects and engineers, and manufacturing/automation/OT engineers is critical. Each team brings different OT and security knowledge, and all of them are needed to produce high-quality use cases.

- Conduct Threat Modeling: Jointly identify risks and potential threat scenarios applicable to your manufacturing environment.

- Prioritize Threats to Critical Assets: Focus your initial efforts on the threat scenarios that lead to the most serious risks and affect the most critical assets, such as safety systems, production-critical assets, or transversal infrastructure systems (e.g., access control and authentication systems, OT hypervisors).

- Identify Available Data & Expertise: Consider other prioritization factors, such as log data availability and whether OT engineers are available to provide context on the specific protocols and baseline system behavior. Log sources related to authentication, access, connections, and configuration changes typically have high security value.

- Define Use Cases: Clearly document the what and why of monitoring – vendor-agnostic descriptions of suspicious activities or conditions that require security attention (e.g., unauthorized PLC program changes, unusual communication patterns).

- Engage Equipment Vendors: Once use cases are defined, work with experts or equipment vendors to ensure the necessary logs can be generated and accessed. To secure vendor support, engage vendors during the selection and purchasing process. This early engagement is necessary because collecting system-level security logs may require a contractual agreement with the vendor and may even involve product changes or other additional effort on their part.

Step 2: Standardize Messages with the Unified Data Model (UDM)

Google SecOps supports hundreds of log sources and many response integrations. Raw logs are ingested in JSON or text format and are translated into Unified Data Model (UDM) events during Google SecOps parsing. UDM provides a fast, vendor-agnostic way to handle events in rules and searches.
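To make the vendor-agnostic idea concrete, the Python below is a minimal sketch of what normalization to UDM achieves: two hypothetical vendors report the same connection with different JSON keys, and both end up in the same UDM-style fields (`metadata.event_type`, `principal.ip`, `target.ip`, `target.port`). It is an illustration only, assuming made-up vendor names and key layouts; real Google SecOps parsers are written in SecOps's own parser configuration syntax, not Python, and produce full UDM events rather than a plain dict.

```python
from typing import Any, Dict


def to_udm_like(vendor: str, raw: Dict[str, Any]) -> Dict[str, Any]:
    """Map vendor-specific JSON onto a shared, UDM-style nested layout.

    Illustrative only: vendor names and input keys are hypothetical, and the
    output is a small subset of UDM fields expressed as a plain dict.
    """
    if vendor == "vendor_a":
        # Hypothetical flat layout.
        src, dst, port = raw["src_ip"], raw["dst_ip"], raw["dst_port"]
    elif vendor == "vendor_b":
        # Hypothetical nested layout carrying the same information.
        src = raw["source"]["address"]
        dst = raw["destination"]["address"]
        port = raw["destination"]["port"]
    else:
        raise ValueError(f"no mapping defined for vendor {vendor!r}")
    return {
        "metadata": {"event_type": "NETWORK_CONNECTION", "vendor_name": vendor},
        # UDM stores IP addresses as repeated (list) fields.
        "principal": {"ip": [src]},
        "target": {"ip": [dst], "port": int(port)},
    }


a = to_udm_like("vendor_a", {"src_ip": "10.0.0.5", "dst_ip": "10.0.1.20", "dst_port": 502})
b = to_udm_like(
    "vendor_b",
    {"source": {"address": "10.0.0.5"}, "destination": {"address": "10.0.1.20", "port": "502"}},
)
# A rule or search on target.port == 502 (Modbus TCP) now matches both vendors.
assert a["target"] == b["target"] and a["principal"] == b["principal"]
```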

If the messages to be ingested into Google SecOps are in a binary format, they must first be translated into JSON or text. Dataflow can bridge this gap by transforming the binary data before ingestion into Google SecOps. Running the pipeline on Dataflow allows decoding and transformation to happen at massive scale, keeping up with the full volume of data from your OT systems. In the pipeline, you define how the relevant information in the binary format maps to the JSON message. This mapping acts as the blueprint for your data transformation, ensuring that Google SecOps can ingest and correlate data regardless of its original source. In the Google SecOps parser, you then define how the JSON data maps to the UDM structure, and you can reuse the same UDM fields across multiple vendors.
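As a sketch of such a binary-to-JSON mapping, the Python below decodes one Modbus TCP frame with the standard `struct` module. The Modbus TCP header layout (big-endian transaction ID, protocol ID, and length, followed by a one-byte unit ID) and the function codes shown come from the public Modbus specification; the JSON key names, the selection of fields, and passing the source and destination IPs in separately (as a capture point or gateway might supply them) are assumptions for illustration. Real OT logs may use vendor-specific binary layouts that need vendor documentation or OT engineers to decode.

```python
import json
import struct

# Modbus TCP MBAP header: transaction ID, protocol ID and length (big-endian
# uint16 each), then the unit ID (uint8). 7 bytes in total.
MBAP = struct.Struct(">HHHB")

# Small, illustrative subset of Modbus function codes.
FUNCTION_NAMES = {
    0x03: "read_holding_registers",
    0x06: "write_single_register",
    0x10: "write_multiple_registers",
}


def decode_modbus_tcp(frame: bytes, src_ip: str, dst_ip: str) -> str:
    """Decode one Modbus TCP frame into a JSON log line (hypothetical schema)."""
    if len(frame) < MBAP.size + 1:
        raise ValueError("frame too short for MBAP header and function code")
    transaction_id, protocol_id, length, unit_id = MBAP.unpack_from(frame, 0)
    if protocol_id != 0:
        raise ValueError("protocol ID must be 0 for Modbus")
    # The length field counts the unit ID plus the PDU, i.e. everything after byte 6.
    if length != len(frame) - 6:
        raise ValueError("length field does not match frame size")
    function_code = frame[MBAP.size]
    record = {
        "src_ip": src_ip,
        "dst_ip": dst_ip,
        "transaction_id": transaction_id,
        "unit_id": unit_id,
        "function_code": function_code,
        "function": FUNCTION_NAMES.get(function_code, "other"),
    }
    # Write Single Register carries a register address and a value
    # (a normal server response echoes both).
    if function_code == 0x06 and len(frame) >= MBAP.size + 5:
        address, value = struct.unpack_from(">HH", frame, MBAP.size + 1)
        record["register_address"] = address
        record["register_value"] = value
    return json.dumps(record, sort_keys=True)


# Write value 0x00FF to register 0x0010 on unit 1.
frame = bytes.fromhex("0001000000060106001000ff")
print(decode_modbus_tcp(frame, "10.0.0.5", "10.0.1.20"))
```

Emitting one JSON object per frame keeps the pipeline output line-oriented, which suits a text-based SecOps parser.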

Step 3: Build the Bridge with Dataflow

The main service in this approach is Dataflow, a Google Cloud service that provides unified stream and batch data processing at scale. A real-time pipeline is first defined with one of the Apache Beam SDKs and then executed on Dataflow. Google SecOps provides an API for ingesting log events, and the Apache Beam pipeline sends the transformed messages to Google SecOps through this API. On the input side, Dataflow I/O connectors let the pipeline read from the messaging systems that interlink your IT, OT, IoT, or IIoT infrastructure; for example, there is ready support for Solace, Kafka, and Pub/Sub.
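The pipeline described above can be sketched with the Apache Beam Python SDK as follows. This is a minimal sketch under several assumptions: OT messages arrive on a Pub/Sub subscription, a protocol-specific decoder function (bytes in, JSON line out) is passed in, and the request body fields are modeled on the Chronicle Ingestion API's batch upload of unstructured log entries. The endpoint, the OAuth scope in `INGESTION_SCOPES`, and the log type are assumptions to check against the current Google SecOps API reference and your instance configuration. `ReadFromPubSub`, `BatchElements`, `Map`, and google-auth's `AuthorizedSession` are existing library APIs; Beam and google-auth are imported lazily so the body builder can be used on its own.

```python
from typing import Any, Callable, Dict, List

# OAuth scope for the ingestion call: an assumption, check the API reference
# for the endpoint you use.
INGESTION_SCOPES = ["https://www.googleapis.com/auth/cloud-platform"]


def build_ingestion_body(log_lines: List[str], customer_id: str, log_type: str) -> Dict[str, Any]:
    """Wrap decoded log lines in one batch request body.

    Field names are modeled on the Chronicle Ingestion API's unstructured
    log entries request; verify them against the current documentation.
    """
    if not log_lines:
        raise ValueError("refusing to send an empty batch")
    return {
        "customer_id": customer_id,
        "log_type": log_type,
        "entries": [{"log_text": line} for line in log_lines],
    }


def post_batch(body: Dict[str, Any], endpoint: str) -> int:
    """POST one batch using Application Default Credentials (google-auth)."""
    import google.auth
    from google.auth.transport.requests import AuthorizedSession

    # A new session per batch keeps the sketch simple; a DoFn with setup()
    # could reuse one session per worker instead.
    credentials, _ = google.auth.default(scopes=INGESTION_SCOPES)
    response = AuthorizedSession(credentials).post(endpoint, json=body, timeout=30)
    response.raise_for_status()
    return response.status_code


def run(subscription: str, endpoint: str, customer_id: str, log_type: str,
        decode: Callable[[bytes], str]) -> None:
    """Streaming pipeline: Pub/Sub -> decode -> batch -> Google SecOps."""
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    options = PipelineOptions(streaming=True)  # add Dataflow runner flags as needed
    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            | "ReadOT" >> beam.io.ReadFromPubSub(subscription=subscription)
            | "Decode" >> beam.Map(decode)
            | "Batch" >> beam.BatchElements(min_batch_size=10, max_batch_size=500)
            | "ToBody" >> beam.Map(build_ingestion_body, customer_id=customer_id, log_type=log_type)
            | "Send" >> beam.Map(post_batch, endpoint=endpoint)
        )
```

To run it on Dataflow, supply the usual runner options (for example `--runner=DataflowRunner`, `--project`, `--region`, `--temp_location`) through `PipelineOptions`. Reading from Kafka or Solace instead would change the first step, and possibly the shape of the elements handed to the decoder.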

Google SecOps provides plenty of options for collecting non-binary logs, such as forwarders, the BindPlane agent, the Ingestion API, Google Cloud integration, and data feeds. For OT/IoT-focused end-to-end solutions that deliver scalable, seamless connectivity between the factory floor and Google Cloud, customers can use Manufacturing Data Engine (MDE) and partner solutions.

Step 4: Advanced Use Cases

Once the initial pipeline is established, you can iteratively expand your coverage, enabling:

- Richer Context: Ingest additional OT data sources by replicating the Dataflow pattern.

- IT/OT Correlation: Dive deeper by correlating OT events (e.g., unusual machine activity) with IT logs already in SecOps (e.g., employee badge access times, network activity). This unified view enables more sophisticated threat detection scenarios that span both domains and helps reduce the number of false positive alerts.

- Updated Vendor Requirements: Insights gained along the way may highlight specific logging capabilities to require from manufacturing equipment vendors in the future.
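The IT/OT correlation idea can be illustrated with a small, self-contained Python sketch: PLC program changes are checked against badge-in events, and only changes with no badge-in by the same person in the preceding hours are flagged. In Google SecOps this logic would normally live in a detection rule over UDM events; the Python here only shows the reasoning. The event classes, the 8-hour window, and the assumption that an engineer's account name matches the badge holder's identity are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass(frozen=True)
class PlcChange:
    """Hypothetical OT event: a PLC program change attributed to an engineer."""
    plc_id: str
    engineer: str
    at: datetime


@dataclass(frozen=True)
class BadgeIn:
    """Hypothetical IT event: a physical badge-in at the plant."""
    person: str
    at: datetime


def unexplained_changes(
    changes: List[PlcChange],
    badges: List[BadgeIn],
    window: timedelta = timedelta(hours=8),
) -> List[PlcChange]:
    """Return PLC changes with no badge-in by the same person in the preceding window.

    Changes explained by on-site presence are suppressed, which is one way
    IT context can reduce false positives on legitimate maintenance work.
    """
    flagged = []
    for change in changes:
        on_site = any(
            badge.person == change.engineer
            and change.at - window <= badge.at <= change.at
            for badge in badges
        )
        if not on_site:
            flagged.append(change)
    return flagged
```

The nested scan is fine for a sketch; a production rule engine would index events by identity and time rather than compare every pair.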

By leveraging Google Cloud Dataflow alongside Google SecOps, manufacturers can overcome the challenge of integrating non-standard OT data into their security monitoring strategy. This approach enables the streaming and normalization of critical manufacturing logs into UDM, facilitating real-time alerting and analysis within Google SecOps. The result is a convergence of IT and OT security operations, leading to significantly improved visibility, threat detection capabilities, and overall security posture across the entire manufacturing environment.