Today we are going to revisit a use case using data tables in Google Security Operations (SecOps). A few months back, David French demonstrated how to write a rule that added a Google Workspace user and attributed resources to a data table that could then be used for other hunts or detections.

At the time, David noted that the write_row capability was only available for use with rules. With the latest update to data tables, write_row can be used in searches as well! With this update, I felt like it was a good time to revisit this capability and discuss the concept of key columns as it pertains to data tables.

Using Search with write_row

Before we apply write_row, let’s introduce the search we will use today. In our search, we want to identify the process launch events in our tenant that contain a file tag of persistence. These file tags are based on the file hashes in the event stream and are enriched using tags that are available in VirusTotal.

The search is using regular expressions to extract the file name of the executable from the target.process.file.full_path, and we are aggregating (grouping) our results by the combination of the hostname, sha256 file hash and the file name. Finally we are calculating the time when this combination was last seen by using the max aggregation function and the timestamp.get_timestamp function.

metadata.event_type = "PROCESS_LAUNCH"

principal.hostname = $hostname

target.process.file.sha256 != ""

target.process.file.sha256 = $sha256

re.regex(target.process.file.full_path, `\.exe$`)

re.capture(target.process.file.full_path, `([^\\\\/]+\.exe)$`) = $file

target.process.file.tags = "persistence"

match:

$hostname, $sha256, $file

outcome:

$last_seen = timestamp.get_timestamp(max(metadata.event_timestamp.seconds))

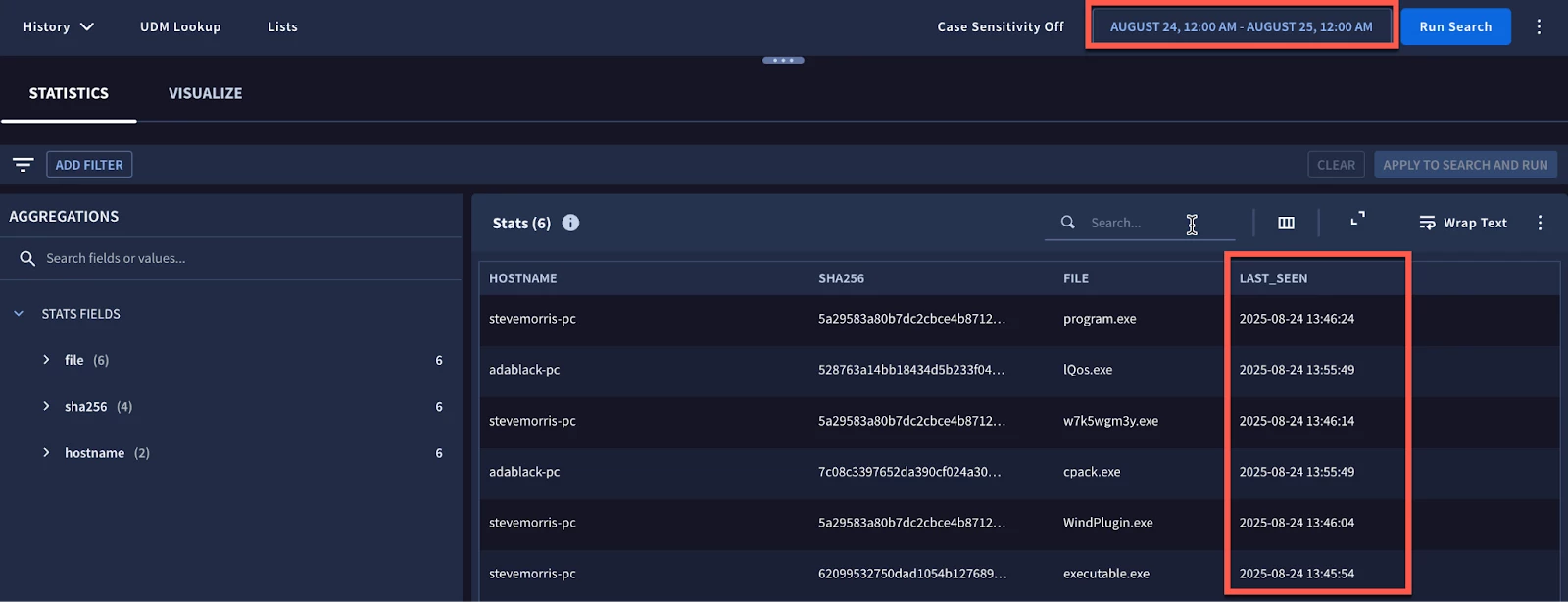

Here are the results based on the search run on August 21, 2025. In this example we can see six events that have a unique combination of hostname, file hash and file name.

Now, we are going to take the search results and write them to a data table. The concept of write_row is very straightforward and is handled the same way in a search as in a rule. It is the last section in the search and contains the name of the data table and a mapping of the data table fields to the results in the search.

metadata.event_type = "PROCESS_LAUNCH"

principal.hostname = $hostname

target.process.file.sha256 != ""

target.process.file.sha256 = $sha256

re.regex(target.process.file.full_path, `\.exe$`)

re.capture(target.process.file.full_path, `([^\\\\/]+\.exe)$`) = $file

target.process.file.tags = "persistence"

match:

$hostname, $sha256, $file

outcome:

$last_seen = timestamp.get_timestamp(max(metadata.event_timestamp.seconds))

export:

%persistence_host_hash.write_row(

hostname : $hostname,

sha256: $sha256,

file_name: $file,

last_seen: $last_seen

)

Notice that nothing else has changed in the search except the addition of this export section.

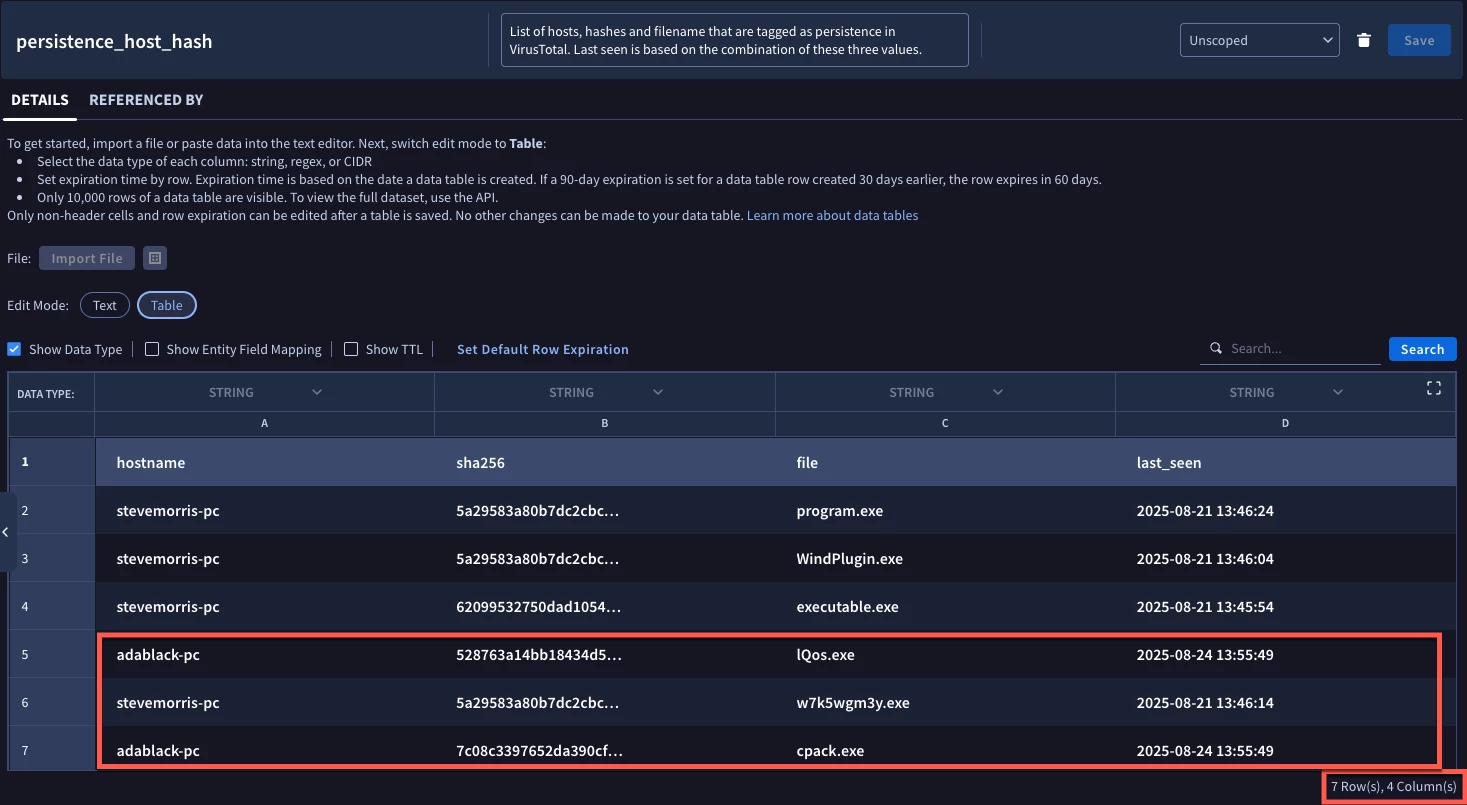

When we run the search, the data table is created and the values from the search are written as you can see above. This behavior mirrors what David discussed in his rule example. So far, so good.

A Potential Pitfall?

I’m going to introduce a wrinkle. It’s a few days later and I want to run the same search for a different time range. This time the search is run to identify events on August 24, 2025.

For this time range, we have a small result set of six events and because we have the write_row functionality at the end of the search, we are expecting that data to get appended in the data table.

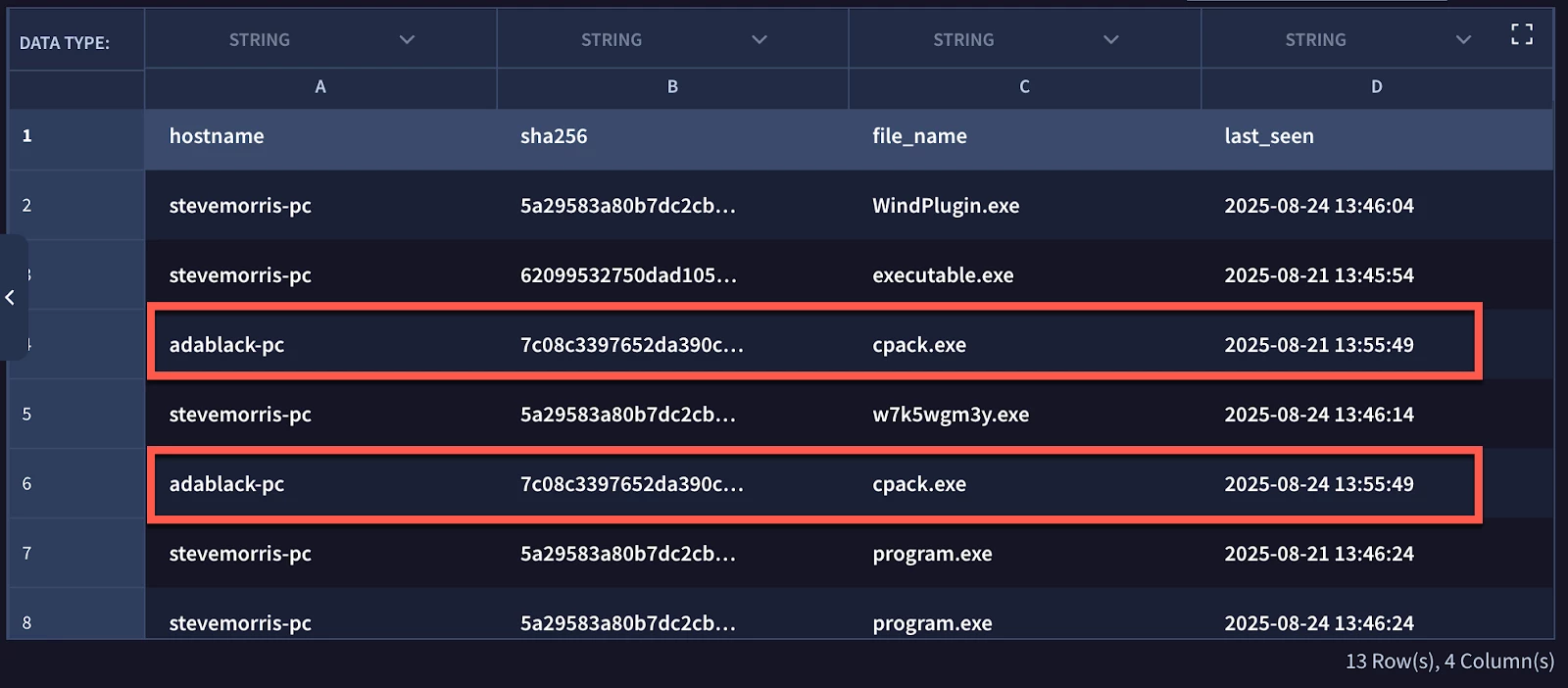

When we open the data table we can see that adablack-pc has the same hash and filename displayed twice with two different last_seen dates. This might be totally acceptable for the use case or it might be flat wrong, it depends on how you expected the data table to behave.

Customizing the Key Columns in a Data Table

When a data table is created, key column(s) that make up the primary key are defined. In the initial search that created a data table and when data tables are created within the UI, the key columns will be all columns. This means all additional write_row functions will write out new rows unless all fields in all the columns are identical to an existing entry in the data table.

In some cases, that might be acceptable, but for this use case it isn’t quite what we had in mind. Here we want a single entry for each combination of hostname, file hash and file name and then the last seen value is updated as appropriate. To do this, we need to create the data table ahead of time so that the search can take advantage of it. Wait, did I say search? I meant both search and rule because they function in the same manner.

Here is the curl command that can be used to create a data table where the key columns are not all of the columns. Make sure you grab your GCP project number and customer ID. This data table is named persistence_host_hash and the description is provided as well.

curl -X POST 'https://us-chronicle.googleapis.com/v1alpha/projects/<gcp project number>/locations/us/instances/<customer id>/dataTables?dataTableId=persistence_host_hash' \

-H "Authorization: Bearer $(gcloud auth print-access-token)" \

-H "Content-Type: application/json" \

-d '

{

"name": "projects/<gcp project number>/locations/us/instances/<customer id>/dataTables/persistence_host_hash",

"description": "List of hosts, hashes and filename that are tagged as persistence in VirusTotal. Last seen is based on the combination of these three values.",

"column_info": [

{

"column_index": 0,

"original_column": "hostname",

"key_column": true,

"column_type": 1

},

{

"column_index": 1,

"original_column": "sha256",

"key_column": true,

"column_type": 1

},

{

"column_index": 2,

"original_column": "file",

"key_column": true,

"column_type": 1

},

{

"column_index": 3,

"original_column": "last_seen",

"column_type": 1

}

]

}

'

Notice in the curl command that each column is defined with an index starting at 0, a name and type. Type defines if the column is a string, regular expression, CIDR or number. In this case, all four fields are strings. The one difference here is that the first three columns are also defined with a key_column value of true where the final column, last_seen is not.

If we formatted our API call correctly, our results look like this:

{

"name": "projects/<gcp project number>/locations/us/instances/<customer id>/dataTables/persistence_host_hash",

"displayName": "persistence_host_hash",

"description": "List of hosts, hashes and filename that are tagged as persistence in VirusTotal. Last seen is based on the combination of these three values.",

"createTime": "2025-08-25T16:33:19.423416869Z",

"updateTime": "1970-01-01T00:00:00Z",

"columnInfo": [

{

"originalColumn": "hostname",

"keyColumn": true,

"columnType": "STRING"

},

{

"columnIndex": 1,

"originalColumn": "sha256",

"keyColumn": true,

"columnType": "STRING"

},

{

"columnIndex": 2,

"originalColumn": "file",

"keyColumn": true,

"columnType": "STRING"

},

{

"columnIndex": 3,

"originalColumn": "last_seen",

"columnType": "STRING"

}

],

"dataTableUuid": "432fd5b76bc85aa8a7b7a6a4f0de32ac"

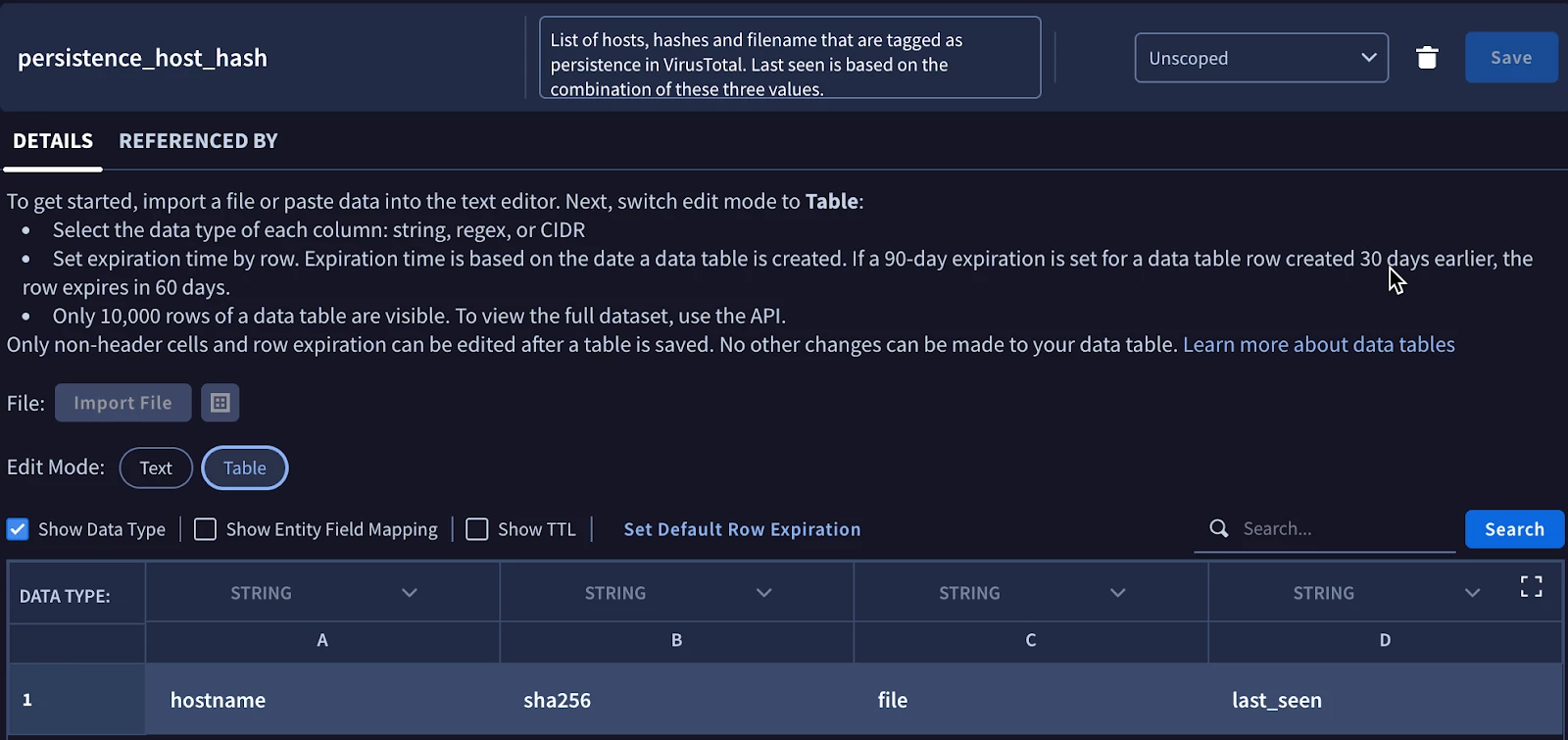

When we open the UI, we can validate that the data table appears as we would expect it. Notice the data types are all string, the table is empty and the description is populated.

Putting Your New Data Table To Work

Let’s put the newly created data table to work. We are going to run the first search for August 21, 2025 again which will result in six rows created.

I could now run the search for August 24, 2025 and show you the data table, but I bet someone might think that I just ran the second search on an empty data table and I want to prove that isn’t the case. Instead, we are going to make a slight modification to the search and only search for events on the host named adablack-pc. Based on the previous search we ran on August 24, 2025, this should result in two events.

metadata.event_type = "PROCESS_LAUNCH"

principal.hostname = "adablack-pc"

principal.hostname = $hostname

target.process.file.sha256 != ""

target.process.file.sha256 = $sha256

re.regex(target.process.file.full_path, `\.exe$`)

re.capture(target.process.file.full_path, `([^\\\\/]+\.exe)$`) = $file

target.process.file.tags = "persistence"

match:

$hostname, $sha256, $file

outcome:

$last_seen = timestamp.get_timestamp(max(metadata.event_timestamp.seconds))

export:

%persistence_host_hash.write_row(

hostname : $hostname,

sha256: $sha256,

file: $file,

last_seen: $last_seen

)

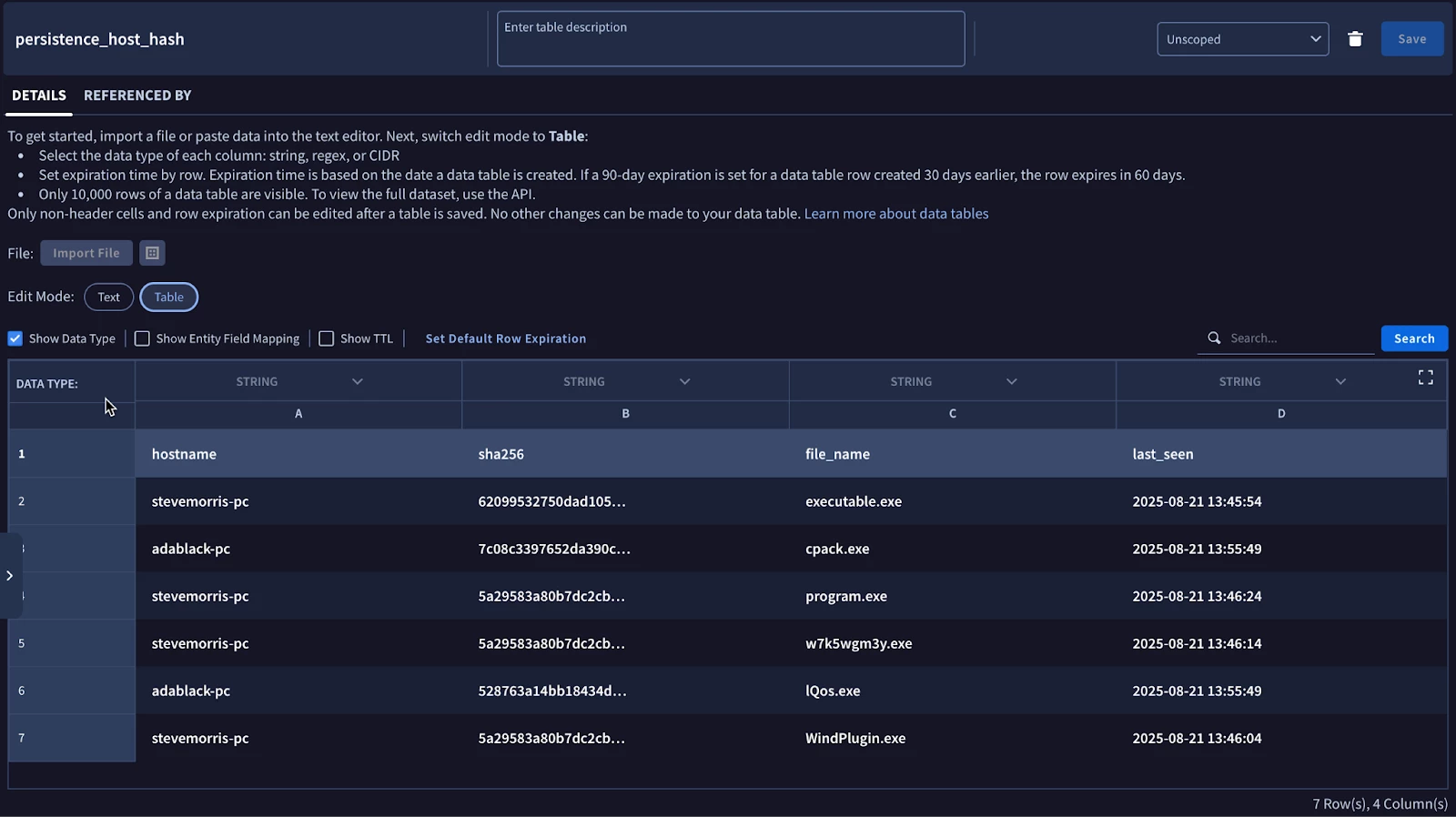

When we run this search, we can confirm that only two rows returned on that combination of hostname, file hash and file name and that those updated the last login for only that user.

Now, let’s take a look at the data table. Notice that the data table has seven rows in it. One is the row header. The other six are events. Four of these are for the hostname stevemorris-pc, which all have a last_seen value of August 21, the last time a search ran that met criteria that fit the hostname of stevemorris-pc.

The two rows for the hostname adablack-pc have the same file hash and name as what was in the August 21 results and because those three columns are the key, the last_seen column has been updated to August 24 for those two rows in the data table.

With that, here are a couple of tips to keep in mind:

- write_row can be used for BOTH search and rules

- The export section where write_row is called is the last section in the search or rule

- Key columns are defined at table creation

- Currently the best method to define the key columns is through the API. Data tables created at search or rule run time or via the UI will assume all columns are key

- To map columns using write_row, specify the data table column name followed by the match or outcome variables in the search

The write_row function, along with the ability to define key columns, expands the use cases that data tables can be used for, whether that is through a rule or a search. I hope this highlights how write_row can be used to add additional findings to data tables which can then be used for threat hunting, detection engineering and more in Google SecOps!