Co-Author: Omri Shamir

A sudden flood of logs isn't just noise; it's a warning. It could be the first sign of a brute-force attack, a misconfigured application spewing errors, or a system about to fail. The challenge is spotting the surge before it becomes a crisis, especially when it comes from a single source. In this article, we'll build a practical detection in Google Security Operations that automatically flags any log type whose daily volume spikes more than 50% above its normal daily average.

Our approach to building this alert is a simple but powerful three-step process.

First, we will configure a native dashboard widget in Google Security Operations to act as our "detector." This widget will perform the core analysis, continuously comparing each log type's 24-hour volume against its 7-day daily average and outputting a list of any sources that breach our 50% threshold. Second, we will create a Google Security Operations playbook to act as our "alerter." This playbook will be configured to read the output from the widget and automatically take action to generate a custom alert.

Finally, we'll create a custom connector in Google Security Operations to act as our "scheduler," automatically triggering the playbook to run this check once every day.

Stage 1 - Building the query

Let's start by building the engine for our detector: the query.

Our goal is to create a native dashboard widget that continuously scans our log sources and outputs a list of any that are experiencing a significant spike.

This query needs to do four things for each log type:

- Filter for the right logs.

- Calculate the average daily ingestion over the last 7 days.

- Calculate the total ingestion over the last 24 hours.

- Compute the ratio between them.

Filtering and Grouping

First, we need to define our data source. We are interested in logs coming through the Ingestion API into Google Security Operations. We also want to exclude empty log types and noisy or irrelevant ones, like FORWARDER_HEARTBEAT. Finally, we tell the query to group all calculations by log type.

ingestion.log_type != ""
ingestion.log_type != "FORWARDER_HEARTBEAT"
ingestion.component = "Ingestion API"
$log_type = ingestion.log_type

match:
  $log_type
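To make the filter and grouping logic concrete outside of Google SecOps, here is a minimal Python sketch. The records are hypothetical, simplified stand-ins for ingestion metrics (the real fields are ingestion.log_type, ingestion.component, and ingestion.log_volume), and the "Forwarder" component value is made up for illustration. It only shows what the three filter lines and the match section do, not how the platform evaluates them.

```python
from collections import defaultdict

# Hypothetical, simplified ingestion-metric records (not real SecOps output).
records = [
    {"log_type": "WINEVTLOG", "component": "Ingestion API", "log_volume": 500},
    {"log_type": "FORWARDER_HEARTBEAT", "component": "Ingestion API", "log_volume": 10},
    {"log_type": "", "component": "Ingestion API", "log_volume": 7},
    {"log_type": "WINEVTLOG", "component": "Ingestion API", "log_volume": 300},
    {"log_type": "PAN_FIREWALL", "component": "Forwarder", "log_volume": 900},
]


def keep(record):
    """Mirror the three filter statements of the query."""
    return (
        record["log_type"] != ""
        and record["log_type"] != "FORWARDER_HEARTBEAT"
        and record["component"] == "Ingestion API"
    )


def group_by_log_type(rows):
    """Mirror `match: $log_type` by bucketing surviving records per log type."""
    groups = defaultdict(list)
    for row in rows:
        if keep(row):
            groups[row["log_type"]].append(row)
    return dict(groups)


groups = group_by_log_type(records)
print(sorted(groups))
```

Only the two WINEVTLOG records survive: the empty and heartbeat log types are filtered out, and the last record comes from a different component.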

Calculating the 7 Day Average

Next, in the outcome block, we'll calculate our 7-day baseline. We sum all ingestion.log_volume values over the widget's time range, convert the total from bytes to gigabytes (by dividing by 1024 * 1024 * 1024), and then divide by 7 to get our daily average. Because the query divides by 7, the widget's time range must be set to the last 7 days. We round the result to two decimal places for a clean output.

$daily_average = math.round(sum(ingestion.log_volume) / (1024 * 1024 * 1024) / 7, 2)
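The same arithmetic in Python, as a minimal sketch with made-up byte counts. Dividing by 1024 * 1024 * 1024 strictly yields gibibytes (GiB). Python's round() rounds exact ties to even, which may differ from the query's math.round in edge cases.

```python
BYTES_PER_GIB = 1024 * 1024 * 1024  # same divisor as the query


def daily_average_gib(volumes_bytes, days=7):
    """Sum byte volumes over the window, convert to GiB, average per day, round to 2 places."""
    return round(sum(volumes_bytes) / BYTES_PER_GIB / days, 2)


# Hypothetical: 7 GiB ingested in total over the 7-day window.
print(daily_average_gib([3 * BYTES_PER_GIB, 4 * BYTES_PER_GIB]))  # 1.0
```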

Calculating the Last Day's Ingestion

Now we need to calculate the volume for only the last 24 hours. Since we can't run a second query on the output of the first one (the multi-stage query feature will allow this), we'll use a clever workaround: a conditional if statement inside the sum function.

We first need to calculate the timestamp for 24 hours ago: (timestamp.current_seconds() - 86400). Then, the if statement checks whether an event's ingestion.start_time is after that timestamp. If it is, its log_volume is added to the sum; otherwise, 0 is added. This lets us sum only the last day's logs within the same 7-day query.

$last_day = math.round(sum(if (ingestion.start_time > (timestamp.current_seconds() - 86400), ingestion.log_volume, 0)) / (1024 * 1024 * 1024) / 1, 2)
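Here is the conditional-sum trick as a small Python sketch. The (start_time, log_volume) tuples are hypothetical, with start times in epoch seconds to match timestamp.current_seconds(). The now parameter exists only to make the example deterministic.

```python
import time

BYTES_PER_GIB = 1024 * 1024 * 1024
SECONDS_PER_DAY = 86400


def last_day_gib(events, now=None):
    """Sum only volumes whose start_time falls within the last 24 hours.

    `events` is a list of (start_time_epoch_seconds, log_volume_bytes) tuples,
    a hypothetical stand-in for ingestion.start_time and ingestion.log_volume.
    """
    if now is None:
        now = time.time()  # analogue of timestamp.current_seconds()
    cutoff = now - SECONDS_PER_DAY
    # Same trick as the query: add the volume if recent, otherwise add 0.
    total = sum(volume if start > cutoff else 0 for start, volume in events)
    return round(total / BYTES_PER_GIB, 2)


now = 1_700_000_000
events = [
    (now - 3600, 2 * BYTES_PER_GIB),                 # 1 hour ago: counted
    (now - 3 * SECONDS_PER_DAY, 5 * BYTES_PER_GIB),  # 3 days ago: ignored
]
print(last_day_gib(events, now=now))  # 2.0
```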

Putting It All Together

When we combine these steps and add the final $ratio calculation, we get our complete query.

ingestion.log_type != ""
ingestion.log_type != "FORWARDER_HEARTBEAT"
ingestion.component = "Ingestion API"
$log_type = ingestion.log_type

match:
  $log_type

outcome:
  $last_day = math.round(sum(if (ingestion.start_time > (timestamp.current_seconds() - 86400), ingestion.log_volume, 0)) / (1024 * 1024 * 1024) / 1, 2)
  $daily_average = math.round(sum(ingestion.log_volume) / (1024 * 1024 * 1024) / 7, 2)
  $ratio = $last_day / $daily_average

condition:
  $last_day > 0 and $daily_average > 0 and $ratio > 1.5

order:
  $ratio desc

The condition block is key:

- $last_day > 0 and $daily_average > 0: This is a safety check to guard against division-by-zero errors and to ensure we're only looking at active log sources.

- $ratio > 1.5: This is our main trigger. It is the mathematical way of saying "the last day's volume is more than 50% greater than the 7-day average." (A ratio of 2.0 would mean a 100% spike, or double the volume.)
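A minimal Python sketch of the condition block and of how a ratio translates into a percentage spike. The function names are illustrative. Unlike the query, where $ratio is computed in the outcome block, this sketch checks the guard before dividing.

```python
def is_spiking(last_day, daily_average, threshold=1.5):
    """Mirror the condition block: both values positive and ratio above threshold."""
    if last_day <= 0 or daily_average <= 0:
        return False  # guard checked before dividing
    return last_day / daily_average > threshold


def spike_percent(ratio):
    """Express a ratio as a percentage above the baseline (1.5 -> 50%)."""
    return (ratio - 1) * 100


print(is_spiking(3.0, 1.5))  # True  (ratio 2.0)
print(is_spiking(1.5, 1.0))  # False (a ratio of exactly 1.5 is not > 1.5)
print(spike_percent(2.0))    # 100.0
```

Because the 7-day total includes the last day itself, a spike also raises its own baseline. Six days at 1 GiB followed by a 2 GiB day gives a daily average of 8/7 ≈ 1.14 GiB and a ratio of about 1.75, not 2.0. For the same reason, ignoring rounding, the ratio can never exceed 7.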

When you save this in a Google Security Operations dashboard widget, you'll get a clean table showing any log source that is currently spiking, sorted by the most severe spike first.

Stage 2 - Building the playbook

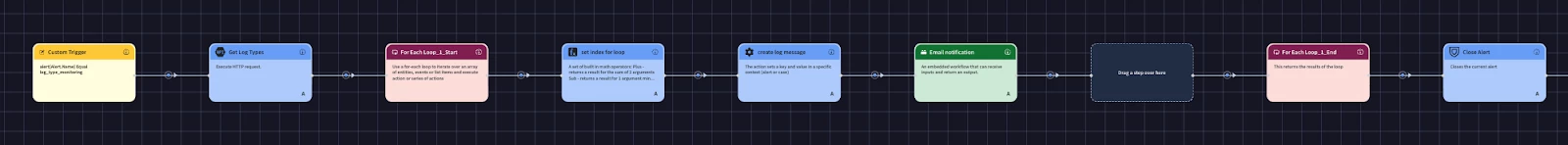

Now that our "detector" widget is ready, we need to build our "alerter" to make that data actionable. We'll create a Google Security Operations playbook that automatically runs our widget's query, checks the results, and then generates the custom alert.

Step 1: Configure the Trigger

The first step in any playbook is its trigger. For this use case, we will select a Custom Trigger. This type of trigger allows us to define a custom schema for the data that starts the playbook. We'll circle back to connect this at the end, but for now, just add it as the starting point.

Step 2: Get the Widget Data via API

Our first action is to get the results from the dashboard widget we built in Stage 1. The playbook needs to "run" that query on demand to get the latest data. To do this, we'll use the Google Cloud API integration.

Add the “Execute HTTP request” action and configure it with the following details:

- Method: POST

- URL Path:

https://<region>-chronicle.googleapis.com/v1alpha/projects/<projects>/locations/<region>/instances/<instances>/dashboardQueries:execute

(Note: You must replace the placeholders like <region>, <projects>, and <instances> with your specific Google Security Operations environment details.)

- Headers:

{
  "Content-Type": "application/json; charset=utf-8",
  "Accept": "application/json",
  "User-Agent": "GoogleSecOps"
}

- Body Payload:

{
  "query": {
    "name": "projects/<projects>/locations/<region>/instances/<instances>/dashboardQueries/<queryid>"
  },
  "filters": [],
  "usePreviousTimeRange": false,
  "clearCache": true
}

There are two critical parts to this payload:

- name: You must replace <queryid> with the unique ID of the dashboard query you created in Stage 1. You can typically find this ID in the URL when you are editing the dashboard widget.

- clearCache: true: This flag is essential. It forces the query to rerun against the latest data every time the playbook is triggered, ensuring we never act on stale information.
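If you want to test the API call outside the playbook, the following Python sketch builds, without sending, the same request using only the standard library. The region, project, instance, and query ID values are hypothetical placeholders. The Authorization header is an assumption: a standard OAuth 2.0 bearer token, which inside the playbook the Google Cloud API integration is expected to supply from its configured credentials.

```python
import json
import urllib.request

# Hypothetical placeholder values: substitute your own environment details.
REGION = "us"
PROJECT = "my-project"
INSTANCE = "my-instance"
QUERY_ID = "my-query-id"


def build_execute_request(access_token):
    """Build (but do not send) the dashboardQueries:execute request from Step 2."""
    url = (
        f"https://{REGION}-chronicle.googleapis.com/v1alpha/projects/{PROJECT}"
        f"/locations/{REGION}/instances/{INSTANCE}/dashboardQueries:execute"
    )
    body = {
        "query": {
            "name": f"projects/{PROJECT}/locations/{REGION}"
                    f"/instances/{INSTANCE}/dashboardQueries/{QUERY_ID}"
        },
        "filters": [],
        "usePreviousTimeRange": False,
        "clearCache": True,  # force a fresh run instead of cached results
    }
    headers = {
        "Content-Type": "application/json; charset=utf-8",
        "Accept": "application/json",
        "User-Agent": "GoogleSecOps",
        # Assumption: standard OAuth 2.0 bearer token authentication.
        "Authorization": f"Bearer {access_token}",
    }
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


req = build_execute_request("TOKEN")
print(req.get_method(), req.full_url)
```

Sending it would be a matter of passing req to urllib.request.urlopen with a valid token; the sketch stops short of that so it runs without credentials.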

When this action runs, it executes our query. Its JSON output, containing our list of spiking log sources (if any), then becomes available to the next steps in the playbook.

Step 3: Loop Through the Results

We'll add a Loop action to our playbook. This action will take the list of log types from the previous action and run the subsequent actions one time for each log type.

Configure the loop with the following parameters:

- Loop over: List

- Items: [Get Log Types.JsonResult| "response_data.results.values.value.stringVal"]

- Delimiter: ,

Here's what this configuration does:

The Items field points to the JSON output from our previous action.

By setting Loop over to List, the playbook will now execute any actions nested inside this loop one by one for each log type in the list. If the action returns no results (meaning no logs are spiking), the list will be empty, and the loop will simply be skipped, generating no alerts.
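The Items expression walks the action's JsonResult along a dotted path. The Python sketch below models that behavior with a hypothetical pluck helper that collects every match and descends into lists along the way. The response shape, including the column key, is an assumption for illustration, so inspect your own action's JsonResult before relying on it. In particular, if your response also returns last_day and daily_average as double values, a flattened doubleVal list would mix them with the ratios.

```python
def pluck(node, path):
    """Collect every value at a dotted path, descending into lists along the way."""
    if not path:
        return node if isinstance(node, list) else [node]
    key, _, rest = path.partition(".")
    if isinstance(node, list):
        return [value for item in node for value in pluck(item, path)]
    if isinstance(node, dict) and key in node:
        return pluck(node[key], rest)
    return []


# Hypothetical JsonResult shape, for illustration only.
json_result = {
    "response_data": {
        "results": [
            {"column": "log_type", "values": [
                {"value": {"stringVal": "WINEVTLOG"}},
                {"value": {"stringVal": "PAN_FIREWALL"}},
            ]},
            {"column": "ratio", "values": [
                {"value": {"doubleVal": 2.31}},
                {"value": {"doubleVal": 1.62}},
            ]},
        ]
    }
}

log_types = pluck(json_result, "response_data.results.values.value.stringVal")
for log_type in log_types:  # an empty list means the loop body never runs
    print("processing", log_type)
```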

Now that we have our loop set up, we'll add actions inside it. These actions will run once for each spiking log type that the loop finds, allowing us to process each one individually.

Step 4: Adjusting the Loop Index

First, we need to solve a common data-handling problem. The Loop.Index variable is 1-based (it counts 1, 2, 3...), but JSON arrays and lists are 0-based (they start at 0, 1, 2...). To pull the correct data from our JSON response, we must first subtract 1 from the loop's index.

Add a Functions - Math arithmetic action with these parameters:

- Function: Sub

- Arg 1: [Loop.Index]

- Arg 2: 1

Rename this step to “set index for loop”. The name matters: the very next step references its output (the new 0-based index) as [set index for loop.ScriptResult].
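A quick Python illustration of the off-by-one adjustment, using hypothetical loop items. The helper name is illustrative. Without the subtraction, the last iteration would ask for an index one past the end of the list (an IndexError in Python).

```python
log_types = ["WINEVTLOG", "PAN_FIREWALL"]  # hypothetical loop items


def to_zero_based(loop_index):
    """Equivalent of the Math arithmetic 'Sub' with Arg 1 = [Loop.Index] and Arg 2 = 1."""
    return loop_index - 1


# Loop.Index counts from 1, while list positions count from 0.
for loop_index in range(1, len(log_types) + 1):
    print(loop_index, log_types[to_zero_based(loop_index)])
```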

Step 5: Creating the Dynamic Alert Message

With our correct 0-based index, we can now pull the specific log type name and its corresponding spike data from our API call's JSON output. We'll store this information in the Alert Context, which makes it available for the final notification.

Add a Set Context Value action and configure it as follows:

- Scope: Alert

- Key:

[Get Log Types.JsonResult | "response_data.results.values.value.stringVal"| getByIndex("[set index for loop.ScriptResult]")]

- Value:

Today's ingestion volume of the following log type: [Get Log Types.JsonResult | "response_data.results.values.value.stringVal"| getByIndex("[set index for loop.ScriptResult]")] is [Get Log Types.JsonResult| "response_data.results.values.value.doubleVal"| getByIndex("[set index for loop.ScriptResult]")] times the average daily volume of the last seven days.

(Note: Get Log Types is the name of our action from Step 2. If you named your action differently, be sure to use that name in your variables.)

Let's break this down:

- Key: We are dynamically setting the context key to the log type name itself (e.g., WINEVTLOG). We use the getByIndex function to pull the correct log type from the stringVal array using our 0-based index.

- Value: We are building a human-readable string for our alert. This string also uses getByIndex to pull the stringVal (the log type name) and the doubleVal (the calculated ratio) into the message.
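To see what the loop produces end to end, here is a simplified Python model of the Set Context Value step. The log types and ratios are hypothetical, the alert context is modeled as a plain dict, and the message text is deliberately shortened rather than copied from the configuration.

```python
# Hypothetical values plucked from the JsonResult for two spiking log types.
log_types = ["WINEVTLOG", "PAN_FIREWALL"]
ratios = [2.31, 1.62]


def build_context_entry(index):
    """Return the (key, value) pair one Set Context Value pass would write."""
    log_type = log_types[index]  # like getByIndex on the stringVal list
    ratio = ratios[index]        # like getByIndex on the doubleVal list
    message = f"{log_type}: last-day ingestion is {ratio}x the 7-day daily average."
    return log_type, message


alert_context = {}
for loop_index in range(1, len(log_types) + 1):
    key, value = build_context_entry(loop_index - 1)  # 0-based index
    alert_context[key] = value

for key, value in alert_context.items():
    print(key, "->", value)
```

Keying the context by log type means each spiking source gets its own entry, so a later notification step can list them all.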

This action will run for every item in the loop, creating a separate, dynamic message for each spiking log source. Now that we have our messages, the final step inside the loop is to send them to our desired notification channel (like email, a new ticket, or a chat message).

Stage 3 - Triggering the Playbook on a Schedule

We have our "detector" query (Stage 1) and our "alerter" playbook (Stage 2). The final piece of the puzzle is to make the playbook run automatically every day. We will do this by creating a Custom Connector that ingests a "dummy" alert once per day in Google Security Operations, which will serve as our playbook's trigger.

Step 1: Configure the Connector

From the Power Ups menu, find and configure a Connectors integration. This connector's sole purpose is to create a simple, predictable alert at a regular interval.

Set the following parameters:

- Run Every: 1 Days

- Product Field Name: product_name

- Event Field Name: event_type

- Alert fields: {"product_name":"log_type_monitoring" , "event_type":"update"}

- Alert name: log_type_monitoring

This configuration will automatically ingest a new, simple alert with the name log_type_monitoring precisely once every 24 hours.

Step 2: Connect the Playbook Trigger

Now, let's go back to our playbook and open the Custom Trigger we added at the very beginning. We will configure this trigger to only start the playbook when it sees the specific dummy alert created by our new connector.

Set the trigger condition to:

alert name = log_type_monitoring
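The connector and trigger only need to agree on one value, the alert name. This Python sketch models that contract with plain dicts. It does not use any Google SecOps SDK, and the alert structure is a hypothetical simplification; only the Alert fields string and alert name come from the connector configuration above.

```python
import json

# Connector parameters from Step 1.
ALERT_FIELDS = '{"product_name":"log_type_monitoring" , "event_type":"update"}'
ALERT_NAME = "log_type_monitoring"


def make_dummy_alert():
    """Hypothetical model of the alert the scheduled connector ingests each day."""
    fields = json.loads(ALERT_FIELDS)
    return {"name": ALERT_NAME, **fields}


def trigger_matches(alert):
    """Model of the Custom Trigger condition: alert name = log_type_monitoring."""
    return alert.get("name") == "log_type_monitoring"


print(trigger_matches(make_dummy_alert()))            # True
print(trigger_matches({"name": "some_other_alert"}))  # False
```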

Conclusion: Your Automated Alert Is Live

And that's it! With this final trigger condition set, your entire solution is active and automated in Google Security Operations.

Let's recap the full workflow:

- Once every day, the Custom Connector runs and ingests a single dummy alert named log_type_monitoring.

- The playbook's Custom Trigger instantly sees this alert, matches the name, and begins execution.

- The playbook calls the Google Cloud API to run your dashboard query, fetching a list of any log sources spiking more than 50% above their 7-day average.

- If spiking sources are found, the Loop processes each one, builds a dynamic message, and generates your custom alert.

- Finally, the playbook closes the triggering log_type_monitoring alert.

You now have a robust, automated system for detecting significant ingestion anomalies, helping you catch security events or operational issues within a day of their onset.

Now it's your turn. Test this framework in your own Google SecOps environment and see what you discover. We look forward to hearing your feedback and answering any questions in the comments.