There are a few ways to approach this use case; below are their pros and cons. I've provided the relevant search for one of these solutions.

Native Dashboard

Pros:

- Can query data over the entire retention period within your instance (usually 12 months)

- Can schedule delivery of the contents as PDF or CSV

- Based on Looker Embedded, with the flexibility to use table calculations and custom measures

Cons:

- Limited to 5,000 rows

- Querying key/value (K/V) pairs is difficult

- Likely to be deprecated in the near future (this is my assumption)

[preview] Stats and Aggregates Search & Preview Dashboards https://cloud.google.com/chronicle/docs/preview/search/statistics-aggregations-in-udm-search

Pros:

- Can return up to 10,000 rows (double the native dashboard limit)

- Better support for querying K/V pairs

Cons:

- Can only query 3 months' worth of data

- Still in preview, so you are more likely to encounter bugs

- When used with preview dashboards, scheduled delivery of the contents is not currently available
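Conceptually, a stats search filters events, groups them by the `match:` variables, and computes each `outcome:` value per group. The Python sketch below is only a mental model of that behavior, not Chronicle's implementation; the flattened event dictionaries and the helpers `make_event`, `is_remote_logon`, `array_distinct`, and `stats_search` are hypothetical. Assuming `metadata.id` is unique per event, matching on it yields one group, and therefore one row, per event, which is why the example search below produces a table-like result.

```python
from collections import defaultdict

# Hypothetical, flattened UDM-like events for illustration only (not real Chronicle output).
def make_event(event_id, logon_type, host, user):
    return {
        "metadata.id": event_id,
        "metadata.log_type": "WINEVTLOG",
        "metadata.product_event_type": "4624",
        "extensions.auth.auth_details": logon_type,
        "security_result.action": "ALLOW",
        "principal.hostname": host,
        "target.user.userid": user,
    }

events = [
    make_event("a1", "10", "ws01", "alice"),  # logon type 10 (RemoteInteractive): kept
    make_event("a2", "2", "ws01", "alice"),   # logon type 2 (Interactive): filtered out
    make_event("a3", "3", "ws02", "bob"),     # logon type 3 (Network): kept
]

def is_remote_logon(e):
    """Mirror the filter lines of the example search, with the logon-type OR grouped."""
    return (
        e.get("metadata.log_type") == "WINEVTLOG"
        and e.get("metadata.product_event_type") == "4624"
        and e.get("extensions.auth.auth_details") in ("10", "3")
        and e.get("security_result.action") == "ALLOW"
    )

def array_distinct(values):
    """Stand-in for array_distinct(): distinct values, kept in first-seen order in this sketch."""
    result = []
    for v in values:
        if v not in result:
            result.append(v)
    return result

def stats_search(events, match_field, outcome_fields, predicate):
    """Filter events, group them by match_field, and aggregate each outcome field per group."""
    groups = defaultdict(list)  # dicts preserve insertion order, so groups stay in first-seen order
    for e in events:
        if predicate(e):
            groups[e[match_field]].append(e)
    rows = []
    for key, members in groups.items():
        row = {match_field: key}
        for field in outcome_fields:
            row[field] = array_distinct(m[field] for m in members if field in m)
        rows.append(row)
    return rows

# Matching on metadata.id (assumed unique per event) gives one row per event.
for row in stats_search(events, "metadata.id", ["principal.hostname", "target.user.userid"], is_remote_logon):
    print(row)
```

Grouping by a field that repeats, such as `principal.hostname`, would instead collapse several events into one row whose outcome arrays hold the distinct values, which is the aggregate view the stats search is designed for.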

Example search:

```
metadata.id = $MetadataID
extensions.auth.auth_details = $LogonType
metadata.log_type = "WINEVTLOG"
metadata.product_event_type = "4624"
($LogonType = "10" or $LogonType = "3")
security_result.action = "ALLOW"

match:
  $MetadataID

outcome:
  $Event_Timestamp = array_distinct(metadata.event_timestamp.seconds)
  $Extensions_Auth_Auth_Details = array_distinct($LogonType)
  $Principal_hostname = array_distinct(principal.hostname)
  $Principal_ip = array_distinct(principal.ip)
  $Principal_Process_Command_Line = array_distinct(principal.process.command_line)
  $Target_Administrative_Domain = array_distinct(target.administrative_domain)
  $Target_User_Userid = array_distinct(target.user.userid)
```

BigQuery

Pros:

- Can query the entire dataset

Cons:

- Depending on your infrastructure and environment, gaining access may be difficult, and it is subject to your license

As I read this, I am not entirely sure what you have in mind when you say "present this to the analyst". I would expect remote logon events (event ID 4624) to occur quite frequently, so presenting them in a dashboard or rule would be busy or noisy without additional tuning.

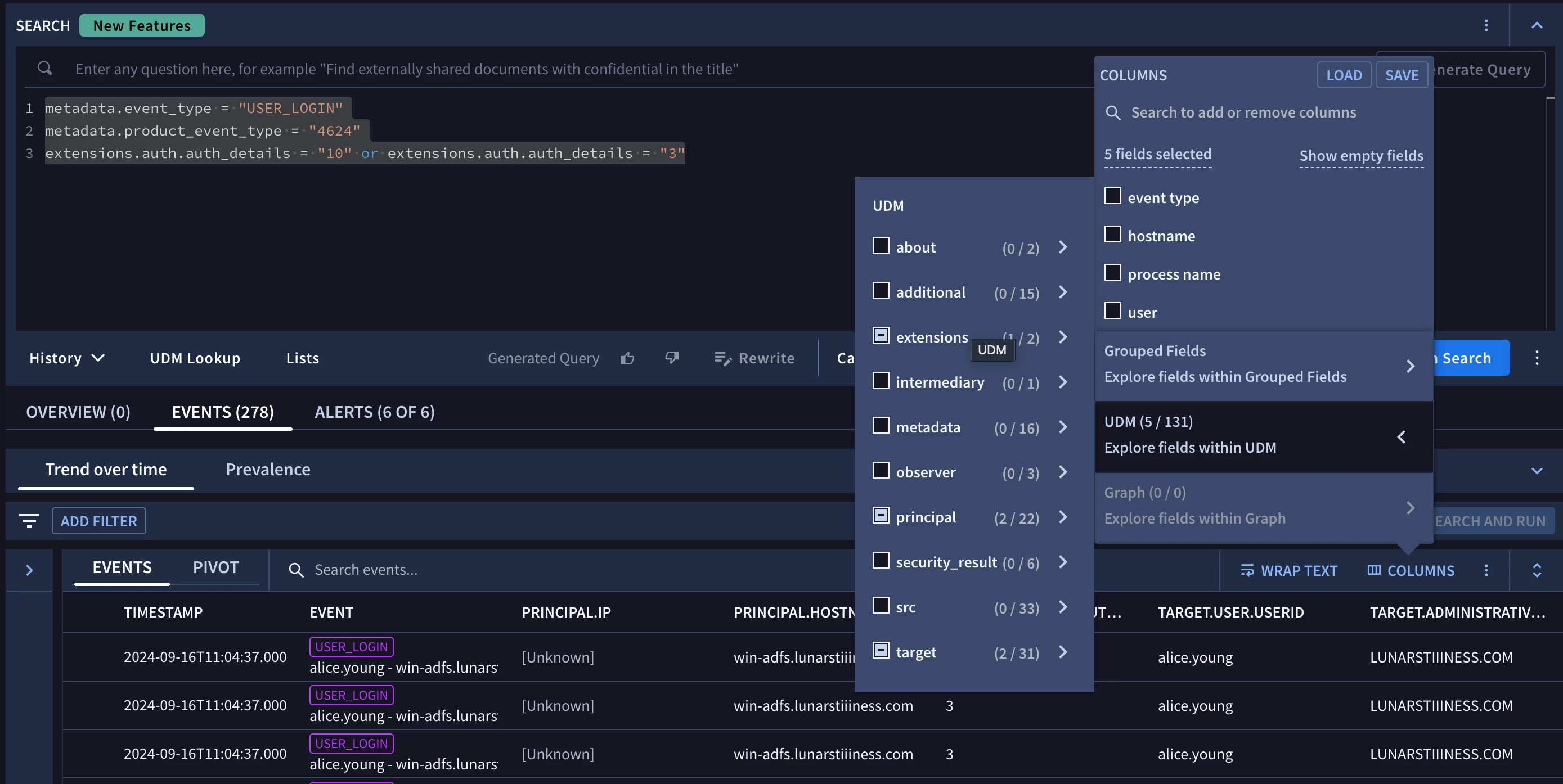

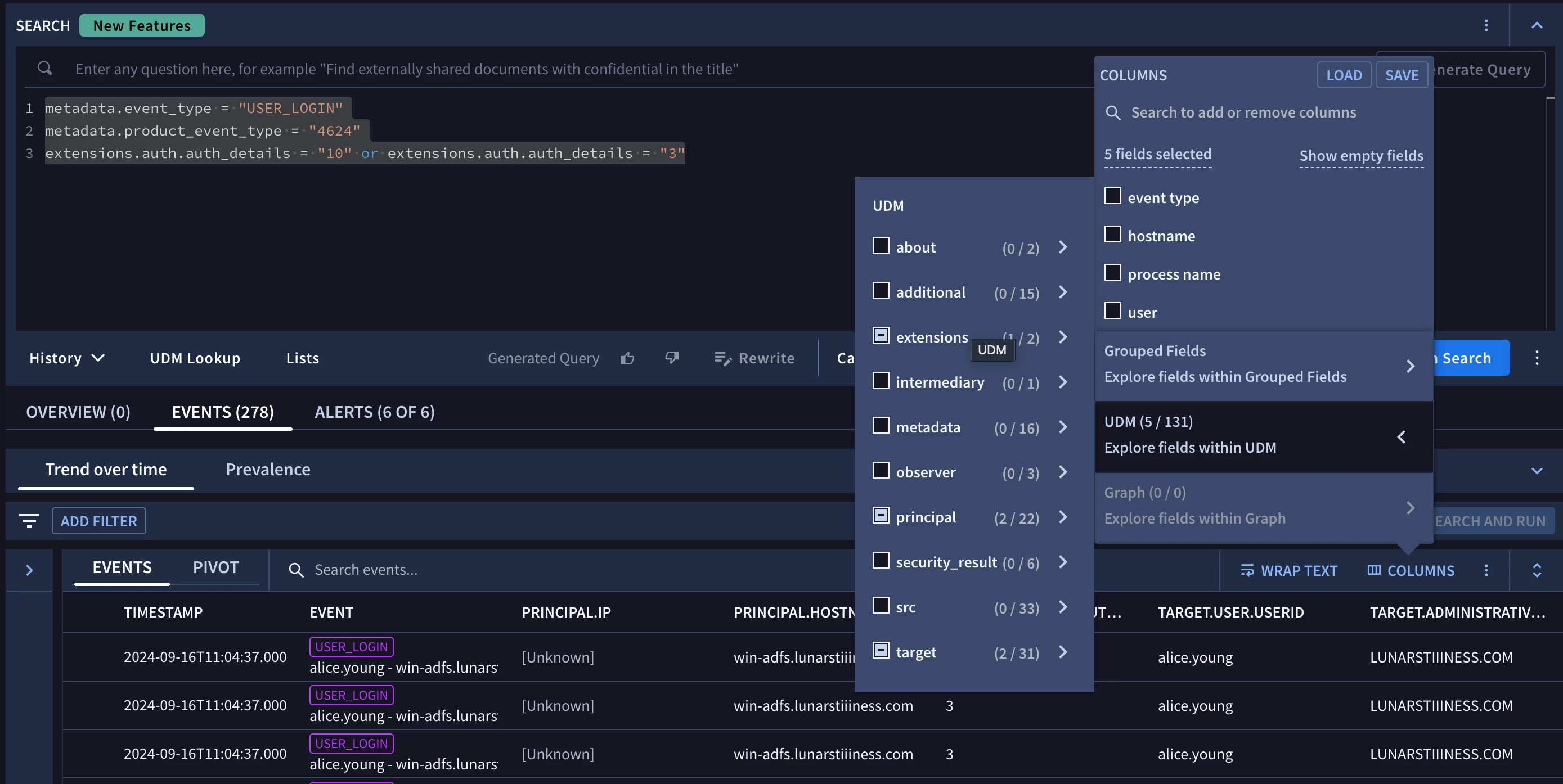

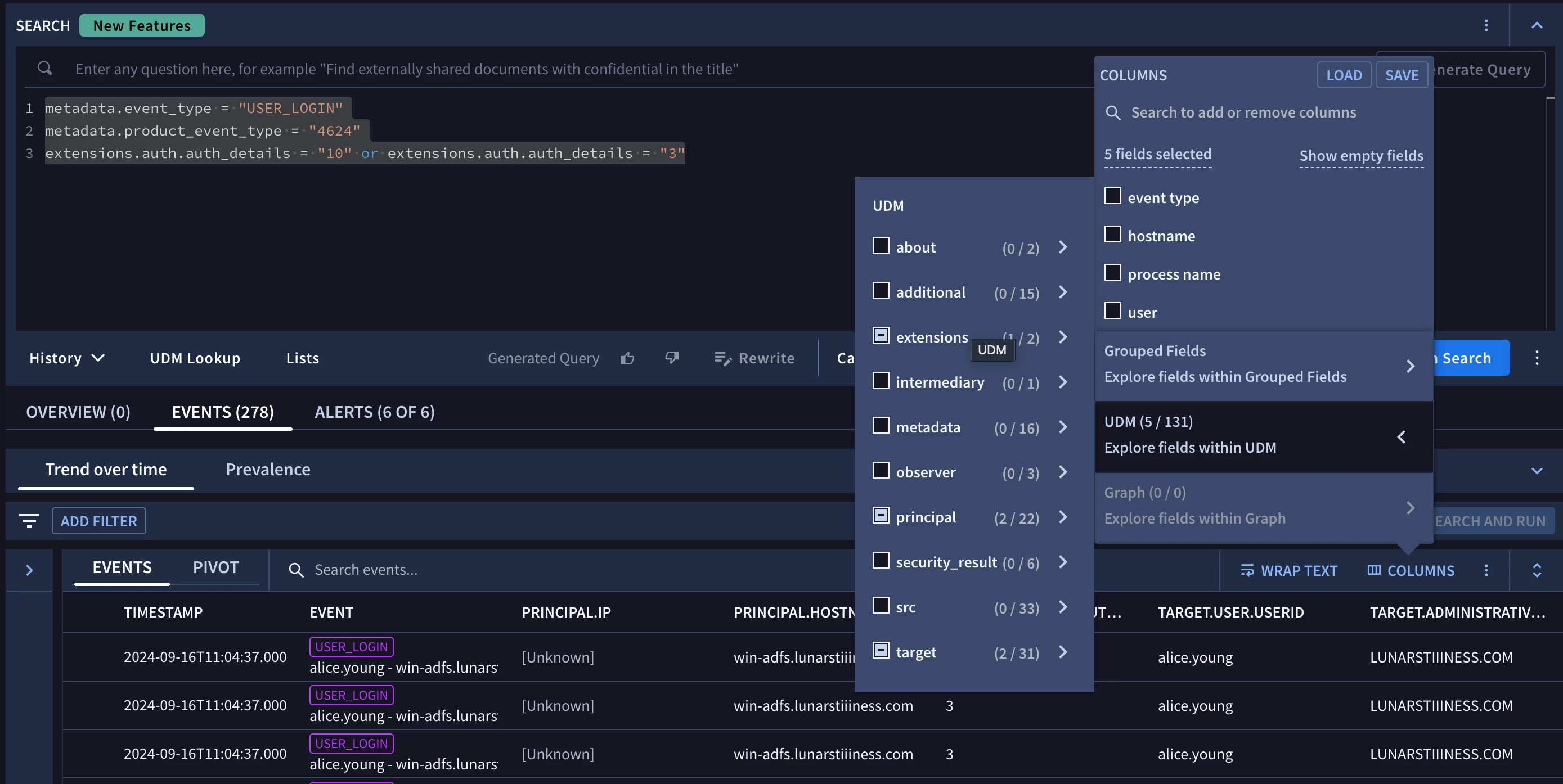

That leaves search, which could easily be built from many of the criteria you provided. You can then use the column selector to choose which columns are loaded for that search (and even save the column set).

```
metadata.event_type = "USER_LOGIN"
metadata.product_event_type = "4624"
(extensions.auth.auth_details = "10" or extensions.auth.auth_details = "3")
```
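Conceptually, a search plus a saved column set behaves like filtering events and then projecting a fixed, ordered list of columns. The Python sketch below only illustrates that idea and is not how Chronicle works internally; the event dictionaries, `SAVED_COLUMNS`, and the helpers `matches_search`, `to_table`, and `render` are hypothetical.

```python
# Hypothetical events; field names mirror UDM paths but the data is made up.
events = [
    {"metadata.event_type": "USER_LOGIN", "metadata.product_event_type": "4624",
     "extensions.auth.auth_details": "10", "principal.hostname": "ws01", "target.user.userid": "alice"},
    {"metadata.event_type": "USER_LOGIN", "metadata.product_event_type": "4624",
     "extensions.auth.auth_details": "2", "principal.hostname": "ws01", "target.user.userid": "alice"},
    {"metadata.event_type": "USER_LOGIN", "metadata.product_event_type": "4624",
     "extensions.auth.auth_details": "3", "principal.hostname": "ws02", "target.user.userid": "bob"},
]

# Plays the role of a saved column set: which fields to show, in order.
SAVED_COLUMNS = ["principal.hostname", "target.user.userid", "extensions.auth.auth_details"]

def matches_search(e):
    """The three search conditions, with the logon-type OR grouped."""
    return (
        e.get("metadata.event_type") == "USER_LOGIN"
        and e.get("metadata.product_event_type") == "4624"
        and e.get("extensions.auth.auth_details") in ("10", "3")
    )

def to_table(events, columns):
    """Keep matching events and project only the chosen columns (missing fields become "")."""
    return [[str(e.get(c, "")) for c in columns] for e in events if matches_search(e)]

def render(rows, columns):
    """Format rows as a fixed-width text table with a header line."""
    widths = [max([len(c)] + [len(r[i]) for r in rows]) for i, c in enumerate(columns)]

    def fmt(values):
        return "  ".join(v.ljust(w) for v, w in zip(values, widths)).rstrip()

    return "\n".join([fmt(columns)] + [fmt(r) for r in rows])

print(render(to_table(events, SAVED_COLUMNS), SAVED_COLUMNS))
```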


@jstoner The analysts I work with are used to defining a time range in their SIEM UI and then running a query that returns a table: each row is an event and each column is a field. They are moving to Chronicle and have asked me to help port some of their existing queries. One of those queries simply finds Windows event logs with event ID 4624 and a remote logon type, with some basic regex filtering against the raw Windows event logs using Python. I am not sure of the best way to replicate that presentation of the data. A saved search seems best, since the analyst can then manipulate the result columns and apply filters. I tried the table visualization in the dashboard creator, but had mixed results because of the number of columns and fields.
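For reference when porting, the Python-side filtering described above might look something like the sketch below. This is an assumption: the analysts' actual script and log format are not shown here. The sketch assumes each raw event is a Windows Security event rendered as XML with `<EventID>` and `<Data Name="...">` elements; the names `remote_logons`, `EVENT_ID_RE`, `DATA_RE`, and `SAMPLE` are hypothetical.

```python
import re

# Assumes raw events are Windows Security event XML, e.g.
# <EventID>4624</EventID> ... <Data Name="LogonType">10</Data>. Adjust to the real format.
EVENT_ID_RE = re.compile(r"<EventID[^>]*>(\d+)</EventID>")
DATA_RE = re.compile(r"<Data Name=['\"](\w+)['\"]>([^<]*)</Data>")  # self-closing <Data .../> is skipped
REMOTE_LOGON_TYPES = {"3", "10"}  # 3 = Network, 10 = RemoteInteractive

def remote_logons(raw_events):
    """Yield the EventData fields of each 4624 event whose LogonType is remote."""
    for raw in raw_events:
        event_id = EVENT_ID_RE.search(raw)
        if event_id is None or event_id.group(1) != "4624":
            continue
        fields = dict(DATA_RE.findall(raw))
        if fields.get("LogonType") in REMOTE_LOGON_TYPES:
            yield fields

# Hypothetical sample events for illustration.
SAMPLE = [
    '<Event><System><EventID>4624</EventID></System><EventData>'
    '<Data Name="TargetUserName">alice</Data><Data Name="LogonType">10</Data>'
    '<Data Name="IpAddress">10.0.0.5</Data></EventData></Event>',
    '<Event><System><EventID>4624</EventID></System><EventData>'
    '<Data Name="TargetUserName">svc_backup</Data><Data Name="LogonType">5</Data></EventData></Event>',
    '<Event><System><EventID Qualifiers="">4625</EventID></System><EventData>'
    '<Data Name="LogonType">3</Data></EventData></Event>',
]

for fields in remote_logons(SAMPLE):
    print(fields["TargetUserName"], fields.get("IpAddress", "-"))
```

The searches earlier in this thread do the event ID and logon type filtering on parsed UDM fields (`metadata.product_event_type` and `extensions.auth.auth_details`), so only any extra regex tuning from a script like this would need to be re-expressed in the search.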

For now, the way to replicate those data columns in the UI is the column selector shown in my screenshots, which lets analysts save and load column sets as needed. We do not currently support a table command that pipes out specific column names, which may be part of what they are asking for. As for the regex, they can add regex functions to the search to tune it further.

Happy to discuss this further offline if you would like.