Hey @tnxtr ,

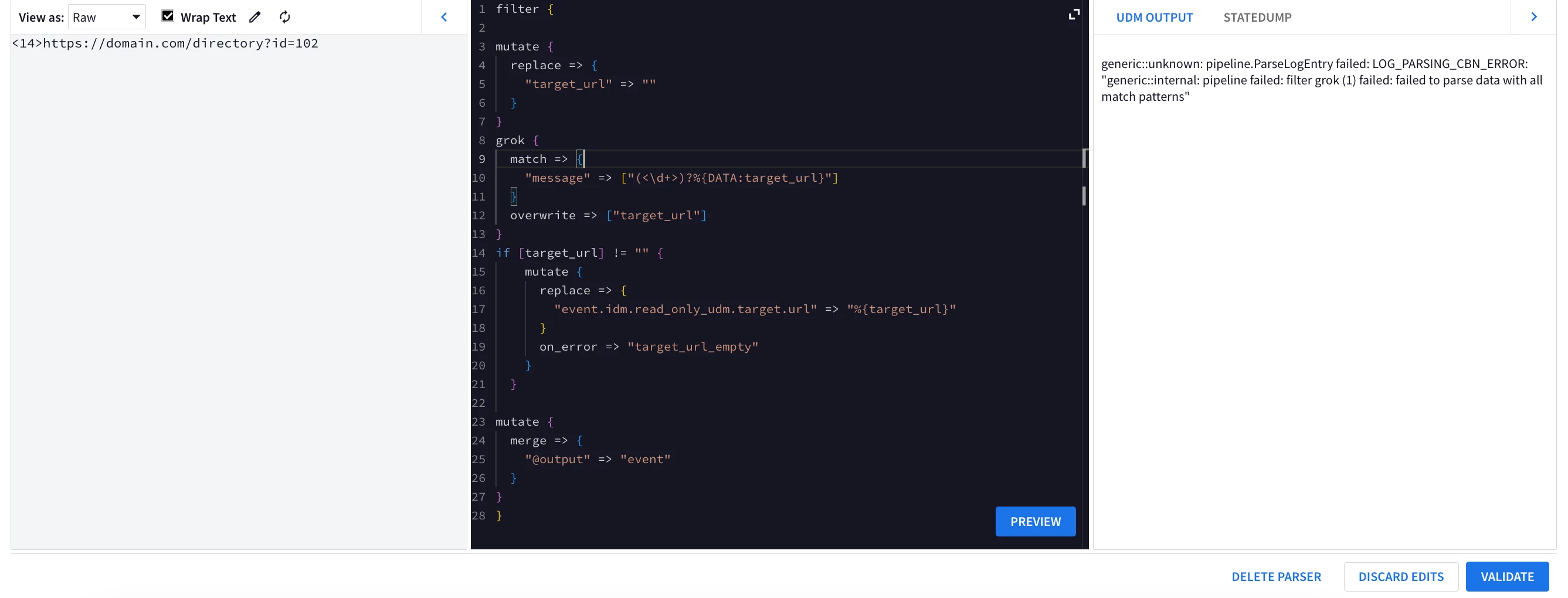

The error says your regex pattern is invalid, so it produces no matches.

One issue is that you need to double-escape the backslash in the part of your regex that matches digits.

E.g. `\\d+` should be `\\\\d+`.

Grok also provides a set of predefined patterns you can use instead of hand-written regex: https://github.com/elastic/logstash/blob/v1.4.2/patterns/grok-patterns
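
To make the idea of predefined patterns concrete, here is a rough Python sketch of how a grok expression expands into an ordinary regex. The pattern table holds simplified stand-ins I wrote for illustration (the real `URI` definition in grok-patterns is more thorough), and the sample log line with a `<134>` prefix is an assumption, not your actual log.

```python
import re

# Simplified stand-ins for two grok patterns (assumption: the real
# definitions in grok-patterns are stricter, URI in particular).
GROK_PATTERNS = {
    "INT": r"[+-]?[0-9]+",
    "URI": r"[A-Za-z][A-Za-z0-9+.-]*://\S+",
}


def expand_grok(expression: str) -> str:
    """Turn %{NAME:field} into a named group and %{NAME} into a plain group."""

    def substitute(match: re.Match) -> str:
        name, field = match.group(1), match.group(2)
        body = GROK_PATTERNS[name]
        return f"(?P<{field}>{body})" if field else f"(?:{body})"

    return re.sub(r"%\{(\w+)(?::(\w+))?\}", substitute, expression)


# Hypothetical sample line: a syslog-style priority followed by a URL.
regex = expand_grok("<%{INT}>%{URI:target_url}")
result = re.search(regex, "<134>https://something.com")
print(result.group("target_url") if result else None)
```

Using a named pattern such as `%{INT}` for the digits means there is no backslash to escape in the parser string at all, which may be the simpler route here.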

You're also going to run into an issue because UDM requires some metadata fields. Here is an updated, working parser snippet that uses the Grok `URI` pattern. I also set some placeholder metadata fields so you can see some output.

    filter {
      mutate {
        replace => {
          "target_url" => ""
        }
      }

      mutate {
        replace => {
          "event.idm.read_only_udm.metadata.vendor_name" => "VEndor"
          "event.idm.read_only_udm.metadata.product_name" => "Product"
          "event.idm.read_only_udm.metadata.product_version" => "1.0"
          "event.idm.read_only_udm.metadata.log_type" => "YOUR_LOG_TYPE"
          "event.idm.read_only_udm.metadata.event_type" => "GENERIC_EVENT"
        }
        on_error => "zerror.replace_metadata"
      }

      grok {
        match => {
          "message" => ["(<\\\\d+>)%{URI:target_url}"]
        }
        on_error => "no_target_url"
        overwrite => ["target_url"]
      }

      if [target_url] != "" {
        mutate {
          replace => {
            "event.idm.read_only_udm.target.url" => "%{target_url}"
          }
          on_error => "target_url_empty"
        }
      }

      mutate {
        merge => {
          "@output" => "event"
        }
      }
    }

UDM output from the above parser:

    metadata.event_timestamp: "2025-01-23T15:16:35Z"
    metadata.event_type: "GENERIC_EVENT"
    metadata.vendor_name: "VEndor"
    metadata.product_name: "Product"
    metadata.product_version: "1.0"
    metadata.log_type: "YOUR_LOG_TYPE"
    target.url: "https://something.com"
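
If it helps to see the parser's flow outside of the parser syntax, here is a minimal Python sketch of the same steps: initialise `target_url` to an empty string, set the placeholder metadata, try the match, and only populate `target.url` when something was extracted. This is an illustration under assumptions, not how the platform actually runs parsers: the regex is a rough stand-in for the grok expression, the `parse` function is a hypothetical name, and the `<134>` prefix in the usage line is a made-up sample.

```python
import re

# Assumption: a rough stand-in for the grok expression in the parser
# (a <digits> prefix followed by something URL-shaped).
LINE_RE = re.compile(r"<\d+>(?P<target_url>[A-Za-z][A-Za-z0-9+.-]*://\S+)")


def parse(message: str) -> dict:
    """Mimic the parser's steps on a single raw log line."""
    # First mutate: make sure target_url exists so the later check is safe.
    state = {"target_url": "", "no_target_url": False}

    # Second mutate: placeholder metadata so an event can be produced.
    event = {
        "metadata.vendor_name": "VEndor",
        "metadata.product_name": "Product",
        "metadata.product_version": "1.0",
        "metadata.log_type": "YOUR_LOG_TYPE",
        "metadata.event_type": "GENERIC_EVENT",
    }

    # grok: on a failed match, record a flag instead of aborting.
    found = LINE_RE.search(message)
    if found:
        state["target_url"] = found.group("target_url")
    else:
        state["no_target_url"] = True

    # Conditional mutate: only set target.url when a URL was extracted.
    if state["target_url"] != "":
        event["target.url"] = state["target_url"]

    return event


print(parse("<134>https://something.com"))
```

A line that does not match still yields an event with the metadata fields, just without `target.url`, which is the reason for initialising `target_url` up front and using `on_error` on the grok step.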