Log Parsing Issue in Restricted Pipeline (Chronicle/CBN/Logstash)

We are encountering persistent syntax and parsing errors in a highly restricted log processing pipeline (likely based on Logstash/Grok, possibly within Google Chronicle or CBN). We need help structuring the code so that it handles the strict syntax rules and the mixed log types without using any conditional logic.

1. Environment and Constraints

- Environment: Highly restricted log parsing pipeline (cannot use standard Logstash features).
- Confirmed constraints (key failures):
  - NO `if`/`else` conditionals (`if [field]` fails, `if "tag" in [tags]` fails).
  - NO semicolons (`;`) allowed anywhere in the config.
  - Field names must use snake_case (e.g., `host_name`).
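Because field names must be snake_case, the capture names in a grok pattern can be checked offline before each deployment. A minimal Ruby sketch, assuming captures use the standard grok `%{PATTERN:field}` or `%{PATTERN:field:type}` syntax; the helper name `non_snake_case_fields` is hypothetical, and the exact snake_case rule (lowercase letters and digits joined by single underscores) is our reading of the constraint:

```ruby
# Hypothetical helper: return every grok capture name that is not snake_case.
# Assumed rule: starts with a lowercase letter, then lowercase letters/digits,
# with single underscores between words (e.g. host_name, http_status_code).
SNAKE_CASE = /\A[a-z][a-z0-9]*(?:_[a-z0-9]+)*\z/

# Matches %{PATTERN:field} and %{PATTERN:field:type}; captures only "field".
# Unnamed references such as %{POSINT} are ignored.
GROK_CAPTURE = /%\{\w+:([^:}]+)(?::\w+)?\}/

def non_snake_case_fields(grok_pattern)
  grok_pattern.scan(GROK_CAPTURE).flatten.reject { |name| SNAKE_CASE.match?(name) }
end

if __FILE__ == $PROGRAM_NAME
  pattern = "%{IP:source_ip}:%{NUMBER:sourcePort} %{DATA:host_name}"
  p non_snake_case_fields(pattern) # => ["sourcePort"]
end
```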

2. The Core Problem: Mixed Log Types

Our pipeline receives two distinct log formats. The pipeline crashes when we try to parse the second one (the VMware ESX log), and also whenever the config contains even a simple syntax error.

| Log Type | Sample Log (Raw) |
| --- | --- |
| Envoy Access Log (Primary Goal) | `<166>2025-10-22T14:00:00.095Z S01PSSESXUCS055.mgmt.ad.usfs.llc envoy-access[2099405]: POST /hgw/host-14948/vpxa 200 via_upstream - 15887 243 gzip 8 8 0 10.9.10.94:49874 HTTP/1.1 TLSv1.2 10.9.36.55:443 127.0.0.1:40729 HTTP/1.1 - 127.0.0.1:8089 "3addb69f" "QueryBatchPerformanceStatisticsVpxa"` |
| VMware ESX Log (Crashing Log) | `<166>2025-10-21T22:25:49.901Z S01PDSESXUCS054. healthd[2100030]: [Originator@6876 sub=PluginLauncher] Launching binary: /usr/lib/vmware/healthd/plugins/bin/ssd_storage ++group=healthd-plugins,mem=40 -u http://!vmwLocalSocketHealthd` |

3. The Final Attempted Code (for Envoy)

We are currently trying to deploy this final, single-Grok-based solution for the primary Envoy logs, which keeps failing due to hidden syntax issues:

Ruby

filter {

# 1. GROK: Parse the entire log line in one resilient step.

grok {

tag_on_failure => ["_grok_failure_envoy"]

match => {

"message" => "<%{POSINT}>%{SPACE}?%{TIMESTAMP_ISO8601:timestamp} %{DATA:host_name} %{DATA:process_detail}: %{WORD:http_method} %{URIPATH:http_path} %{NUMBER:http_status_code} %{DATA:route_status} - %{NUMBER:http_response_bytes} %{NUMBER:http_request_bytes} %{DATA:compression} %{NUMBER:duration_total} %{NUMBER:duration_upstream} %{NUMBER:duration_request} %{DATA:source_address} %{DATA:client_protocol} %{DATA:destination_address} %{DATA:upstream_address} %{DATA:upstream_protocol} - %{DATA:internal_address} %{DATA:request_id} %{GREEDYDATA:custom_operation}"

}

}

# 2. SEGMENTED GROK: Split IP:Port fields.

grok { source => "source_address"; match => { "source_address" => "%{IP:source_ip}:%{NUMBER:source_port}" } }

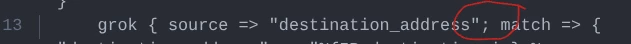

grok { source => "destination_address"; match => { "destination_address" => "%{IP:destination_ip}:%{NUMBER:destination_port}" } }

grok { source => "upstream_address"; match => { "upstream_address" => "%{IP:upstream_ip}:%{NUMBER:upstream_port}" } }

grok { source => "internal_address"; match => { "internal_address" => "%{IP:internal_ip}:%{NUMBER:internal_port}" } }

# 3. FINAL CLEANUP AND TYPE CONVERSION

mutate {

gsub => [

"custom_operation", "\"", ""

]

convert => {

"http_status_code" => "integer"

"http_response_bytes" => "integer"

"http_request_bytes" => "integer"

"duration_total" => "integer"

"duration_upstream" => "integer"

"duration_request" => "integer"

"source_port" => "integer"

"destination_port" => "integer"

"upstream_port" => "integer"

"internal_port" => "integer"

}

remove_field => [

"message", "POSINT", "source_address", "destination_address", "upstream_address", "internal_address",

"process_detail", "route_status", "compression", "client_protocol", "upstream_protocol", "request_id"

]

}

}
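One way to test the main Envoy pattern outside the restricted pipeline is to expand it into an ordinary regular expression and run it against the two sample lines from the table. The Ruby sketch below does that. The `GROK_APPROX` definitions are simplified assumptions (only `DATA` as `.*?` and `GREEDYDATA` as `.*` follow the standard grok definitions closely), so the results are indicative, not a substitute for testing in the real parser:

```ruby
# Offline sketch: approximate the posted Envoy grok pattern with a Ruby regex.
# ASSUMPTION: simplified stand-ins for the grok library patterns.
GROK_APPROX = {
  "POSINT" => '[1-9][0-9]*',
  "SPACE" => '\s*',
  "TIMESTAMP_ISO8601" => '\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d+)?Z',
  "DATA" => '.*?',
  "WORD" => '\w+',
  "URIPATH" => '/\S*',
  "NUMBER" => '[+-]?\d+(?:\.\d+)?',
  "GREEDYDATA" => '.*'
}.freeze

# Expand %{NAME} to a non-capturing group and %{NAME:field} to a named group.
def grok_to_regex(pattern)
  expanded = pattern.gsub(/%\{(\w+)(?::(\w+))?\}/) do
    name, field = Regexp.last_match(1), Regexp.last_match(2)
    body = GROK_APPROX.fetch(name)
    field ? "(?<#{field}>#{body})" : "(?:#{body})"
  end
  Regexp.new(expanded) # unanchored, like grok's default behaviour
end

# The Envoy pattern from the config above; joining on single spaces
# reproduces the original string exactly.
ENVOY_PATTERN = %w[
  <%{POSINT}>%{SPACE}?%{TIMESTAMP_ISO8601:timestamp} %{DATA:host_name}
  %{DATA:process_detail}: %{WORD:http_method} %{URIPATH:http_path}
  %{NUMBER:http_status_code} %{DATA:route_status} - %{NUMBER:http_response_bytes}
  %{NUMBER:http_request_bytes} %{DATA:compression} %{NUMBER:duration_total}
  %{NUMBER:duration_upstream} %{NUMBER:duration_request} %{DATA:source_address}
  %{DATA:client_protocol} %{DATA:destination_address} %{DATA:upstream_address}
  %{DATA:upstream_protocol} - %{DATA:internal_address} %{DATA:request_id}
  %{GREEDYDATA:custom_operation}
].join(" ")

ENVOY_SAMPLE = '<166>2025-10-22T14:00:00.095Z S01PSSESXUCS055.mgmt.ad.usfs.llc ' \
               'envoy-access[2099405]: POST /hgw/host-14948/vpxa 200 via_upstream - 15887 243 ' \
               'gzip 8 8 0 10.9.10.94:49874 HTTP/1.1 TLSv1.2 10.9.36.55:443 127.0.0.1:40729 ' \
               'HTTP/1.1 - 127.0.0.1:8089 "3addb69f" "QueryBatchPerformanceStatisticsVpxa"'

ESX_SAMPLE = '<166>2025-10-21T22:25:49.901Z S01PDSESXUCS054. healthd[2100030]: ' \
             '[Originator@6876 sub=PluginLauncher] Launching binary: ' \
             '/usr/lib/vmware/healthd/plugins/bin/ssd_storage ++group=healthd-plugins,mem=40 ' \
             '-u http://!vmwLocalSocketHealthd'

if __FILE__ == $PROGRAM_NAME
  envoy_regex = grok_to_regex(ENVOY_PATTERN)
  envoy_regex.match(ENVOY_SAMPLE).named_captures.each do |field, value|
    puts format("%-20s %s", field, value)
  end
  puts "ESX line matches Envoy pattern: #{!envoy_regex.match(ESX_SAMPLE).nil?}"
end
```

Under these approximations the ESX line does not match at all, so in standard grok it would only receive the `_grok_failure_envoy` tag. The Envoy line does match, but the lazy `%{DATA}` fields shift: the sample has six space-separated tokens between `duration_request` and the next ` - `, while the pattern has five `%{DATA}` fields there, so `destination_address` captures `TLSv1.2` and `upstream_protocol` captures `127.0.0.1:40729 HTTP/1.1`. Since `DATA` is `.*?` in the standard patterns, the same shift is likely in the real pipeline, and the `destination_address` IP:port split would then fail.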

4. The Exact Error

The deployment is failing with a persistent syntax error, despite rigorous manual checks:

generic::invalid_argument: failed to create augmentor pipeline: failed to parse config: Parse error line 13, column 38 : illegal token ';'
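In the config as posted, the literal semicolons we can see are in the four one-line `grok { source => ...; match => ... }` blocks under `# 2. SEGMENTED GROK`. (Separately, `source` is not an option of the standard Logstash grok filter, which takes the source field as the key inside `match`; whether the restricted pipeline accepts it is unknown.) To confirm which character the parser means by line 13, column 38, a small script can print the position of every `;` and every non-ASCII character, which covers lookalike semicolons (U+037E, U+FF1B), smart quotes and non-breaking spaces pasted from a web page. A minimal Ruby sketch; the helper name is hypothetical, and it assumes the parser counts lines and columns from 1, in characters rather than bytes:

```ruby
# Hypothetical helper: report the 1-based line and column of every ';' and of
# every non-ASCII character (lookalike semicolons, smart quotes, NBSP, ...).
def suspicious_characters(text)
  findings = []
  text.each_line.with_index(1) do |line, line_no|
    line.each_char.with_index(1) do |char, col|
      next unless char == ";" || !char.ascii_only?
      findings << { line: line_no, column: col, char: char, codepoint: format("U+%04X", char.ord) }
    end
  end
  findings
end

if __FILE__ == $PROGRAM_NAME
  ARGV.each do |path|
    # scrub replaces invalid UTF-8 bytes with U+FFFD so they are reported too
    text = File.read(path, encoding: "UTF-8").scrub
    suspicious_characters(text).each do |f|
      puts "#{path}:#{f[:line]}:#{f[:column]} #{f[:codepoint]} #{f[:char].inspect}"
    end
  end
end
```

For example, `ruby find_suspicious_chars.rb parser.conf` (both file names are placeholders) prints one `file:line:column` entry per suspect character, which can be compared directly with the position in the error message.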

5. Request for Help

- Semicolon Hunter: Can anyone spot a hidden semicolon or an encoding issue in the long Grok pattern string that would cause the parser to see it at line 13, column 38?
- Best Practice for No-Conditional Parsing: Given the constraint of NO conditionals, what is the best practice for deploying multiple Grok patterns to handle the two drastically different log types (Envoy vs. ESX) without the pipeline crashing on the first failure? Should we rely solely on `tag_on_failure` and `tag_on_success`?
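In standard Logstash, a single grok filter can list several patterns for the same field, e.g. `match => { "message" => [ "<envoy pattern>", "<esx pattern>" ] }`. The patterns are tried in order and, with the default `break_on_match => true`, the first one that matches wins, so no `if` is needed to pick a format. Whether the restricted pipeline accepts an array there is an assumption to verify. Also, standard grok documents `tag_on_failure` but no `tag_on_success`; the common `add_tag` option is applied only when the filter succeeds. The Ruby sketch below merely simulates that first-match-wins behaviour; both regexes and their field names (`process_name`, `process_id`, `vmware_context`, `event_message`) are hypothetical simplifications, not production patterns:

```ruby
# Simulation of grok's first-match-wins behaviour with several patterns for one
# field (break_on_match => true). Both regexes are hypothetical stand-ins.
PATTERNS = [
  # Envoy access log (abbreviated: only the leading fields).
  /\A<\d+>(?<timestamp>\S+) (?<host_name>\S+) envoy-access\[\d+\]: (?<http_method>\w+) (?<http_path>\S+) (?<http_status_code>\d+) /,
  # VMware ESX daemon log: program[pid]: [context] message
  /\A<\d+>(?<timestamp>\S+) (?<host_name>\S+) (?<process_name>[\w.-]+)\[(?<process_id>\d+)\]: \[(?<vmware_context>[^\]]*)\] (?<event_message>.*)\z/
].freeze

# Standard grok's default failure tag.
FAILURE_TAG = "_grokparsefailure"

def parse_event(message)
  PATTERNS.each do |regex|
    match = regex.match(message)
    return match.named_captures if match # first match wins; later patterns are skipped
  end
  { "tags" => [FAILURE_TAG] } # nothing matched: tag the event instead of raising
end

ESX_SAMPLE = '<166>2025-10-21T22:25:49.901Z S01PDSESXUCS054. healthd[2100030]: ' \
             '[Originator@6876 sub=PluginLauncher] Launching binary: ' \
             '/usr/lib/vmware/healthd/plugins/bin/ssd_storage ++group=healthd-plugins,mem=40 ' \
             '-u http://!vmwLocalSocketHealthd'

p parse_event(ESX_SAMPLE) if __FILE__ == $PROGRAM_NAME
```

Ordering matters in this design: the more specific pattern (here the Envoy one, which requires the literal `envoy-access` program name) should come first so that a broader pattern cannot claim its lines.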