Hi,

I am trying to parse a single field from a log.

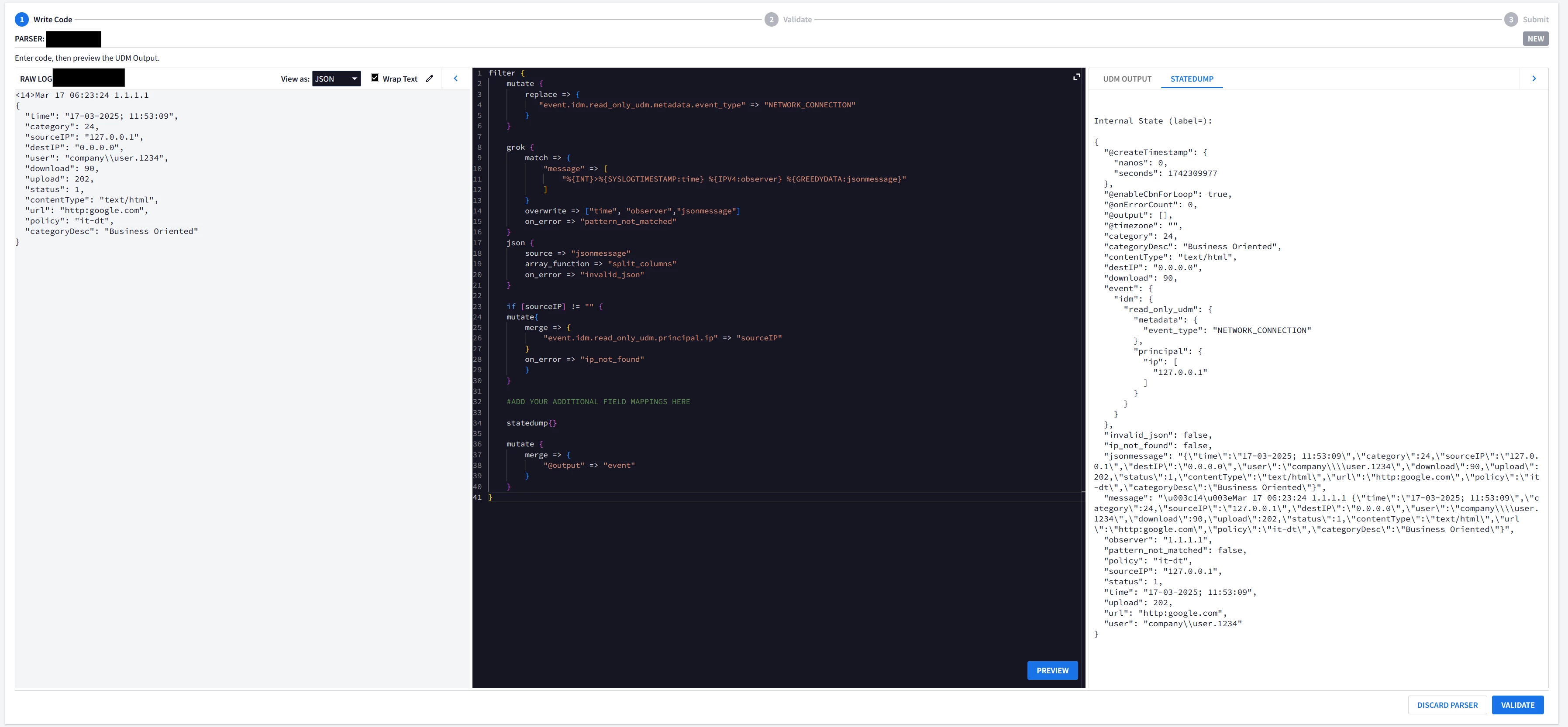

The sample log looks like this:

<14>Mar 17 06:22:24 1.1.1.1 {"time":"17-03-2025; 11:52:09","category":24,"sourceIP":"127.0.0.1","destIP":"0.0.0.0","user":"company\\\\user.1234","download":90,"upload":202,"status":1,"contentType":"text/html","url":"http:google.com","policy":"it-dt","categoryDesc":"Business Oriented"}
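
To make the structure of the line explicit: it appears to be a syslog header (priority, timestamp, host) followed by a JSON payload. Here is a rough Python sketch of that breakdown, only to illustrate the layout. It is not the parser itself, and the regex and the names `SAMPLE`, `HEADER_RE`, and `split_log` are made up for this illustration.

```python
import json
import re

# The sample line from the post, kept verbatim in a raw string so the
# backslashes in the "user" field are not altered by Python.
SAMPLE = r'''<14>Mar 17 06:22:24 1.1.1.1 {"time":"17-03-2025; 11:52:09","category":24,"sourceIP":"127.0.0.1","destIP":"0.0.0.0","user":"company\\\\user.1234","download":90,"upload":202,"status":1,"contentType":"text/html","url":"http:google.com","policy":"it-dt","categoryDesc":"Business Oriented"}'''

# Assumed layout: <priority>, syslog timestamp, host, then one JSON object.
HEADER_RE = re.compile(
    r'^<(?P<pri>\d+)>'
    r'(?P<ts>\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) '
    r'(?P<host>\S+) '
    r'(?P<body>\{.*\})$'
)


def split_log(line):
    """Split one line into (priority, timestamp, host, payload dict)."""
    m = HEADER_RE.match(line)
    if m is None:
        raise ValueError("line does not match the assumed syslog + JSON layout")
    # The payload is plain JSON, so a standard JSON decoder can read it.
    payload = json.loads(m.group("body"))
    return m.group("pri"), m.group("ts"), m.group("host"), payload


pri, ts, host, payload = split_log(SAMPLE)
print(pri, ts, host)
print(payload["user"], payload["url"])
```

So the header has to be separated from the JSON part before any JSON extraction can be applied to the payload.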

The parser that I have written for it fails with the following error:

generic::unknown: pipeline.ParseLogEntry failed: LOG_PARSING_CBN_ERROR: "generic::invalid_argument: failed to convert raw output to events: raw output is not []interface{}, instead is: string"