Hi - I'm writing SIEM documentation for my SOC and am struggling to quickly produce summaries of all the field names relevant to us, with lists of their corresponding values. In Splunk I'd use | fieldsummary, | table *, or similar options.

In SecOps SIEM UDM search I run a search on a log type, review a set of results, add columns for the fields of interest to my UDM search results, and download a CSV file of the results with those columns. Then I iterate on my log type search, adding exclusions for the common field values I found, and repeat.
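The per-column review of each downloaded CSV could be scripted to get something closer to a `fieldsummary`. Below is a minimal Python sketch, assuming the export is a plain CSV with a header row of column names. The function name `summarize_csv` and the `top_n` parameter are hypothetical, and the sketch only sees whatever rows made it into the export.

```python
import csv
from collections import Counter


def summarize_csv(path, top_n=10):
    """Summarize each column of a CSV export (assumed to have a header row).

    Returns {column: {"non_empty": int, "distinct": int, "top": [(value, count), ...]}}.
    """
    # utf-8-sig reads plain UTF-8 and also tolerates a leading byte-order mark.
    with open(path, newline="", encoding="utf-8-sig") as handle:
        reader = csv.DictReader(handle)
        # Start every header column with an empty counter so that
        # columns with no values still show up in the summary.
        counters = {name: Counter() for name in (reader.fieldnames or [])}
        for row in reader:
            for column, value in row.items():
                # Skip cells beyond the header (column is None) and empty cells.
                if column is None or not value:
                    continue
                counters[column][value] += 1

    return {
        column: {
            "non_empty": sum(counts.values()),
            "distinct": len(counts),
            "top": counts.most_common(top_n),
        }
        for column, counts in counters.items()
    }
```

Calling `summarize_csv("export.csv")` (the file name is a placeholder) returns, for each column, how many rows had a value, how many distinct values were seen, and the most frequent values with their counts.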

The pitfalls of my approach are the limits on the number of result rows and the difficulty of seeing rarer field values.

I have to iterate on the searches because SecOps SIEM limits the number of rows available in the results: 1 million rows for UDM searches and 30k rows for statistics and aggregation searches. With these limits it's difficult to see the full distribution of values for high-volume logs like firewall logs.

So I have to iteratively filter out the most common values for the fields of interest in my searches, so that I can keep reducing the result rows and stay under the UDM result row limits.

But filtering on the most common values for one field can cause me to miss uncommon values for other fields, if those values occur in the same logs as the field values I filtered out.
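One way to gauge this risk before adding an exclusion is to check, on a sample that has already been exported, which values of other fields appear only alongside the value about to be excluded. The following is a rough Python sketch under that assumption. The function name `values_lost_by_exclusion` is hypothetical, and `rows` is assumed to be a list of dicts such as those produced by `csv.DictReader`. It can only flag values present in the sample, so it is a sanity check rather than a guarantee.

```python
def values_lost_by_exclusion(rows, field, excluded_value):
    """Find values of other fields that would disappear from the sample
    if rows where `field` == `excluded_value` were filtered out.

    Returns {column: sorted list of values seen only in the excluded rows}.
    """
    seen_in_kept = {}
    seen_in_excluded = {}
    for row in rows:
        # Sort each row into the "excluded" or "kept" side of the filter.
        if row.get(field) == excluded_value:
            bucket = seen_in_excluded
        else:
            bucket = seen_in_kept
        for column, value in row.items():
            # Ignore the filtered field itself, stray cells, and empty values.
            if column is None or column == field or not value:
                continue
            bucket.setdefault(column, set()).add(value)

    lost = {}
    for column, values in seen_in_excluded.items():
        only_in_excluded = values - seen_in_kept.get(column, set())
        if only_in_excluded:
            lost[column] = sorted(only_in_excluded)
    return lost
```

An empty result suggests the exclusion hides nothing else within the sample. A non-empty result lists the values that should be recorded before the exclusion is applied.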

There clearly is a solution within SecOps SIEM as evidenced by the existence of the "UDM Lookup" tab (image)...

...but UDM search cannot search on wildcards (though I only tried * and .* ).

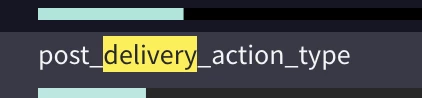

Also, UDM Lookup cannot find or display some important fields, e.g. in Gmail logs, the subfields of "additional field" or "label" types such as about.labels["post_delivery_action"].

This seems highly suboptimal. What am I missing?