Hello,

I’m looking for advice on monitoring log ingestion in Chronicle SecOps.

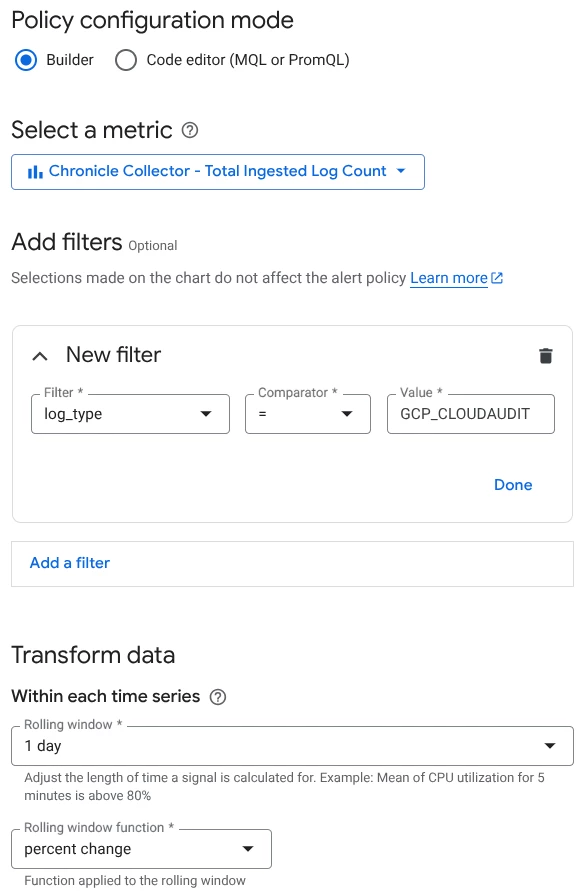

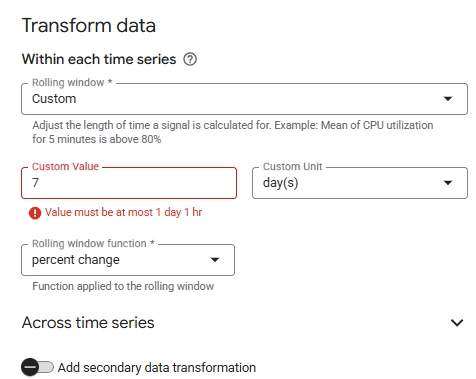

We currently manage multiple Feeds (log ingestion sources) in SecOps. Our goal is to detect ingestion failures or delays on a per-Feed basis as early as possible and trigger alerts when they occur.

At the moment, we haven’t decided on any specific implementation method. We’re considering various approaches, including built-in SecOps or Cloud Monitoring features, API integrations, or other cloud services, but we’re not sure which would be the most effective or practical.

If anyone has experience implementing Feed-level ingestion monitoring and alerting in Chronicle SecOps, I’d appreciate your recommendations, tips, or any lessons learned.

Thank you!