In modern security operations, integrating alerts and case data across platforms is essential for building a cohesive and responsive threat detection ecosystem. Google SecOps offers several methods to export alerts and cases to third-party SIEMs or SecOps platforms. Among the most preferred approaches is using the Chronicle REST API, which provides robust and flexible access to security telemetry.

However, in a recent implementation I explored an alternative that uses Google Cloud Storage (GCS) to move alerts from one organization to another, which is particularly useful when SecOps is hosted in a separate Google Cloud organization.

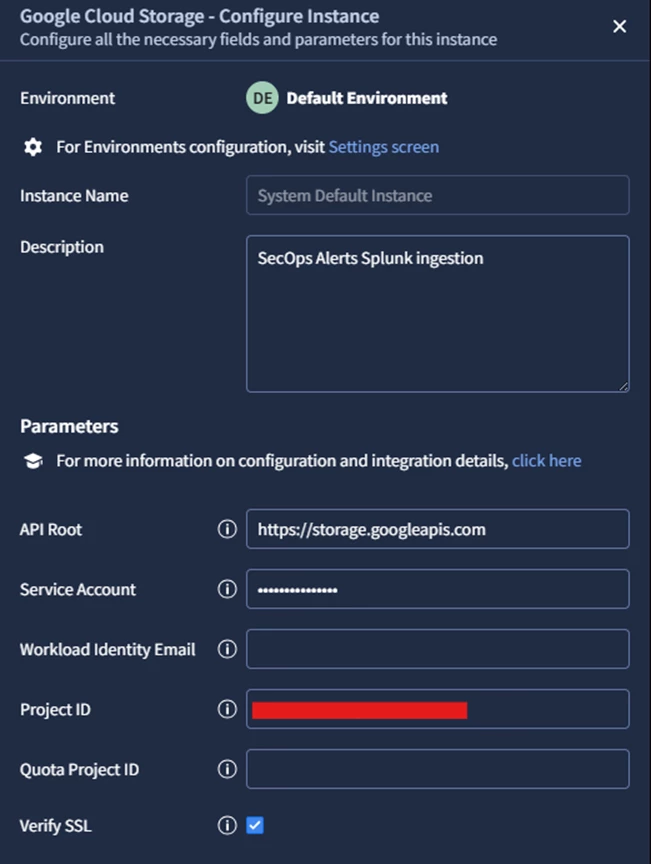

Step 1: Configure Google Cloud Storage Integration in SecOps

Google SecOps simplifies this setup through its Response Integrations. Under the integration named Google Cloud Storage, you’ll need to configure:

- API Root: https://storage.googleapis.com

- Service Account: Full JSON content of the service account with appropriate permissions

- Project ID: The GCP project where the target bucket is hosted

This configuration allows SecOps to interact with the bucket as a destination for alert data.
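Before pasting the key into the Service Account field, it can help to confirm that the JSON is complete. The following is a minimal sketch and not part of the integration: `check_service_account_json` is a hypothetical helper, and the field list assumes the standard layout of a Google service account key file.

```python
import json

# Fields found in a standard Google service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email", "token_uri")


def check_service_account_json(raw):
    """Return a list of problems found in the key JSON (empty list if none)."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level value must be a JSON object"]

    # Report every required field that is absent or empty.
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]

    # A user or external-account credential file will not work as a service account key.
    key_type = key.get("type")
    if key_type and key_type != "service_account":
        problems.append(f"unexpected type: {key_type}")
    return problems
```

An empty list means the content has the expected shape; it does not prove that the key is still valid or that the account has access to the bucket.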

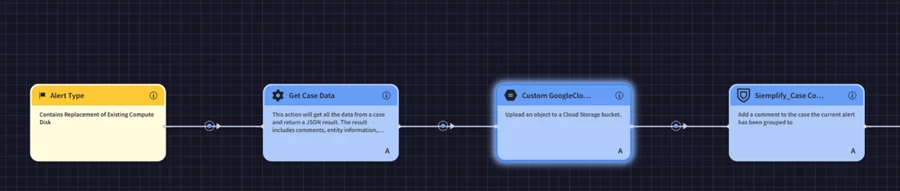

Step 2: Create a SOAR Playbook to Push Alerts to GCS

Once the integration is in place, the next step is to automate the alert ingestion using a playbook:

- Navigate to: SecOps → Response → Playbooks

- Create a New Playbook

- Add a Trigger:

- Choose "Alert Type"

- Set condition to match your alert of interest (e.g., "replacement of existing compute disk")

- Add an Action:

- Select "Get Case Data" from the Tools section to retrieve the alert's JSON payload

- Upload to Bucket:

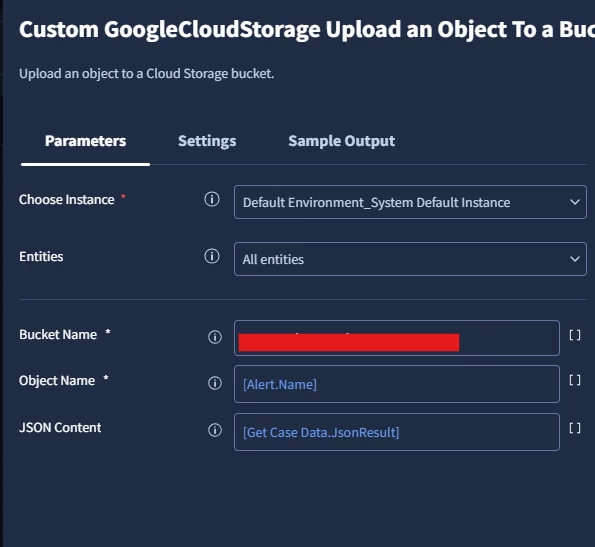

- Use the action "Upload an Object to a Bucket" from the GoogleCloudStorage integration

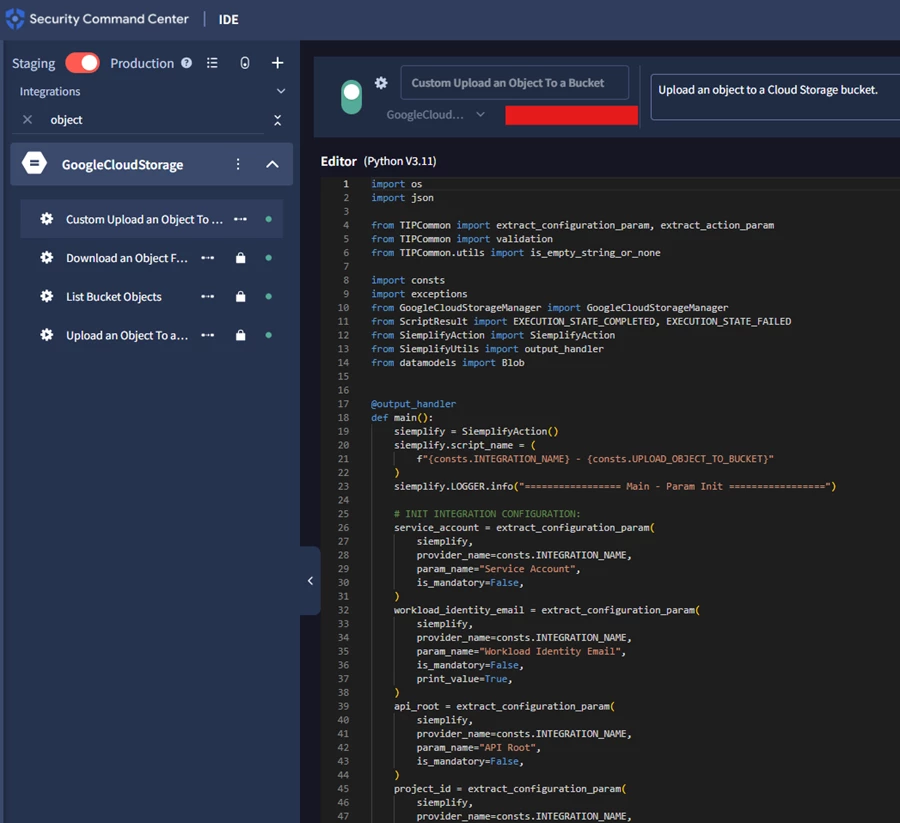

Challenge: Source File Path Requirement

At this point a blocker arises: the default upload action requires a source file path, and that path is resolved on the machine where the action script executes. The alert JSON returned by the previous playbook step does not exist there as a file, so there is no path to supply. To overcome this, I customized the “Upload object to GCP Storage” action in the IDE using Python.

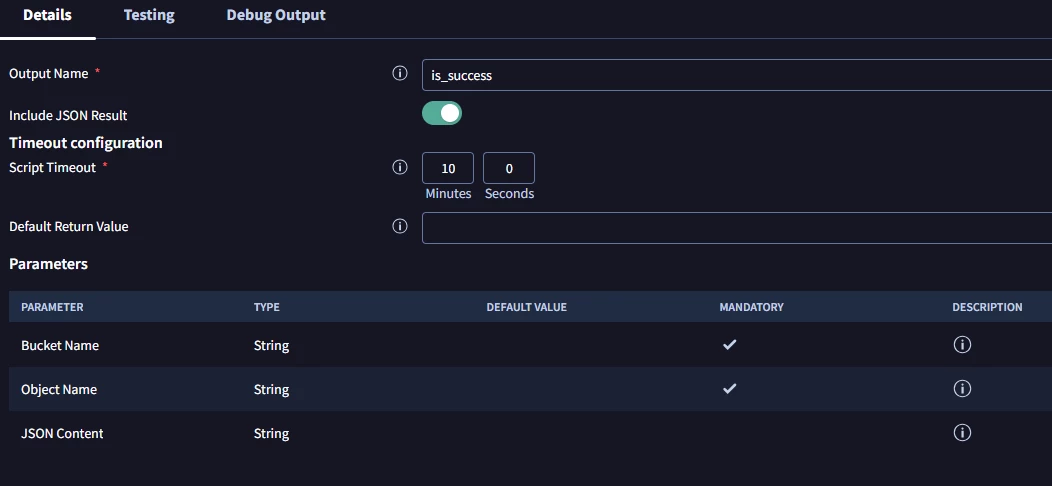

Step 3: Custom Python Action to Upload JSON Directly

Using the secops-wrapper and modifying the upload logic, I created a custom action that accepts JSON content directly instead of a file path.

Here’s a snippet of the modified Python code:

```python
import os
import json

from TIPCommon import extract_configuration_param, extract_action_param
from TIPCommon import validation
from TIPCommon.utils import is_empty_string_or_none

import consts
import exceptions
from GoogleCloudStorageManager import GoogleCloudStorageManager
from ScriptResult import EXECUTION_STATE_COMPLETED, EXECUTION_STATE_FAILED
from SiemplifyAction import SiemplifyAction
from SiemplifyUtils import output_handler
from datamodels import Blob


@output_handler
def main():
    siemplify = SiemplifyAction()
    siemplify.script_name = (
        f"{consts.INTEGRATION_NAME} - {consts.UPLOAD_OBJECT_TO_BUCKET}"
    )
    siemplify.LOGGER.info("================= Main - Param Init =================")

    # INIT INTEGRATION CONFIGURATION:
    service_account = extract_configuration_param(
        siemplify,
        provider_name=consts.INTEGRATION_NAME,
        param_name="Service Account",
        is_mandatory=False,
    )
    workload_identity_email = extract_configuration_param(
        siemplify,
        provider_name=consts.INTEGRATION_NAME,
        param_name="Workload Identity Email",
        is_mandatory=False,
        print_value=True,
    )
    api_root = extract_configuration_param(
        siemplify,
        provider_name=consts.INTEGRATION_NAME,
        param_name="API Root",
        is_mandatory=False,
    )
    project_id = extract_configuration_param(
        siemplify,
        provider_name=consts.INTEGRATION_NAME,
        param_name="Project ID",
        is_mandatory=False,
        print_value=True,
    )
    quota_project_id = extract_configuration_param(
        siemplify,
        provider_name=consts.INTEGRATION_NAME,
        param_name="Quota Project ID",
        is_mandatory=False,
        print_value=True,
    )
    verify_ssl = extract_configuration_param(
        siemplify,
        provider_name=consts.INTEGRATION_NAME,
        param_name="Verify SSL",
        input_type=bool,
        print_value=True,
    )

    bucket_name = extract_action_param(
        siemplify,
        param_name="Bucket Name",
        is_mandatory=True,
        print_value=True,
        input_type=str,
    )
    object_name = extract_action_param(
        siemplify,
        param_name="Object Name",
        is_mandatory=True,
        print_value=True,
        input_type=str,
    )
    # REPLACED: This now expects the JSON content directly as a string.
    json_content = extract_action_param(
        siemplify,
        param_name="JSON Content",
        is_mandatory=True,
        print_value=False,  # Set to False to avoid logging sensitive data
        input_type=str,
    )

    siemplify.LOGGER.info("----------------- Main - Started -----------------")
    result_value = False
    status = EXECUTION_STATE_COMPLETED

    try:
        validator = validation.ParameterValidator(siemplify)
        if not is_empty_string_or_none(service_account):
            service_account = validator.validate_json(
                param_name="Service Account",
                json_string=service_account,
                print_value=False,
            )
        if not is_empty_string_or_none(workload_identity_email):
            workload_identity_email = validator.validate_email(
                param_name="Workload Identity Email",
                email=workload_identity_email,
                print_value=True,
            )

        manager = GoogleCloudStorageManager(
            service_account=service_account,
            workload_identity_email=workload_identity_email,
            api_root=api_root,
            project_id=project_id,
            quota_project_id=quota_project_id,
            verify_ssl=verify_ssl,
            logger=siemplify.logger,
        )

        try:
            siemplify.LOGGER.info(
                f"Fetching bucket with name {bucket_name} from "
                f"{consts.INTEGRATION_DISPLAY_NAME}"
            )
            bucket = manager.get_bucket(bucket_name=bucket_name)
            siemplify.LOGGER.info(
                f"Successfully fetched bucket from with name {bucket_name} from "
                f"{consts.INTEGRATION_DISPLAY_NAME}"
            )
        except (
            exceptions.GoogleCloudStorageNotFoundError,
            exceptions.GoogleCloudStorageForbiddenError,
            exceptions.GoogleCloudStorageBadRequestError,
        ) as exc:
            raise exceptions.GoogleCloudStorageNotFoundError(
                f"Bucket {bucket_name} Not found."
            ) from exc

        bucket_google_obj = bucket.bucket_data
        blob = bucket_google_obj.blob(object_name)

        siemplify.LOGGER.info(f"Uploading JSON content to '{object_name}'")
        # MODIFIED: Uploading the JSON string directly.
        # The .upload_from_string() method is part of the google-cloud-storage library
        # and is ideal for this use case.
        blob.upload_from_string(json_content, content_type='application/json')
        siemplify.LOGGER.info(
            f"Successfully uploaded JSON content to '{object_name}'"
        )

        blob.reload()
        created_blob = Blob(
            id=blob.id, name=blob.name, md5_hash=blob.md5_hash, object_path=blob.path
        )
        siemplify.result.add_result_json(created_blob.as_json())
        result_value = True
        output_message = (
            f"Successfully uploaded JSON content to bucket: {bucket_name} "
            f"as object: {object_name}"
        )

    except (
        exceptions.GoogleCloudStorageNotFoundError,
        exceptions.GoogleCloudStorageValidationException,
    ) as error:
        output_message = (
            f"Action wasn't able to upload JSON content to "
            f"{consts.INTEGRATION_DISPLAY_NAME}. Reason: {error}"
        )
        siemplify.LOGGER.error(
            f"Action wasn't able to upload '{object_name}' to "
            f"{consts.INTEGRATION_DISPLAY_NAME}. Reason: {error}"
        )
        siemplify.LOGGER.exception(error)

    except Exception as error:
        status = EXECUTION_STATE_FAILED
        output_message = f"Error executing action '{consts.UPLOAD_OBJECT_TO_BUCKET}'. Reason: {error}"
        siemplify.LOGGER.error(output_message)
        siemplify.LOGGER.exception(error)

    siemplify.LOGGER.info("----------------- Main - Finished -----------------")
    siemplify.LOGGER.info(f"Status: {status}:")
    siemplify.LOGGER.info(f"Result Value: {result_value}")
    siemplify.LOGGER.info(f"Output Message: {output_message}")
    siemplify.end(output_message, result_value, status)


if __name__ == "__main__":
    main()
```
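The essential change is small and can be looked at in isolation. The sketch below is a simplified illustration rather than the action itself: `upload_json_text` is a hypothetical helper, and it adds a `json.loads` check that the action above does not perform, so malformed content is rejected before anything is written. It accepts any object that exposes `upload_from_string`, the method provided by `google.cloud.storage.Blob`.

```python
import json


def upload_json_text(blob, json_text):
    """Validate json_text and upload it through blob.upload_from_string.

    Returns the number of bytes sent. Raises ValueError if json_text is not valid JSON.
    """
    try:
        parsed = json.loads(json_text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"JSON Content is not valid JSON: {exc.msg}") from exc

    # Re-serialize so the stored object is compact, well-formed JSON.
    payload = json.dumps(parsed, separators=(",", ":"), ensure_ascii=False)

    # No local file is involved: the string is sent as the object body.
    blob.upload_from_string(payload, content_type="application/json")
    return len(payload.encode("utf-8"))
```

In the custom action, `blob` corresponds to the object returned by `bucket_google_obj.blob(object_name)`. Whether to re-serialize or upload the original string unchanged is a design choice; uploading unchanged preserves the exact text produced by the playbook.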

In the playbook, I replaced the "Source File Path" field with "JSON Content", and passed [Get Case Data.JsonResult] as the value.

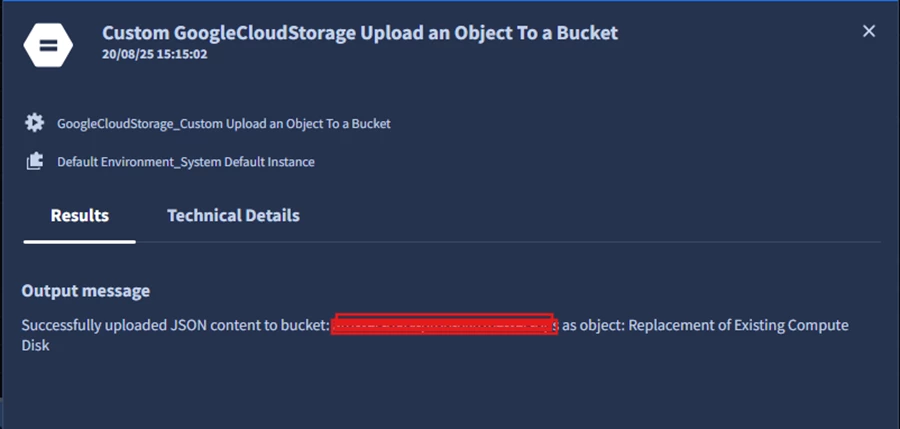
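Each playbook run writes one object, and an upload to an existing object name replaces its contents, so the Object Name value should differ per alert. A minimal sketch of one possible naming scheme follows. `build_object_name` is a hypothetical helper and the date-partitioned `alerts/` prefix is an assumption, not something the integration provides.

```python
import re
from datetime import datetime, timezone


def build_object_name(case_id, alert_name, when=None):
    """Build a date-partitioned object name for one alert."""
    when = when or datetime.now(timezone.utc)
    # Collapse anything that is not a letter or digit into a single hyphen.
    slug = re.sub(r"[^A-Za-z0-9]+", "-", alert_name).strip("-").lower() or "alert"
    return f"alerts/{when:%Y/%m/%d}/case-{case_id}_{slug}.json"
```

Two uploads for the same case and alert name on the same day would still collide under this scheme; adding a timestamp or alert identifier to the name avoids that.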

Final Step: Test and Validate

After saving the playbook and running a simulation, the alert was successfully pushed to the GCS bucket as an object. If you encounter errors, ensure:

- The service account has Storage Admin permissions

- VPC peering is correctly configured between organizations
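To confirm from the receiving side that the uploaded object is usable, it can be read back and parsed. This is a minimal sketch, assuming a hypothetical `verify_json_object` helper. It relies only on the `exists()` and `download_as_text()` methods that `google.cloud.storage.Blob` exposes, so any object with those two methods works. The bucket and object names in the trailing comment are placeholders.

```python
import json


def verify_json_object(blob):
    """Return the parsed JSON stored in blob, or raise if it is missing or malformed."""
    if not blob.exists():
        raise FileNotFoundError("object not found in bucket")
    text = blob.download_as_text()
    # json.loads raises json.JSONDecodeError if the stored content is not valid JSON.
    return json.loads(text)


# Example with the real client (requires google-cloud-storage and credentials):
#   from google.cloud import storage
#   blob = storage.Client().bucket("my-bucket").blob("alerts/example.json")
#   alert = verify_json_object(blob)
```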

Conclusion

This approach offers a flexible and scalable way to ingest Google SecOps alerts into external systems, especially when dealing with multi-org setups. By customizing the SOAR playbook and bypassing local file dependencies, you can streamline alert ingestion and enhance cross-platform visibility.