Authors: Kashif Muhammad and Kyle Martinez

Security Operations Centers (SOCs) are often inundated with a relentless flood of security alerts. While alerts are a crucial component of any defense strategy, their sheer volume can quickly lead to "alert fatigue." Analysts spend valuable time sifting through numerous notifications, many of which turn out to be false positives, benign activity, or duplicates. This noise not only drains resources but also increases the risk of missing genuinely critical threats. Common culprits for this alert deluge include misconfigured systems, overly broad detection rule logic, and planned maintenance activities.

The key to reclaiming analyst focus and improving detection efficacy lies in intelligent alert suppression. This blog post details a solution for implementing analyst-controlled alert suppression within Google SecOps by leveraging playbooks and data tables.

The key components of the solution at a high level

This solution empowers security teams to automatically close or manage alerts based on predefined criteria stored and managed within Google SecOps data tables. The core components are:

- Google SecOps Data Tables: These tables act as a dynamic suppression list, storing information about alerts (i.e., specific entities within alerts from particular SIEM rules) that should be temporarily ignored or auto-closed.

- Google SecOps SOAR Playbooks: Automated workflows that:

  - Check incoming alerts against the suppression data table.

  - Allow analysts to easily add new suppression rules to the data table.

- Google SecOps SOAR Jobs:

  - A scheduled job that removes expired alert suppression entries from the data table.

How does alert suppression work in SOAR playbooks?

- Alert Ingestion: A new alert enters the Google SecOps SOAR platform.

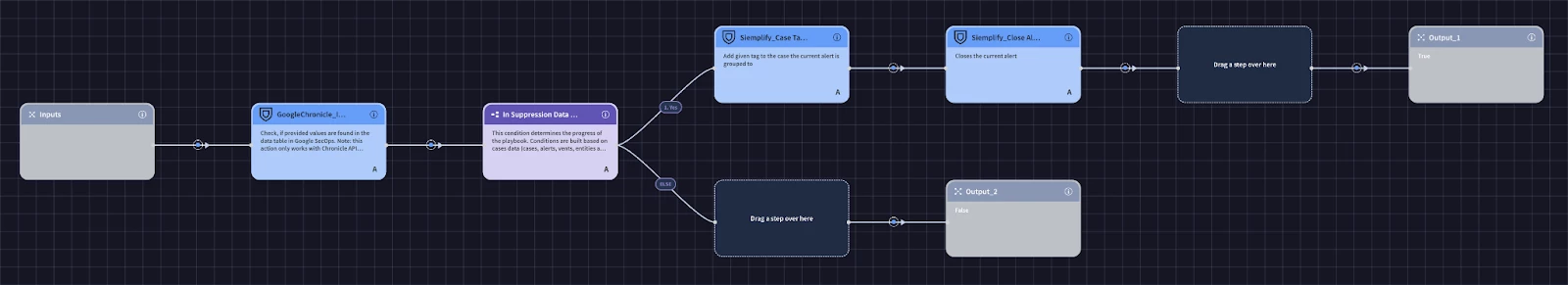

- Trigger Playbook: The alert suppression playbook (or a block within a broader playbook) is triggered (this block will need to be included in every alert playbook).

- 'Check for Suppression' Block:

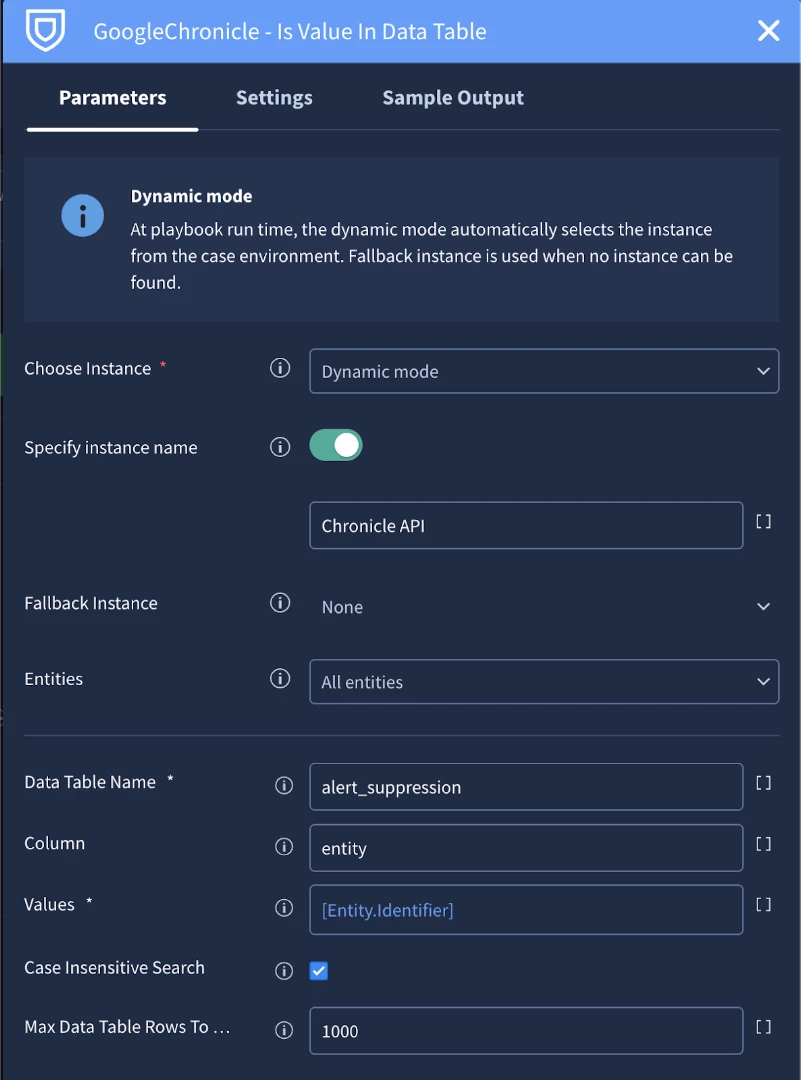

- The block queries the "Alert Suppression" data table using the Google Chronicle integration.

- It checks if any entity identifiers from the current alert (e.g., IP address, hostname, username) exist in the suppression table.

- It also verifies if the rule_id from the alert matches a rule_id in the suppression table for that entity.

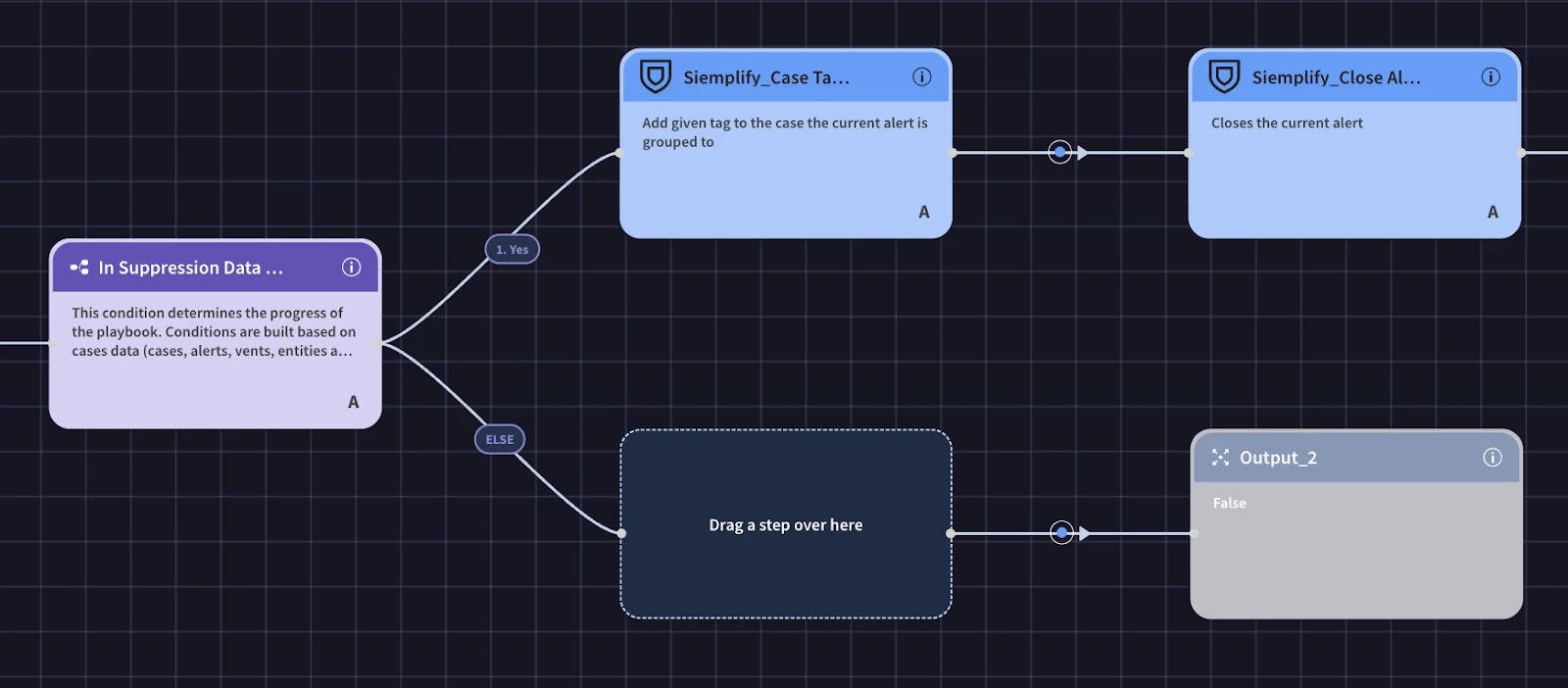

- Conditional Logic (Suppression Match Found?):

- IF YES (Alert matches an active suppression rule):

- The playbook adds an "Alert Suppression" tag to the case.

- The case is automatically closed

- IF NO (Alert does not match any suppression rule):

- The alert proceeds through the standard investigation and response workflow.

- An analyst might manually investigate this alert.

- IF YES (Alert matches an active suppression rule):
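The decision flow above can be sketched in plain Python to make the matching rule concrete. This is a minimal, illustrative sketch, not the playbook itself. It assumes the following:

- Suppression rows are dicts keyed by the table's column names (entity, rule_id, expiration_time).
- expiration_time holds Unix epoch seconds.
- The `Alert` class and the returned action labels are hypothetical stand-ins for the playbook's tag and close actions.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Alert:
    # Hypothetical, simplified alert: the rule that fired and its entity identifiers.
    rule_id: str
    entities: list = field(default_factory=list)


def find_suppression(alert, suppression_rows, now=None):
    """Return the first active row matching the alert, or None.

    A row matches when its entity is one of the alert's entities AND its
    rule_id equals the alert's rule_id. Rows whose expiration_time
    (assumed: Unix epoch seconds) has already passed are not active.
    """
    now = int(time.time()) if now is None else now
    alert_entities = set(alert.entities)
    for row in suppression_rows:
        if (
            row["entity"] in alert_entities
            and row["rule_id"] == alert.rule_id
            and int(row["expiration_time"]) >= now
        ):
            return row
    return None


def handle_alert(alert, suppression_rows, now=None):
    """Mirror the playbook branch: tag and close on a match, otherwise continue."""
    if find_suppression(alert, suppression_rows, now) is not None:
        return ["add_tag:Alert Suppression", "close_case"]
    return ["continue_standard_workflow"]


rows = [{"entity": "10.0.0.5", "rule_id": "ru_123", "expiration_time": "2000"}]
print(handle_alert(Alert("ru_123", ["10.0.0.5", "host-a"]), rows, now=1000))
# ['add_tag:Alert Suppression', 'close_case']
```

The code matches on the entity and rule_id pair rather than on the entity alone. As a result, a suppressed host still raises alerts for every other detection rule.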

How do analysts add alerts to suppression tables?

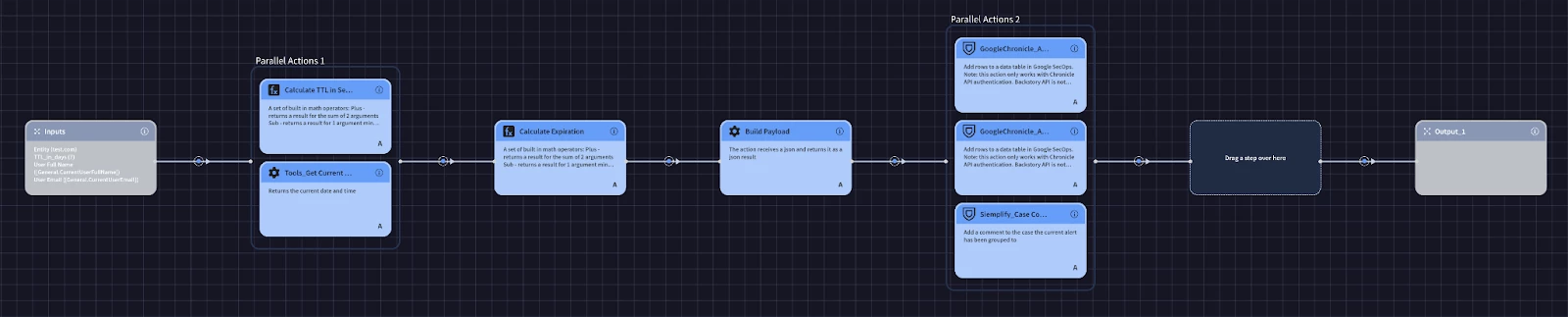

- Manual Suppression Option ('Add to Alert Suppression' Block):

- During a case investigation, if an analyst determines an alert is a false positive and should be suppressed in the future, they can manually trigger the 'Add to Alert Suppression' block from the alert view.

- This block prompts the analyst for details like the entity to suppress, the duration (TTL), and their identity.

- The block then writes a new entry to the "Alert Suppression" data table (and potentially a historical tracking table). The purpose of the tracking table is to maintain an audit trail of which entities/rule combinations were suppressed, and for what time periods (since the “active” suppression table is constantly updated, and entities added/removed, we cannot rely on it as a the authoritative source)

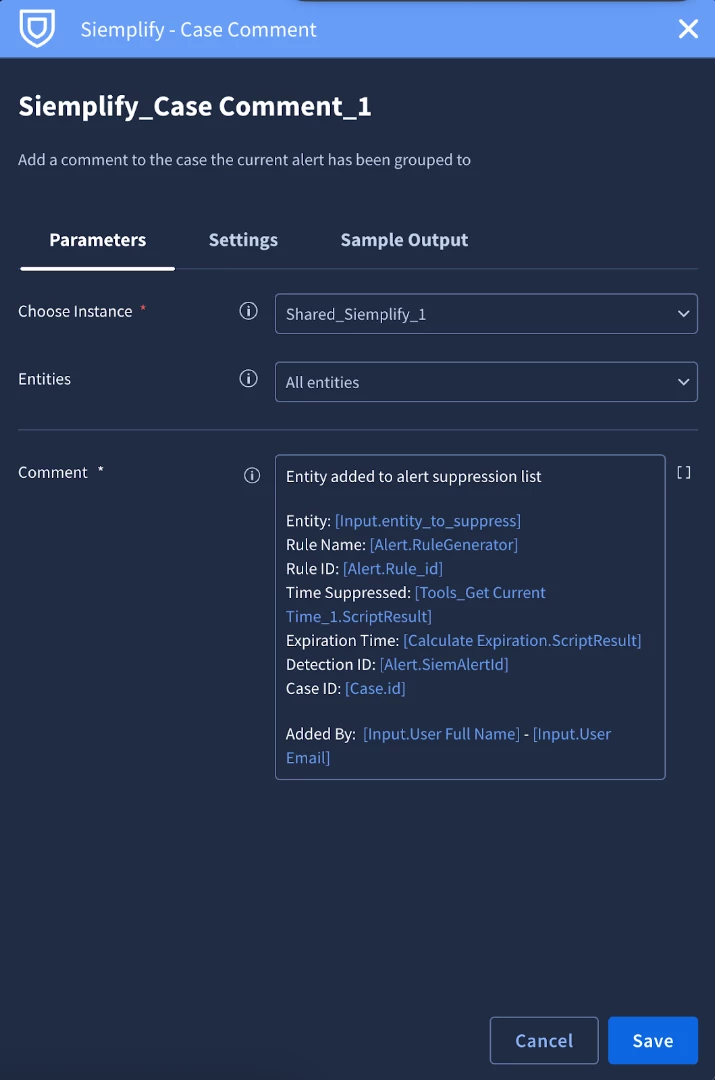

- A comment is added to the current case indicating the suppression action.
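The active table only holds current suppressions. Audit questions such as "was this entity suppressed for this rule at a given time?" therefore have to be answered from the tracking table. The following is a minimal sketch with these assumptions:

- The historical table reuses the active table's entity, rule_id, time_added, and expiration_time columns.
- Timestamps are stored as Unix epoch seconds.
- The function names are hypothetical.

```python
def suppression_windows(history_rows, entity, rule_id):
    """Return sorted (time_added, expiration_time) windows for one entity/rule pair."""
    return sorted(
        (int(r["time_added"]), int(r["expiration_time"]))
        for r in history_rows
        if r["entity"] == entity and r["rule_id"] == rule_id
    )


def was_suppressed_at(history_rows, entity, rule_id, ts):
    """True if any recorded suppression window for the pair covers timestamp ts."""
    return any(
        start <= ts <= end
        for start, end in suppression_windows(history_rows, entity, rule_id)
    )


history = [
    {"entity": "host-a", "rule_id": "ru_1", "time_added": "100", "expiration_time": "200"},
    {"entity": "host-a", "rule_id": "ru_1", "time_added": "500", "expiration_time": "900"},
    {"entity": "host-a", "rule_id": "ru_2", "time_added": "100", "expiration_time": "900"},
]
print(suppression_windows(history, "host-a", "ru_1"))  # [(100, 200), (500, 900)]
print(was_suppressed_at(history, "host-a", "ru_1", 300))  # False
```

The expiration job deletes rows from the active table, but the windows above remain queryable. That is why the tracking table, not the active table, serves as the audit record.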

How are alerts removed from suppression?

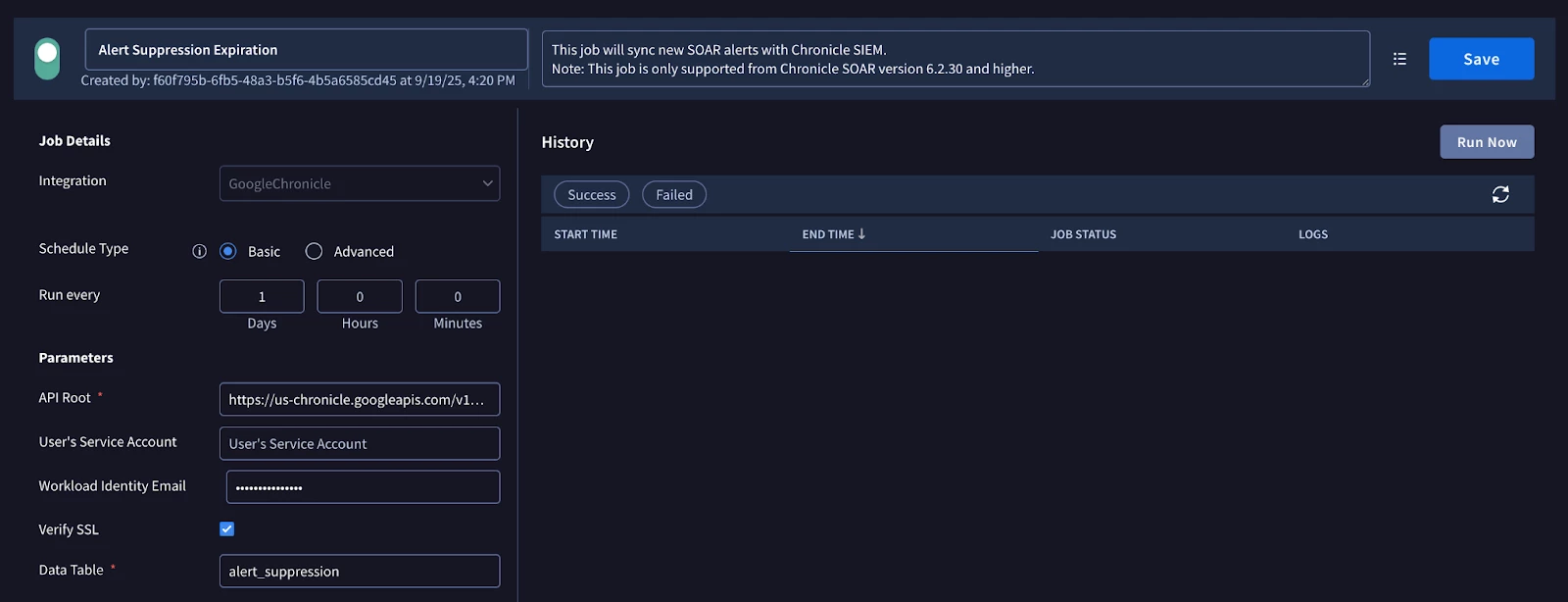

- A separate, scheduled Suppression Expiration job periodically queries the "Alert Suppression" data table.

- It compares the expiration_time of each entry with the current time.

- If an entry has expired, the job deletes it from the data table, ensuring suppressions are not permanent unless intended.

- A summary of deleted entries can be emailed to designated personnel.
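The expiration check and the optional summary can be prototyped offline before wiring them into a job. This sketch uses the same comparison as the expiration job script in this post: a row expires once expiration_time is less than the current time. Its assumptions are:

- Timestamps are Unix epoch seconds.
- Rows are dicts keyed by column name.
- The function names are hypothetical.

Actually sending the email (for example, through an email integration action) is outside this sketch.

```python
import time


def split_expired(rows, now=None):
    """Split rows into (expired, active); a row expires once expiration_time < now."""
    now = int(time.time()) if now is None else now
    expired, active = [], []
    for row in rows:
        (expired if int(row["expiration_time"]) < now else active).append(row)
    return expired, active


def expiration_summary(expired):
    """Plain-text body for a notification listing the removed suppressions."""
    if not expired:
        return "No alert suppression entries expired."
    lines = [f"Expired alert suppression entries: {len(expired)}"]
    for row in expired:
        lines.append(
            f"- entity={row['entity']} rule_name={row['rule_name']} case_id={row['case_id']}"
        )
    return "\n".join(lines)


rows = [
    {"entity": "10.0.0.5", "rule_name": "Brute Force", "case_id": "42", "expiration_time": "100"},
    {"entity": "host-a", "rule_name": "Rare Process", "case_id": "43", "expiration_time": "900"},
]
expired, active = split_expired(rows, now=500)
print(expiration_summary(expired))
```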

Technical Deep Dive

Let's break down the implementation steps:

1. Creating the Data Tables:

Data tables are a powerful feature in Google SecOps that allow you to bring your own data into the platform for correlation and enrichment. For this solution, you'll typically need at least two:

- Alert Suppression Table: This is the primary table for active suppressions. Its columns are:

  - entity (STRING): The identifier to suppress (e.g., IP, hostname, user).

  - rule_name (STRING): The name of the SIEM detection rule generating the alert.

  - rule_id (STRING): The unique ID of the detection rule.

  - time_added (TIMESTAMP): When the suppression was added.

  - expiration_time (TIMESTAMP): When the suppression should automatically be removed.

  - detection_id (STRING): The ID of the detection that led to the suppression.

  - case_id (STRING): The case ID associated with the initial suppression.

- Historical Suppression Tracking Table: Maintains an audit trail of all suppressions, even after they expire. This table can use a similar schema, perhaps with additional fields such as "suppressed_by_user" or "reason".

You can create and manage these tables via the Google SecOps UI under "Investigation > Data tables" or using the Chronicle API. Refer to the Google Cloud documentation on Data Tables and the community blog post on Getting Started with Data Tables for detailed instructions on creation, data import (e.g., via CSV), and defining column types.

2. Installing and Configuring the Google Chronicle Integration:

To enable your SOAR playbooks to interact with these data tables, you’ll need to ensure the Google Chronicle integration is configured to use the Chronicle API.

3. Utilizing Playbook Blocks:

There are two key playbook blocks:

- 'Check for Suppression': This block will be the workhorse of your automated suppression.

- Functionality: It takes alert details (like entity identifiers and rule ID) as input. It then uses an action from the Google Chronicle integration to query the "Alert Suppression Table." If a matching and active suppression entry is found, it can trigger actions like adding a tag to the case and closing it.

- Usage: This block should be added to the beginning of any playbook where you want to check for suppressions before proceeding with further investigation or enrichment.

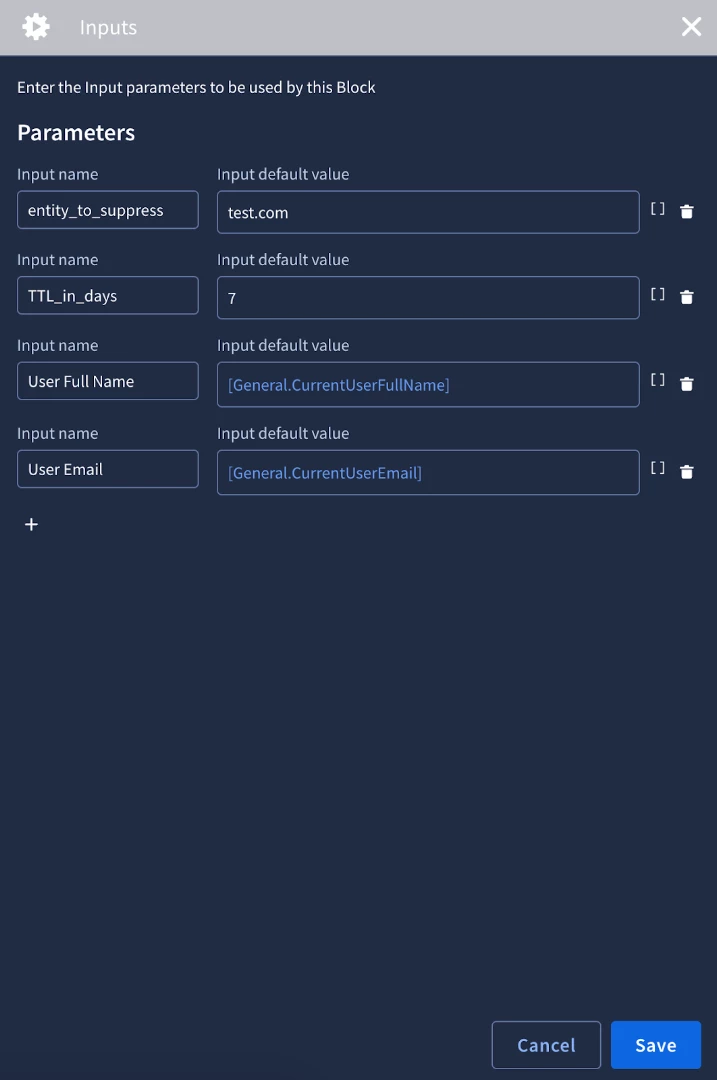

- 'Add to Alert Suppression': This block facilitates manual suppression by analysts.

- Functionality: It will prompt the user for:

- The entity to suppress.

- The TTL_in_days (Time To Live) for how long the suppression should be active. The block will then calculate the expiration_time.

- User details (full name, email) for auditing who initiated the suppression.

- It then constructs a new row and uses an action from the custom data table integration to write this row to both the "Alert Suppression Table" and the "Historical Suppression Tracking Table."

- It should also add a comment to the current case detailing the suppression action.

- Usage: This block can be run manually (from Alert View) by an analyst on an alert they've identified as a false positive or otherwise suitable for suppression.

- Functionality: It will prompt the user for:

4. Implementing the Alert Suppression Expiration Job:

To ensure suppressions don't last indefinitely without review, a cleanup mechanism is essential.

- Functionality:

  - This job queries all rows in the "Alert Suppression Table."

  - For each row, it compares the expiration_time with the current timestamp.

  - If current_time > expiration_time, the job deletes the row from the "Alert Suppression Table."

Example script:

```python
import time

from SiemplifyJob import SiemplifyJob
from SiemplifyUtils import output_handler
from TIPCommon.extraction import extract_job_param
from GoogleChronicleManagerV2 import GoogleChronicleManagerV2


@output_handler
def main():
    siemplify = SiemplifyJob()
    siemplify.script_name = "Alert Suppression Expiration"
    siemplify.LOGGER.info("--------------- JOB STARTED ---------------")

    # Job parameters, configured when the job is scheduled.
    api_root = extract_job_param(
        siemplify=siemplify, param_name="API Root", is_mandatory=True, print_value=True
    )
    creds = extract_job_param(
        siemplify=siemplify,
        param_name="User's Service Account",
        print_value=False,
        remove_whitespaces=False,
    )
    workload_identity_email = extract_job_param(
        siemplify=siemplify,
        param_name="Workload Identity Email",
        print_value=False,
    )
    verify_ssl = extract_job_param(
        siemplify=siemplify,
        param_name="Verify SSL",
        default_value=True,
        input_type=bool,
        print_value=True,
    )
    data_table_name = extract_job_param(
        siemplify=siemplify,
        param_name="Data Table",
        default_value="alert_suppression",
        input_type=str,
        print_value=True,
    )

    siemplify.LOGGER.info("----------------- Main - Started -----------------")
    try:
        # Connect to the Chronicle API using the job's credentials.
        manager = GoogleChronicleManagerV2.create_manager_instance(
            user_service_account=creds,
            workload_identity_email=workload_identity_email,
            chronicle_soar=siemplify,
            api_root=api_root,
            verify_ssl=verify_ssl,
        )
        siemplify.LOGGER.info("--- Alert Suppression Cleanup Job ---")

        # Fetch the suppression table together with all of its rows.
        data_table = manager.data_table_details(
            data_table_identifier=data_table_name,
            expanded_rows=True,
            action_name="GetDataTables",
        ).to_json()

        # expiration_time is compared as Unix epoch seconds.
        current_time = int(time.time())
        removed = 0
        for row in data_table.get("rows") or []:
            # The row ID is the last segment of the row's resource name.
            row_id = row["name"].split("/")[-1]
            try:
                expiration_time = int(row["values"]["expiration_time"])
            except (KeyError, TypeError, ValueError):
                siemplify.LOGGER.error(f"Row '{row_id}' has no valid expiration_time; skipping")
                continue
            if expiration_time < current_time:
                try:
                    manager.delete_data_table_row(data_table_name, row_id)
                    removed += 1
                    siemplify.LOGGER.info(f"Successfully removed row '{row_id}'")
                except Exception as e:
                    siemplify.LOGGER.error(f"Failed to remove row '{row_id}'. Error: {e}")

        siemplify.LOGGER.info(f"Removed {removed} expired row(s)")
        siemplify.LOGGER.info("--------------- JOB FINISHED ---------------")
    except Exception as error:
        siemplify.LOGGER.error(f"Got exception on main handler. Error: {error}")
        siemplify.LOGGER.exception(error)
        raise

    siemplify.end_script()


if __name__ == "__main__":
    main()
```

- Configuration:

  - Navigate to "Response > Jobs Scheduler" (or similar) in Google SecOps SOAR.

  - Create a new job and select the "Alert Suppression Expiration" job built from the script above.

  - Schedule the job to run at a suitable interval (e.g., daily).

  - Configure the job parameters: API Root, User's Service Account (or Workload Identity Email), Verify SSL, and Data Table.

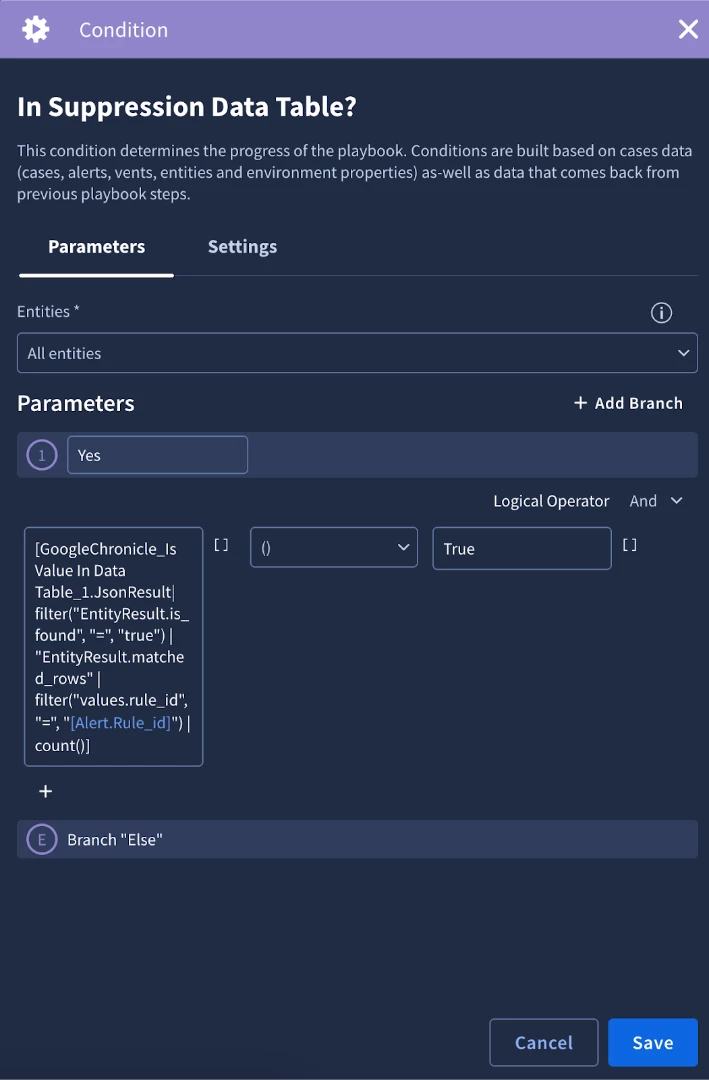

How Checking for Suppression Works (Inside the ‘Check for Suppression’ Block):

- The first action is the 'Is Value In Data Table' action from the Google Chronicle integration. It checks whether any of the entities from the current alert are in the data table.

- Next, a condition uses the following placeholder to check whether an entity was found in the data table with the same rule ID as the current alert:

[GoogleChronicle_Is Value In Data Table_1.JsonResult| filter("EntityResult.is_found", "=", "true") | "EntityResult.matched_rows" | filter("values.rule_id", "=", "[Alert.Rule_id]") | count()]

- If the condition evaluates to true (that is, at least one matching row is counted), the block adds the tag 'Alert Suppressed' and closes the alert. Otherwise, the block completes and the alert is not suppressed.
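When debugging the condition, it can help to see the placeholder pipeline written out step by step in Python. The sketch's JSON shape is an assumption inferred only from the paths the placeholder references: a list of per-entity objects with EntityResult.is_found and EntityResult.matched_rows, where each row carries values.rule_id. The real action output may differ by integration version, so inspect the action's JSON result before relying on it. The function name is hypothetical.

```python
def count_rule_matches(json_result, alert_rule_id):
    """Python equivalent of the 'Check for Suppression' placeholder pipeline."""
    # filter("EntityResult.is_found", "=", "true"): keep entities found in the table.
    found = [
        item for item in json_result
        if str(item["EntityResult"]["is_found"]).lower() == "true"
    ]
    # "EntityResult.matched_rows": flatten the matched rows of those entities.
    matched_rows = [
        row for item in found for row in item["EntityResult"].get("matched_rows", [])
    ]
    # filter("values.rule_id", "=", "[Alert.Rule_id]"): keep rows for this alert's rule.
    same_rule = [row for row in matched_rows if row["values"].get("rule_id") == alert_rule_id]
    # count(): assumed here that any count above zero means "suppress".
    return len(same_rule)


json_result = [
    {"EntityResult": {"is_found": True, "matched_rows": [
        {"values": {"entity": "10.0.0.5", "rule_id": "ru_123"}},
        {"values": {"entity": "10.0.0.5", "rule_id": "ru_456"}},
    ]}},
    {"EntityResult": {"is_found": False, "matched_rows": []}},
]
print(count_rule_matches(json_result, "ru_123"))  # 1
```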

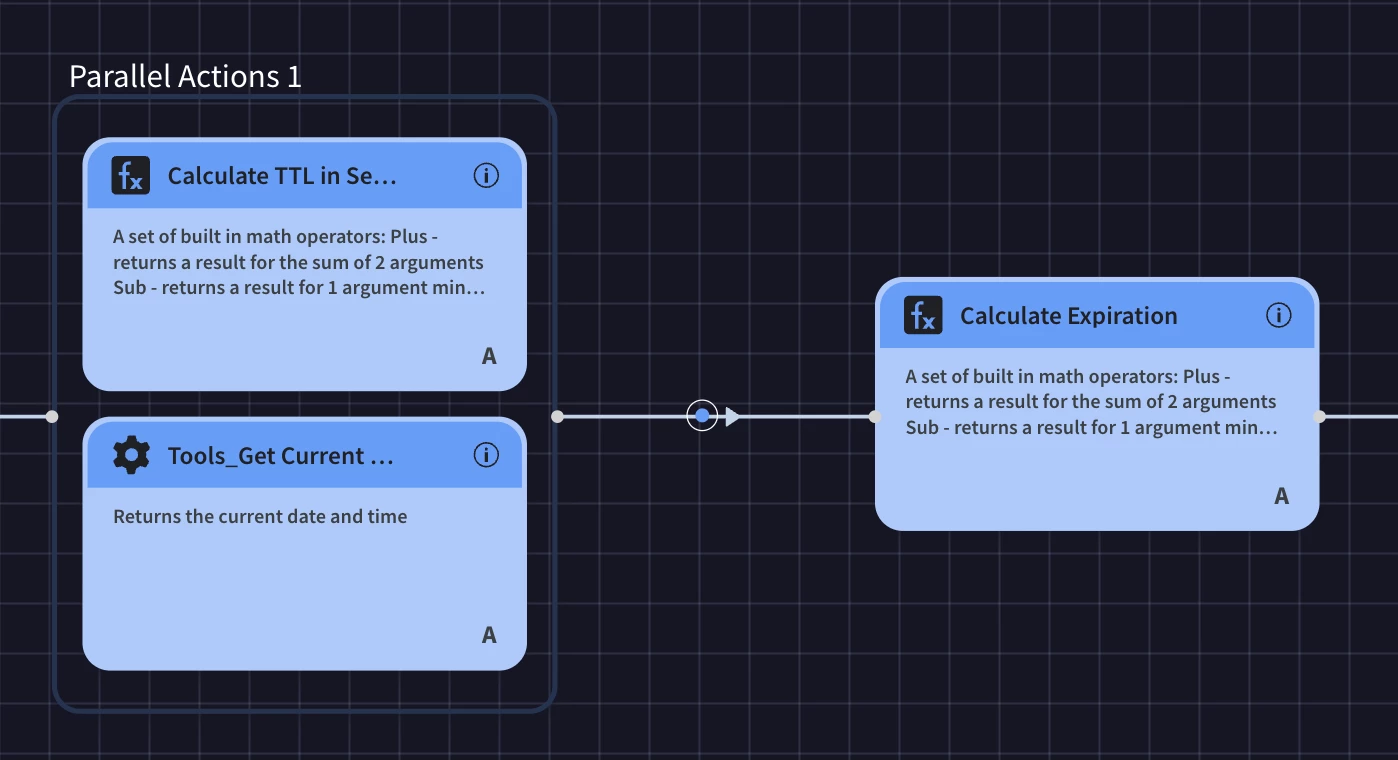

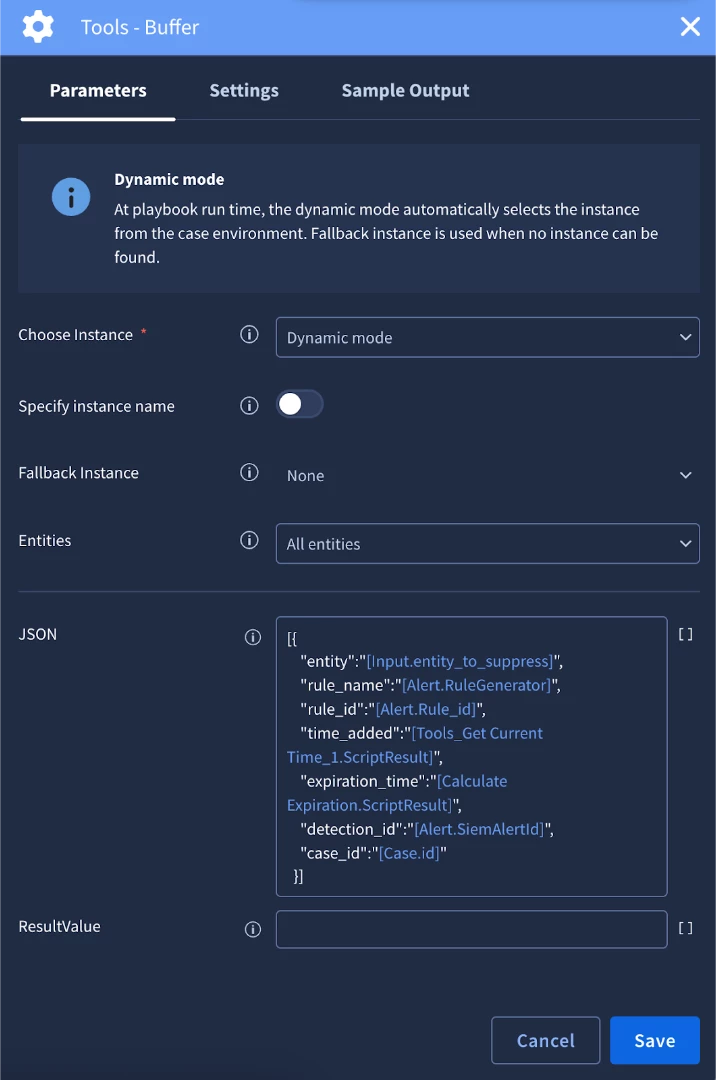

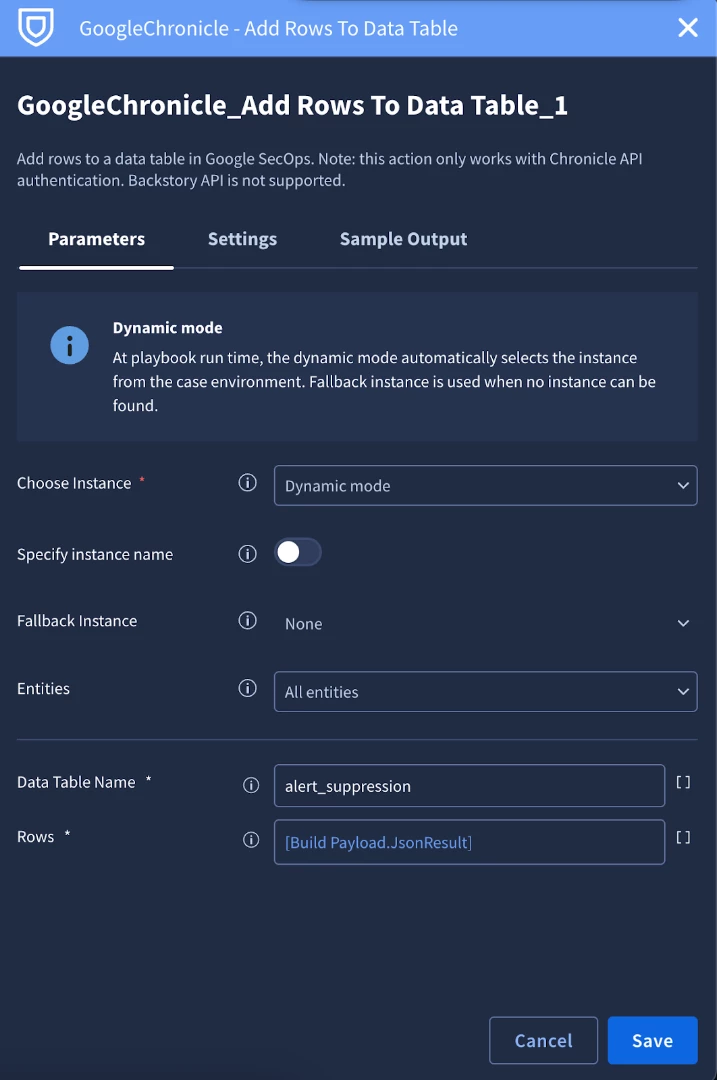

How Adding to the Data Table Works (Inside the 'Add to Alert Suppression' Block):

- The block receives inputs: entity_to_suppress, TTL_in_days, user_full_name, user_email.

- It captures other relevant details from the current alert/case: rule_name, rule_id, detection_id, case_id.

- It calculates time_added (current timestamp) and expiration_time (current timestamp + TTL_in_days).

- It builds a payload (e.g., a JSON object) representing the new row with all these fields.

- It uses the 'Add Rows to Data Table' action from the Google Chronicle integration to insert this payload as a new row into the "Alert Suppression Table."

- It can also insert a similar row into the "Historical Suppression Tracking Table."

- It adds a comment to the case.
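The steps above can be sketched as a small payload builder. This is a minimal illustration, not the block's actual implementation, and it assumes the following:

- Column names come from the schema described earlier.
- Timestamps are stored as epoch-second strings, which is how the expiration job parses expiration_time.
- The history row adds suppressed_by_user and a hypothetical suppressed_by_email column.

The exact input format expected by 'Add Rows to Data Table' is not shown in this post, so adapt the dicts to what the action requires.

```python
import time
from datetime import datetime, timezone

SECONDS_PER_DAY = 24 * 60 * 60


def build_suppression_rows(entity_to_suppress, ttl_in_days, user_full_name, user_email,
                           rule_name, rule_id, detection_id, case_id, now=None):
    """Return (active_row, history_row) dicts keyed by data table column name."""
    if ttl_in_days <= 0:
        raise ValueError("TTL_in_days must be a positive number of days")
    time_added = int(time.time()) if now is None else int(now)
    expiration_time = time_added + int(ttl_in_days * SECONDS_PER_DAY)
    active_row = {
        "entity": entity_to_suppress,
        "rule_name": rule_name,
        "rule_id": rule_id,
        # Epoch-second strings, matching how the expiration job parses them.
        "time_added": str(time_added),
        "expiration_time": str(expiration_time),
        "detection_id": detection_id,
        "case_id": case_id,
    }
    # Hypothetical audit columns for the historical tracking table.
    history_row = dict(active_row, suppressed_by_user=user_full_name,
                       suppressed_by_email=user_email)
    return active_row, history_row


def suppression_comment(row, user_full_name):
    """Case comment describing the suppression, with the expiry shown in UTC."""
    expires = datetime.fromtimestamp(int(row["expiration_time"]), tz=timezone.utc)
    return (
        f"{user_full_name} suppressed entity '{row['entity']}' for rule "
        f"'{row['rule_name']}' until {expires.isoformat()}."
    )


active, history = build_suppression_rows(
    "10.0.0.5", 7, "Jane Doe", "jane@example.com",
    "Brute Force", "ru_123", "de_1", "42", now=1_000_000,
)
print(active["expiration_time"])  # 1604800
print(suppression_comment(active, "Jane Doe"))
```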

Summary

By combining the strengths of Google SecOps SOAR playbooks with the flexibility of data tables, organizations can now build a powerful and adaptable alert suppression system. This allows SOCs to move from being reactive to a flood of alerts to proactively managing them, ultimately strengthening their overall security posture. Remember to tailor the data table columns, playbook logic, and integration parameters to fit the specific needs and nuances of your environment.