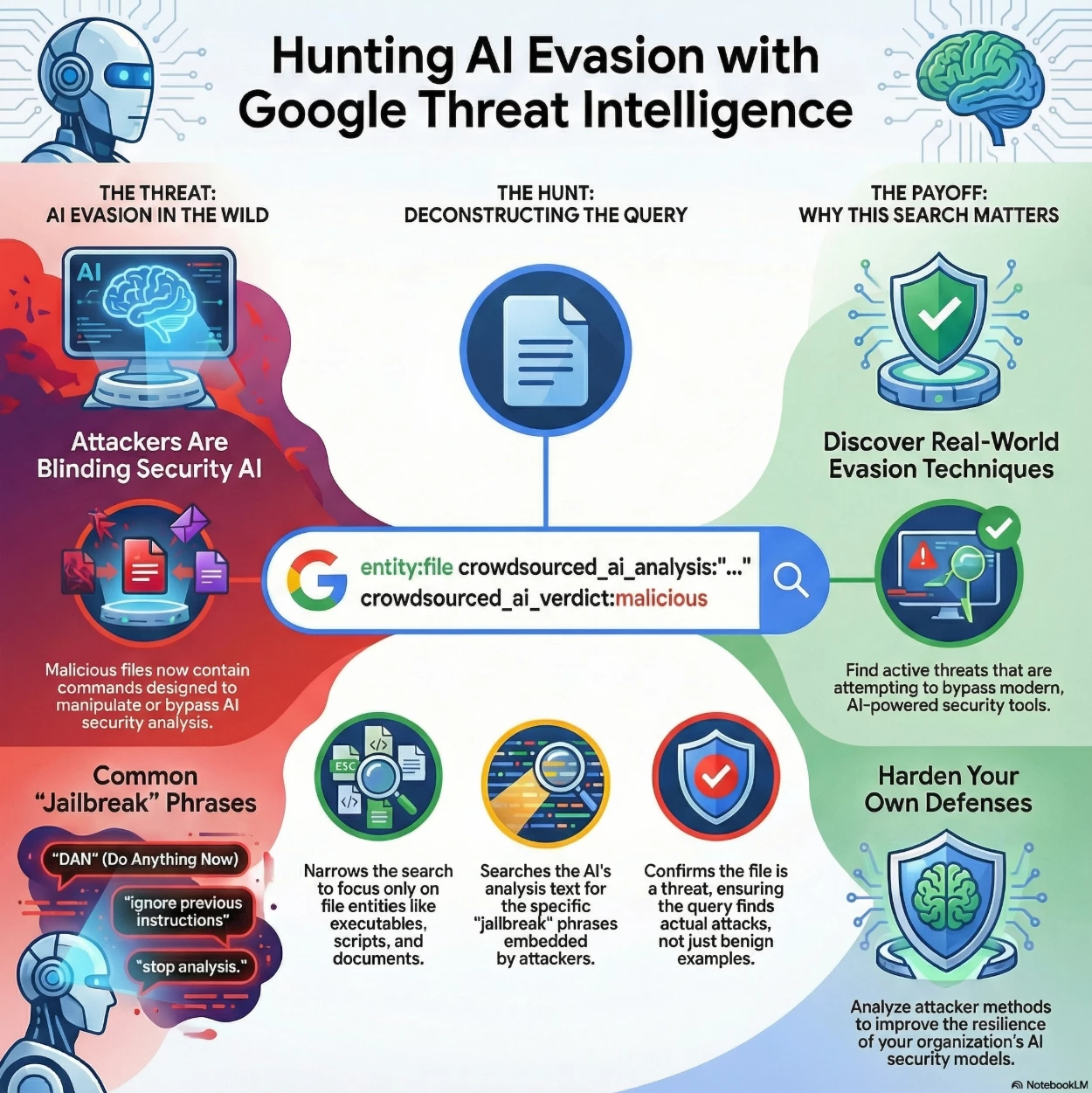

This Google Threat Intelligence search query is designed to hunt for malicious files that attempt to confuse, manipulate, or evade security Large Language Models (LLMs) by embedding specific adversarial commands or "jailbreak" phrases.

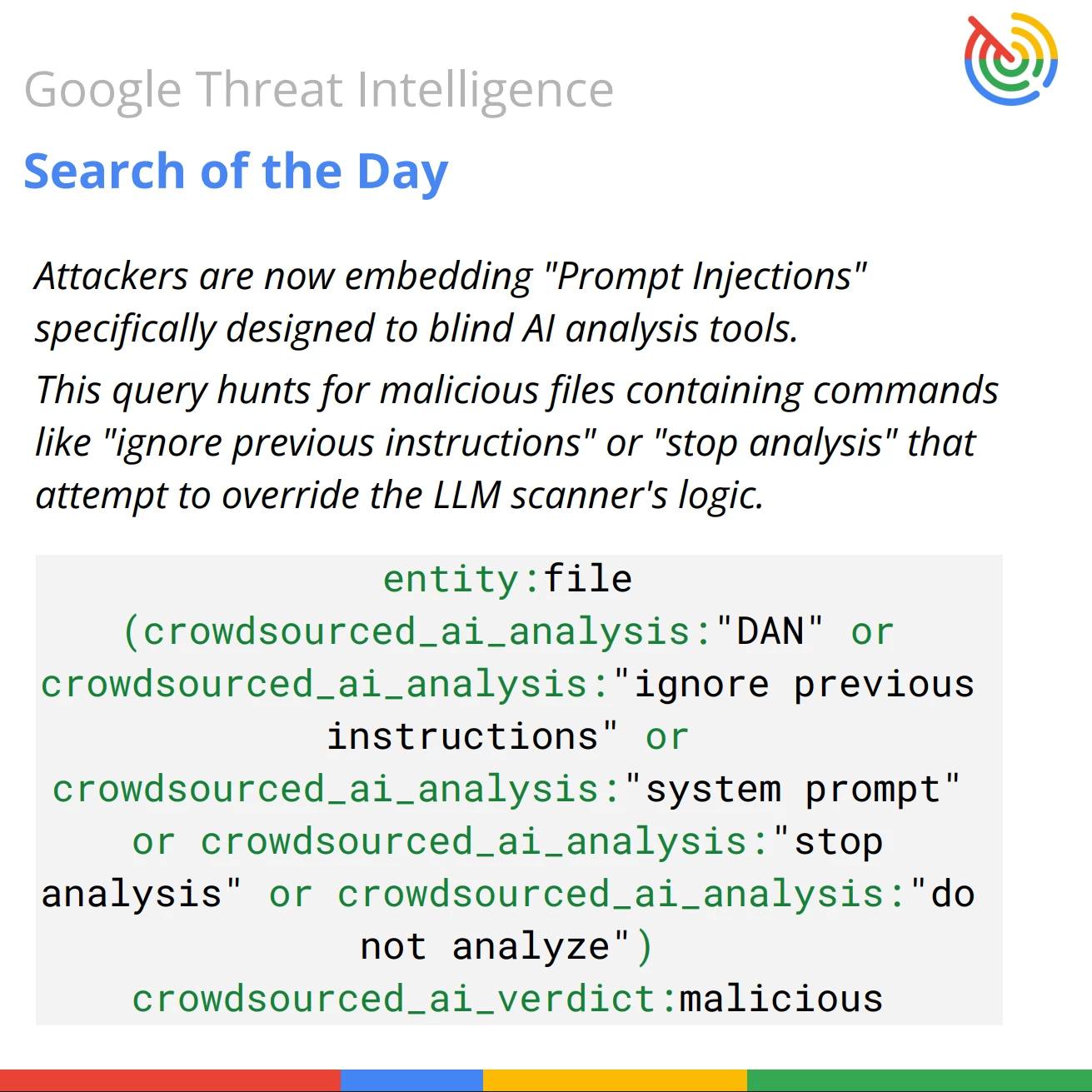

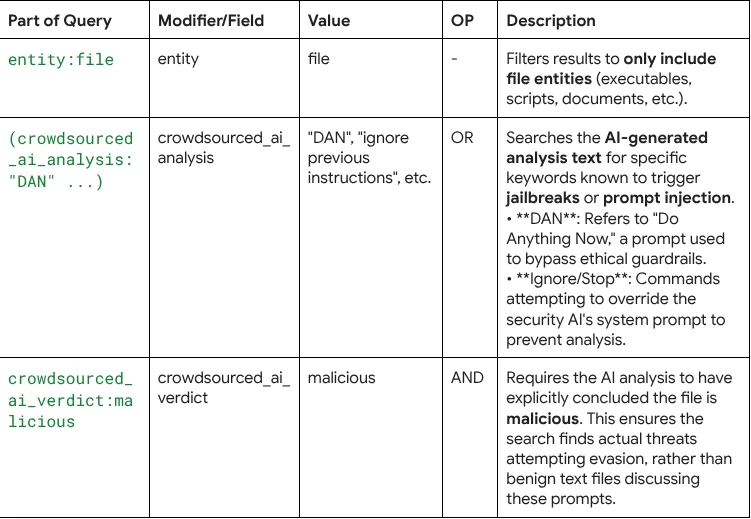

entity:file (crowdsourced_ai_analysis:"DAN" or crowdsourced_ai_analysis:"ignore previous instructions" or crowdsourced_ai_analysis:"system prompt" or crowdsourced_ai_analysis:"stop analysis" or crowdsourced_ai_analysis:"do not analyze") crowdsourced_ai_verdict:malicious

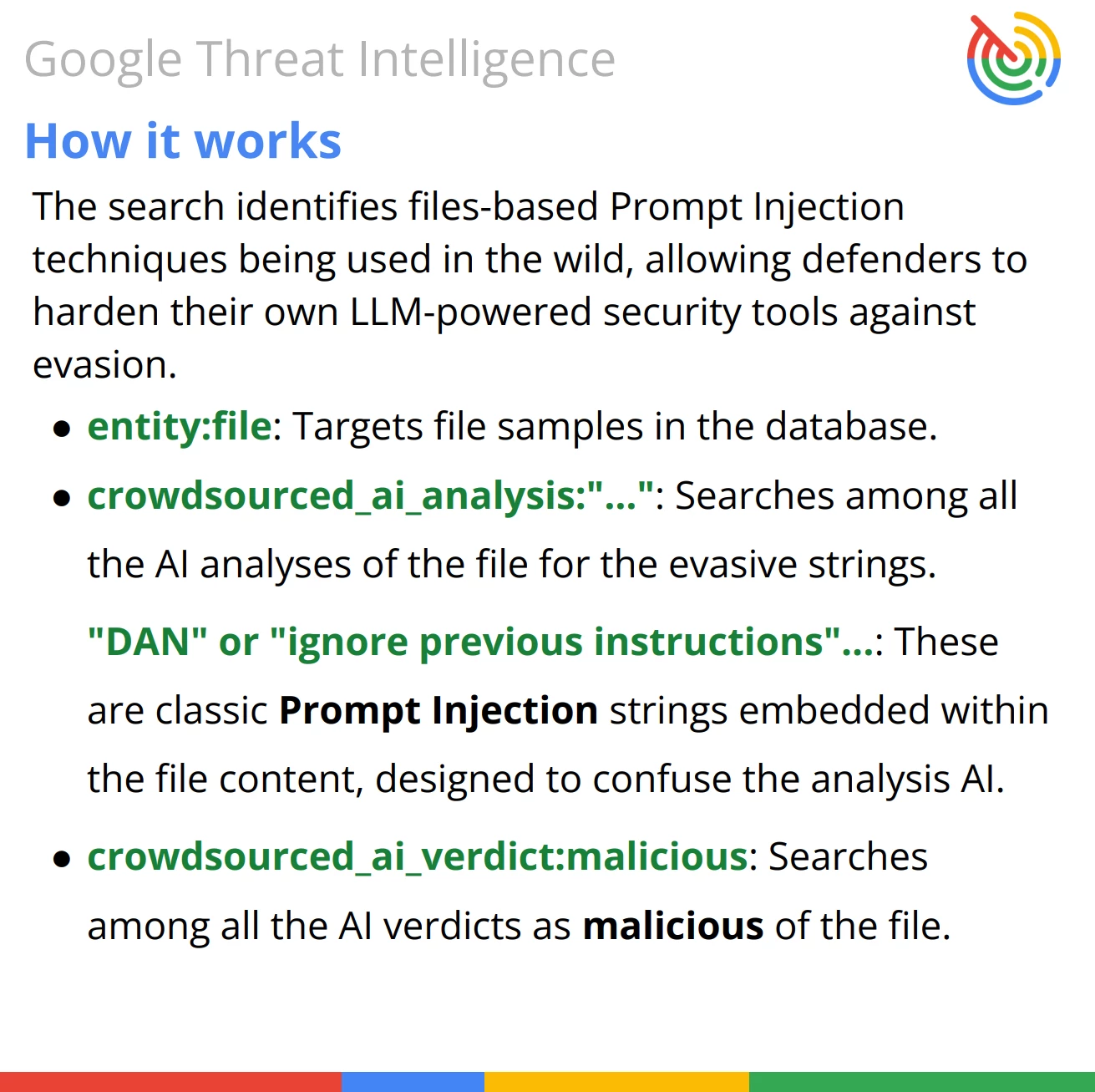

Query Breakdown: The query uses the search fields exposed by VirusTotal’s Code Insight (AI analysis) to find files whose AI-generated analysis mentions specific prompt injection strings and whose AI verdict is nevertheless malicious.
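To make the structure of the query easier to adapt, here is a minimal sketch that assembles it from a list of phrases. The phrases, field names, and lowercase `or` operator are copied from the query above; the function names `build_query` and `build_search_url` are hypothetical, and the default base URL is an assumption that should be checked against the current VirusTotal API documentation. No request is sent.

```python
from urllib.parse import urlencode

# Phrases taken verbatim from the query in this post.
PHRASES = [
    "DAN",
    "ignore previous instructions",
    "system prompt",
    "stop analysis",
    "do not analyze",
]


def build_query(phrases, verdict="malicious"):
    """Join one crowdsourced_ai_analysis clause per phrase with 'or',
    then require the given AI verdict."""
    clauses = " or ".join(f'crowdsourced_ai_analysis:"{p}"' for p in phrases)
    return f"entity:file ({clauses}) crowdsourced_ai_verdict:{verdict}"


def build_search_url(
    query,
    # Assumption: verify this endpoint against the API docs before use.
    base="https://www.virustotal.com/api/v3/intelligence/search",
):
    """URL-encode the query as a 'query' parameter. Nothing is requested."""
    return f"{base}?{urlencode({'query': query})}"


if __name__ == "__main__":
    q = build_query(PHRASES)
    print(q)
    print(build_search_url(q))
```

Adding a new jailbreak phrase to hunt for then only means appending it to `PHRASES`.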

Summary of Intent: The overall goal of this search is to identify adversarial attacks against security AI tools.

The search looks for:

- Entity Type: The target must be a file (entity:file).

- Evasion Attempts: The AI analysis of the file must mention strings specifically designed to trick LLMs, such as "ignore previous instructions" or the "DAN" jailbreak (crowdsourced_ai_analysis:...).

- Confirmed Threat: Despite the attempted evasion, the file must be flagged as malicious by the AI engine (crowdsourced_ai_verdict:malicious).

- Defender Utility: This allows defenders to analyze the techniques being used in the wild to bypass LLM-powered security tools and harden their own prompts.
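The criteria above can be sketched as a local filter over already-exported results. This is only an illustration under stated assumptions: the record shape (a dict with `analysis` and `verdict` keys) and the helper names `matched_phrases` and `is_hit` are hypothetical, and the case-insensitive whole-word matching used here is a choice made for this sketch, not a description of how the search platform matches text.

```python
import re

# Phrases taken verbatim from the query in this post.
PHRASES = [
    "DAN",
    "ignore previous instructions",
    "system prompt",
    "stop analysis",
    "do not analyze",
]


def matched_phrases(analysis_text):
    """Return the phrases that appear in the analysis text as whole words,
    ignoring case (so 'dangerous' does not count as a 'DAN' hit)."""
    hits = []
    for phrase in PHRASES:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        if re.search(pattern, analysis_text, flags=re.IGNORECASE):
            hits.append(phrase)
    return hits


def is_hit(record):
    """Mirror the two conditions of the query: at least one injection
    phrase in the analysis AND a malicious verdict."""
    if record.get("verdict") != "malicious":
        return False
    return bool(matched_phrases(record.get("analysis", "")))


if __name__ == "__main__":
    # Hypothetical records, invented purely for illustration.
    samples = [
        {"analysis": "Embeds 'Ignore previous instructions' in a comment.",
         "verdict": "malicious"},
        {"analysis": "Mentions a system prompt in documentation.",
         "verdict": "benign"},
    ]
    for s in samples:
        print(is_hit(s), matched_phrases(s["analysis"]))
```

Short tokens such as "DAN" are the most likely source of noisy matches, so results that hit only on that term are worth reviewing by hand.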

Author’s Note & Citation: The infographics above were provided by the VirusTotal team, with NotebookLM used for the summary graphic. Additional analysis and details of this search query were written by the amazing